Understanding AI's Learning Process: Implications For Responsible Application

Types of AI Learning

AI systems learn through various methods, each with its own strengths and weaknesses. The three paradigms covered here are supervised, unsupervised, and reinforcement learning. Understanding how they differ is key to choosing the right approach for a given task and anticipating the challenges it brings.

Supervised Learning

In supervised learning, the AI model learns from labeled data, where each input is paired with the correct output. The model identifies patterns that link inputs to outputs and learns a mapping that it can then apply to new, unseen inputs.
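To make the idea concrete, here is a minimal Python sketch of one of the simplest supervised methods, a nearest-centroid classifier, chosen for brevity rather than taken from any particular system. It averages the labeled examples for each class and assigns a new input to the class whose average is closest. The spam-filter features, the function names, and the toy data are hypothetical.

```python
from math import dist


def fit_centroids(examples):
    """Learn one centroid (mean feature vector) per label from (features, label) pairs."""
    sums, counts = {}, {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + x for s, x in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def predict(centroids, features):
    """Map a new input to the label whose centroid is nearest (Euclidean distance)."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical labeled data for a spam filter: each input is
# [number of links, fraction of words in capitals], paired with the correct output.
training_data = [
    ([8.0, 0.6], "spam"),
    ([9.0, 0.7], "spam"),
    ([1.0, 0.1], "not spam"),
    ([0.0, 0.2], "not spam"),
]

model = fit_centroids(training_data)
print(predict(model, [7.0, 0.5]))  # spam
```

Real systems typically use far more data and more flexible models, but the workflow is the same: fit on labeled pairs, then predict on inputs the model has not seen.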

Examples:

- Image recognition: Classifying images of cats and dogs by learning to associate image features with their respective labels.

- Spam detection: Identifying spam emails based on features like sender address, email content, and keywords.

- Medical diagnosis: Predicting diseases based on patient symptoms and medical history.

Challenges:

- Requires large amounts of accurately labeled data, which can be expensive and time-consuming to collect.

- Prone to bias if the training data reflects existing societal biases. For instance, a facial recognition system trained primarily on Caucasian faces may perform poorly on people from other ethnic backgrounds.

Unsupervised Learning

Unsupervised learning involves training an AI model on unlabeled data, allowing it to identify patterns and structures without explicit guidance. The goal is to discover hidden relationships and insights in the data.
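Customer segmentation is often done with k-means clustering. The sketch below is a minimal pure-Python version of Lloyd's algorithm, assuming numeric features and a cluster count `k` chosen in advance; the customer data and the function name `kmeans` are illustrative. Note that no labels are supplied: the groups emerge from the data alone.

```python
import random
from math import dist


def kmeans(points, k, iterations=20, seed=0):
    """Lloyd's algorithm: alternately assign points to the nearest centroid and recompute centroids."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]  # start from k distinct data points
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of the points assigned to it.
        for i, members in enumerate(clusters):
            if members:  # keep the previous centroid if a cluster ends up empty
                centroids[i] = [sum(coords) / len(members) for coords in zip(*members)]
    return centroids, clusters


# Hypothetical unlabeled customer data: [visits per year, average spend].
customers = [[2, 20], [3, 25], [2, 22], [30, 200], [28, 210], [32, 190]]
centroids, segments = kmeans(customers, k=2)
for segment in segments:
    print(segment)
```

Which segment ends up first depends on the random starting points, a small reminder that the meaning of each cluster has to be supplied by a human.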

Examples:

- Customer segmentation: Grouping customers into distinct segments based on purchasing behavior and demographics.

- Anomaly detection: Identifying unusual data points that deviate from the norm, such as fraudulent transactions or equipment malfunctions.

- Dimensionality reduction: Reducing the number of variables in a dataset while preserving important information.
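A simple way to flag anomalies is to measure how many standard deviations each value lies from the mean (its z-score). This is a minimal sketch assuming a single numeric feature and a hypothetical threshold of 2; production fraud or fault detection usually relies on richer models.

```python
from statistics import mean, stdev


def zscore_anomalies(values, threshold=2.0):
    """Return the indices of values lying more than `threshold` standard deviations from the mean."""
    mu, sigma = mean(values), stdev(values)
    if sigma == 0:
        return []  # all values identical: nothing deviates
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


# Hypothetical transaction amounts; the last one is unusual.
amounts = [25, 30, 27, 22, 31, 29, 26, 500]
print(zscore_anomalies(amounts))  # [7]
```

One caveat of this design: an extreme value also inflates the standard deviation it is measured against, so on small samples a robust variant based on the median is often preferred.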

Challenges:

- Results can be hard to interpret, because the model does not explain what the patterns it finds (such as clusters) actually mean.

- There is less control over the learning process compared to supervised learning. The model might uncover unexpected patterns that are difficult to interpret or irrelevant to the task at hand.

Reinforcement Learning

Reinforcement learning trains an AI agent to interact with an environment and learn through trial and error. The agent receives rewards for desirable actions and penalties for undesirable ones, and gradually learns a strategy (a policy) that maximizes its cumulative reward over time.
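The trial-and-error loop can be illustrated with tabular Q-learning, one classic reinforcement learning algorithm (used here as an example, not as the only approach). The sketch assumes a hypothetical five-state corridor where the agent starts at the left end, earns +1 for reaching the right end, and pays a small penalty for every other move. The learning rate `alpha`, discount `gamma`, and exploration rate `epsilon` are illustrative values.

```python
import random

N_STATES = 5        # states 0..4; state 4 is the goal
ACTIONS = (-1, +1)  # move left or move right


def step(state, action):
    """Hypothetical environment: +1 for reaching the goal, -0.01 for any other move."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True
    return next_state, -0.01, False


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit current estimates, sometimes explore at random.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            next_state, reward, done = step(state, action)
            # Bootstrap from the best next action unless the episode has ended.
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}
print(policy)  # {0: 1, 1: 1, 2: 1, 3: 1}: every non-goal state prefers moving right
```

The small per-step penalty is itself a reward-design choice: it nudges the agent toward short paths, which is exactly the kind of decision that makes reward design delicate.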

Examples:

- Game playing: Training AI agents to play games like chess, Go, or video games at a superhuman level.

- Robotics: Training robots to perform complex tasks in physical environments, such as navigating a maze or assembling objects.

- Resource management: Optimizing the allocation of resources in a dynamic environment.

Challenges:

- Requires careful design of the reward function. If the reward does not fully capture the desired behavior, the agent may find loopholes that earn a high reward through unexpected and undesirable behavior.

- Can be computationally expensive and time-consuming to train, since learning by trial and error often takes a very large number of interactions with the environment.

Bias in AI Learning

Bias in AI systems is a significant concern because it can perpetuate and amplify existing societal inequalities. Understanding where bias comes from and how to mitigate it is crucial for responsible AI development.

Data Bias

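Data bias refers to biases present in the data used to train AI models, such as under-representation of some groups or labels that reflect historical discrimination. Models trained on such data can produce unfair or discriminatory outcomes.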

Examples:

- Facial recognition systems exhibiting higher error rates for individuals with darker skin tones.

- Loan applications being unfairly rejected based on demographic data that reflects historical biases.

- Recruitment tools that disproportionately favor male candidates.

Mitigation Strategies:

- Careful data collection and curation to ensure representative datasets.

- Bias mitigation techniques, such as re-weighting training samples or adversarial debiasing.

- Auditing datasets for potential biases before training AI models.
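The auditing and re-weighting ideas can be sketched together. The code below first measures the share of positive labels in each group, then applies a scheme often called reweighing, which gives each sample the weight P(group) * P(label) / P(group, label) so that group and label are independent in the weighted data. The loan data and function names are hypothetical, and this is a minimal sketch rather than a complete mitigation pipeline.

```python
from collections import Counter


def positive_rate_by_group(groups, labels, weights=None):
    """Audit helper: (weighted) share of positive labels within each group."""
    if weights is None:
        weights = [1.0] * len(labels)
    totals, positives = Counter(), Counter()
    for g, y, w in zip(groups, labels, weights):
        totals[g] += w
        positives[g] += w * y
    return {g: positives[g] / totals[g] for g in totals}


def reweigh(groups, labels):
    """Weight each sample by P(group) * P(label) / P(group, label) so that,
    after weighting, group membership and label are statistically independent."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


# Hypothetical historical loan decisions: group A was approved (label 1) far more often.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

print(positive_rate_by_group(groups, labels))  # {'A': 0.75, 'B': 0.25}
weights = reweigh(groups, labels)
balanced = positive_rate_by_group(groups, labels, weights)
print({g: round(r, 2) for g, r in balanced.items()})  # {'A': 0.5, 'B': 0.5}
```

The resulting weights would then be passed to a learning algorithm that accepts per-sample weights.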

Algorithmic Bias

Algorithmic bias refers to biases introduced by the design of the algorithm itself, such as its objective, the features it uses, or its modeling assumptions, rather than by the training data alone.

Examples:

- Algorithms that inadvertently favor certain groups over others due to design choices or assumptions.

- Algorithms that amplify existing biases in the data, leading to even more discriminatory outcomes.

Mitigation Strategies:

- Algorithmic auditing to identify and address potential biases in algorithms.

- Development of fairness-aware algorithms that explicitly incorporate fairness constraints.

- Transparency and explainability in algorithm design.
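An algorithmic audit usually starts by comparing a model's outputs across groups. The sketch below computes two common group-fairness measures, the selection rate (compared under demographic parity) and the true positive rate (compared under equal opportunity), and reports the largest gap between groups. The predictions, group labels, and function names are hypothetical, and which metric matters depends on the application.

```python
def rate(values):
    """Mean of a list of 0/1 values (NaN if the list is empty)."""
    return sum(values) / len(values) if values else float("nan")


def fairness_audit(groups, y_true, y_pred):
    """Per-group selection rate and true positive rate for a binary classifier."""
    report = {}
    for g in sorted(set(groups)):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        report[g] = {
            # Demographic parity compares how often each group is predicted positive.
            "selection_rate": rate([y_pred[i] for i in idx]),
            # Equal opportunity compares how often truly positive cases are caught.
            "true_positive_rate": rate([y_pred[i] for i in idx if y_true[i] == 1]),
        }
    return report


def largest_gap(report, metric):
    """Difference between the best- and worst-treated groups on one metric."""
    values = [m[metric] for m in report.values()]
    return max(values) - min(values)


# Hypothetical model outputs for two groups with identical ground truth.
groups = ["A"] * 5 + ["B"] * 5
y_true = [1, 1, 0, 1, 0, 1, 1, 0, 1, 0]
y_pred = [1, 1, 1, 1, 0, 1, 0, 0, 0, 0]

report = fairness_audit(groups, y_true, y_pred)
print(round(largest_gap(report, "selection_rate"), 2))      # 0.6
print(round(largest_gap(report, "true_positive_rate"), 2))  # 0.67
```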

The Ethical Implications of AI Learning

The ethical implications of AI learning are far-reaching. Three areas need particular attention: transparency, accountability, and privacy. Addressing them is crucial for ensuring that AI benefits society as a whole.

Transparency and Explainability

Understanding how an AI model arrives at a decision is critical for accountability and trust. Many complex AI models, however, are "black boxes," making it difficult to understand their decision-making processes.

- Challenges: The inherent complexity of deep learning models makes interpretation difficult.

- Solutions: Explainable AI (XAI) techniques aim to make AI models more transparent and understandable. This includes methods for visualizing model internals, generating explanations for individual predictions, and developing simpler, more interpretable models.
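One widely used model-agnostic explanation technique is permutation feature importance: shuffle one input feature at a time and measure how much the model's accuracy drops. The sketch below treats the model as an opaque function; `black_box`, the toy data, and the number of repeats are hypothetical choices for illustration.

```python
import random


def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)


def permutation_importance(model, rows, labels, repeats=10, seed=0):
    """Average drop in accuracy when one feature column is randomly shuffled.

    Shuffling breaks the link between that feature and the labels, so a large
    drop means the model relies heavily on the feature."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / repeats)
    return importances


# A stand-in "black box" that (unknown to the auditor) only looks at feature 0.
def black_box(row):
    return int(row[0] > 0.5)


rows = [[i / 10, (i * 7 % 10) / 10] for i in range(10)]
labels = [black_box(r) for r in rows]
print(permutation_importance(black_box, rows, labels))  # feature 1 scores 0.0
```

Because it only needs predictions, the same approach works for deep networks or any other model whose internals are hard to inspect, although it describes global behavior rather than explaining individual predictions.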

Accountability and Responsibility

Determining who is responsible when an AI system makes a mistake is a significant ethical challenge, because responsibility may be shared among developers, users, and other stakeholders.

- Challenges: Establishing clear lines of responsibility can be difficult, especially for complex systems with multiple contributing factors.

- Solutions: Clear guidelines and regulations are needed to establish accountability. Ethical frameworks for AI development and deployment are also essential.

Privacy and Security

AI systems often process sensitive personal data, raising significant privacy and security concerns.

- Challenges: Protecting data from unauthorized access and misuse. Data breaches can have severe consequences for individuals and organizations.

- Solutions: Data anonymization techniques, robust security measures, and strong data governance practices are needed to protect privacy and security.
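One classic anonymization check is k-anonymity: after direct identifiers are removed, every combination of quasi-identifiers (attributes such as age and ZIP code that could be linked to outside data) must be shared by at least k records. The sketch below assumes hypothetical patient records and a simple, illustrative generalization rule (10-year age bands, truncated ZIP codes).

```python
from collections import Counter


def generalize(record):
    """Coarsen quasi-identifiers: exact age -> 10-year band, ZIP code -> first three digits."""
    low = record["age"] // 10 * 10
    return {
        "age": f"{low}-{low + 9}",
        "zip": record["zip"][:3] + "**",
        "diagnosis": record["diagnosis"],  # sensitive attribute, kept for analysis
    }


def is_k_anonymous(records, quasi_identifiers, k):
    """True if every combination of quasi-identifier values is shared by at least k records."""
    counts = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return all(c >= k for c in counts.values())


# Hypothetical patient records (names and other direct identifiers already removed).
patients = [
    {"age": 34, "zip": "12345", "diagnosis": "flu"},
    {"age": 37, "zip": "12309", "diagnosis": "asthma"},
    {"age": 52, "zip": "67890", "diagnosis": "flu"},
    {"age": 58, "zip": "67812", "diagnosis": "diabetes"},
]
quasi_ids = ("age", "zip")

print(is_k_anonymous(patients, quasi_ids, k=2))                           # False
print(is_k_anonymous([generalize(p) for p in patients], quasi_ids, k=2))  # True
```

k-anonymity is not sufficient on its own: if every record in a group shares the same sensitive value, that value is still revealed, which is why it is usually combined with other safeguards.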

Conclusion

Understanding how AI learns is essential for applying it responsibly. By recognizing the different learning paradigms, addressing potential biases, and confronting ethical implications, we can harness the transformative power of AI while minimizing its risks. The development and deployment of AI systems must prioritize transparency, accountability, and fairness. Continued research and collaboration are essential to ensure that AI benefits all of humanity. Let's work together to build a future where AI is developed and used ethically and responsibly, paving the way for a more equitable and prosperous society. Embrace responsible AI learning practices and help shape the future of AI.

Featured Posts

-

Jaime Munguias Rematch Victory Strategic Adjustments Pay Off Against Bruno Surace

May 31, 2025

Jaime Munguias Rematch Victory Strategic Adjustments Pay Off Against Bruno Surace

May 31, 2025 -

Banksy Auction Iconic Broken Heart Wall On Sale

May 31, 2025

Banksy Auction Iconic Broken Heart Wall On Sale

May 31, 2025 -

Empanadas De Jamon Y Queso Sin Horno Receta Facil Y Rica

May 31, 2025

Empanadas De Jamon Y Queso Sin Horno Receta Facil Y Rica

May 31, 2025 -

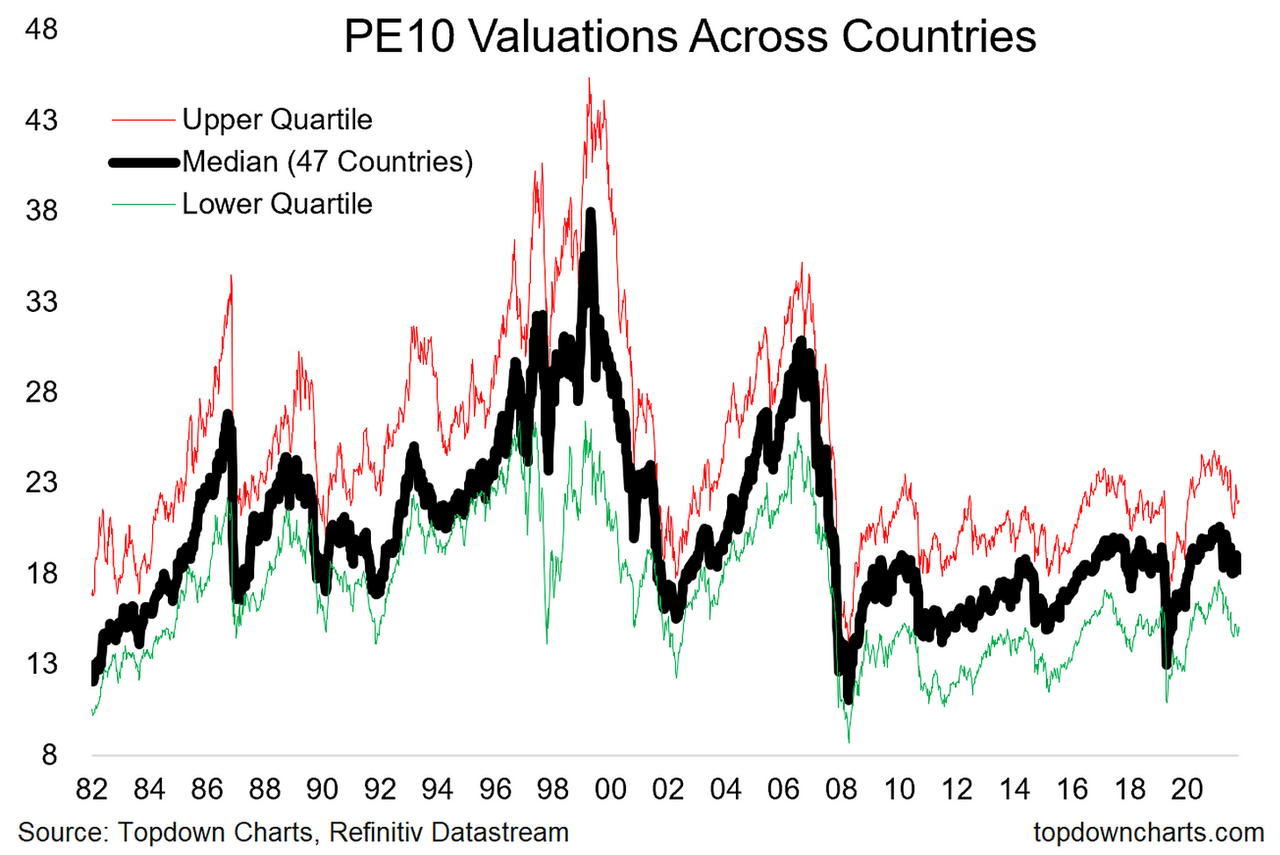

Stock Market Valuations Bof As Reassuring Argument For Investors

May 31, 2025

Stock Market Valuations Bof As Reassuring Argument For Investors

May 31, 2025 -

Djokovic In Tarihi Zirvesi Tenis Duenyasinda Bir Ilk

May 31, 2025

Djokovic In Tarihi Zirvesi Tenis Duenyasinda Bir Ilk

May 31, 2025