The Clash Over AI: Trump Administration Vs. European Union Regulations

The Trump Administration's Approach to AI: A Laissez-Faire Stance

The Trump administration's approach to AI regulation can be characterized as largely laissez-faire. This preference for minimal government intervention stemmed from a belief that fostering innovation and economic growth through deregulation was paramount.

Limited Federal Intervention

The administration largely prioritized self-regulation by the industry, believing that excessive government oversight would stifle technological advancement.

- Few specific federal AI regulations were implemented. The focus remained on existing frameworks applicable to various aspects of AI development, rather than creating new, comprehensive AI-specific legislation.

- Emphasis on industry self-regulation and market forces. The belief was that competition and market pressures would drive responsible AI development and deployment.

- Arguments against stringent regulations highlighted the potential to hinder innovation and competitiveness. The concern was that burdensome regulations could stifle the US's ability to remain a global leader in AI.

Focus on National Security and Economic Competitiveness

While advocating for limited regulation, the Trump administration recognized the critical role of AI in national security and economic competitiveness.

- Initiatives focused on AI's application in defense and national security. This included funding research and development in areas like autonomous weapons systems and AI-powered intelligence gathering.

- Strategic investments in AI research and development. Funding was channeled towards universities and research institutions to bolster the US's capabilities in AI.

- Strategies to counter other nations' AI advancements. The administration sought to maintain US technological leadership through investments and strategic partnerships.

The European Union's Approach to AI: A Risk-Based Regulatory Framework

In stark contrast to the US's largely hands-off approach, the European Union has adopted a far more proactive and risk-based regulatory framework for AI. This approach prioritizes ethical considerations and data privacy.

The GDPR's Influence on AI Regulation

The General Data Protection Regulation (GDPR), adopted in 2016 and applicable since May 2018, has profoundly influenced the EU's approach to AI regulation. Data privacy is central to its concerns.

- GDPR principles like data minimization and purpose limitation are crucial for responsible AI development. The use of personal data in AI systems must be justified, limited, and transparent.

- Strong emphasis on a lawful basis for data processing, with informed user consent among the most prominent. Where processing relies on consent, users must understand how their data is being used in AI systems and have the right to withdraw that consent.

- Accountability mechanisms ensure that organizations using AI comply with data protection regulations. Data controllers are responsible for demonstrating compliance and addressing potential breaches.

Emphasis on Ethical Considerations and Transparency

The EU's approach emphasizes ethical considerations and transparency in AI systems.

- The AI Act, proposed in 2021 and in force since August 2024, establishes a comprehensive regulatory framework for AI. This includes risk-based classification of AI systems and specific requirements for high-risk applications.

- Focus on explainability and accountability of AI algorithms. There's a strong push to ensure that AI systems are transparent and their decision-making processes can be understood.

- Mitigation of bias and discrimination in AI systems is a key objective. The EU aims to prevent AI from perpetuating or exacerbating existing societal inequalities.

A Differentiated Approach Based on Risk Levels

The EU's regulatory framework differentiates AI systems based on their level of risk, applying varying regulatory measures accordingly.

- High-risk AI systems, such as those used in healthcare or law enforcement, are subject to stricter regulations. This includes rigorous testing, certification, and ongoing monitoring.

- Lower-risk AI systems face less stringent requirements. The approach is proportionate to the potential harm that could arise from the AI system's use.

- This risk-based approach aims to ensure the safety and reliability of AI systems while promoting innovation in less risky areas.

The Clash and Its Global Implications

The contrasting philosophies of the Trump administration and the EU represent a fundamental clash in approaches to AI regulation. This has significant global implications.

- Different regulatory frameworks create challenges for international trade and cooperation. Companies face complexities in complying with diverse regulations across jurisdictions.

- The divergence could influence the trajectory of global AI leadership. The choice between fostering rapid innovation versus prioritizing ethical considerations will have lasting impacts.

- The global adoption of ethical standards for AI is hampered by differing regulatory approaches. A unified global framework for ethical AI development is needed.

Conclusion

The contrasting approaches of the Trump administration and the EU to AI regulation highlight a fundamental tension between the pursuit of rapid technological advancement and the need for ethical considerations and robust safeguards. The EU's risk-based approach prioritizes data privacy, transparency, and accountability, while the Trump administration favored a more hands-off approach focused on fostering innovation and national competitiveness. This clash has profound implications for the future of AI development, global technological standards, and international cooperation.

The evolving landscape of AI regulation demands ongoing discussion and engagement from businesses and policymakers alike. We urge readers to explore the EU's AI Act and reports on US AI policy to better understand the complexities of AI regulation. The future of AI depends on finding a balance between innovation and responsible development, and informed participation in the conversation is key.

Featured Posts

-

Stock Market Today Dow Futures Fluctuate Chinas Economic Support Amid Trade Tensions

Apr 26, 2025

Stock Market Today Dow Futures Fluctuate Chinas Economic Support Amid Trade Tensions

Apr 26, 2025 -

Analyst Reaction To Abb Vie Abbv Q Quarter Earnings Positive Outlook On Growth

Apr 26, 2025

Analyst Reaction To Abb Vie Abbv Q Quarter Earnings Positive Outlook On Growth

Apr 26, 2025 -

California Governor Newsom Condemns Internal Democratic Toxicity

Apr 26, 2025

California Governor Newsom Condemns Internal Democratic Toxicity

Apr 26, 2025 -

Newsoms Bannon Podcast Appearance Draws Sharp Criticism From Former Gop Representative

Apr 26, 2025

Newsoms Bannon Podcast Appearance Draws Sharp Criticism From Former Gop Representative

Apr 26, 2025 -

Access To Birth Control The Impact Of Over The Counter Availability Post Roe

Apr 26, 2025

Access To Birth Control The Impact Of Over The Counter Availability Post Roe

Apr 26, 2025

Latest Posts

-

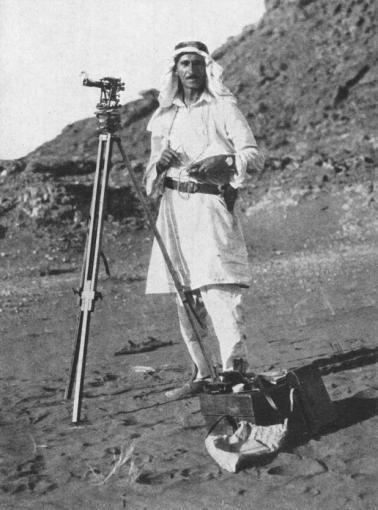

The Unlikely Path Of Ahmed Hassanein Could He Be The First Egyptian In The Nfl

Apr 26, 2025

The Unlikely Path Of Ahmed Hassanein Could He Be The First Egyptian In The Nfl

Apr 26, 2025 -

Ahmed Hassanein An Egyptians Path To The Nfl Draft

Apr 26, 2025

Ahmed Hassanein An Egyptians Path To The Nfl Draft

Apr 26, 2025 -

Is Ahmed Hassanein Egypts Next Nfl Star A Look At His Draft Prospects

Apr 26, 2025

Is Ahmed Hassanein Egypts Next Nfl Star A Look At His Draft Prospects

Apr 26, 2025 -

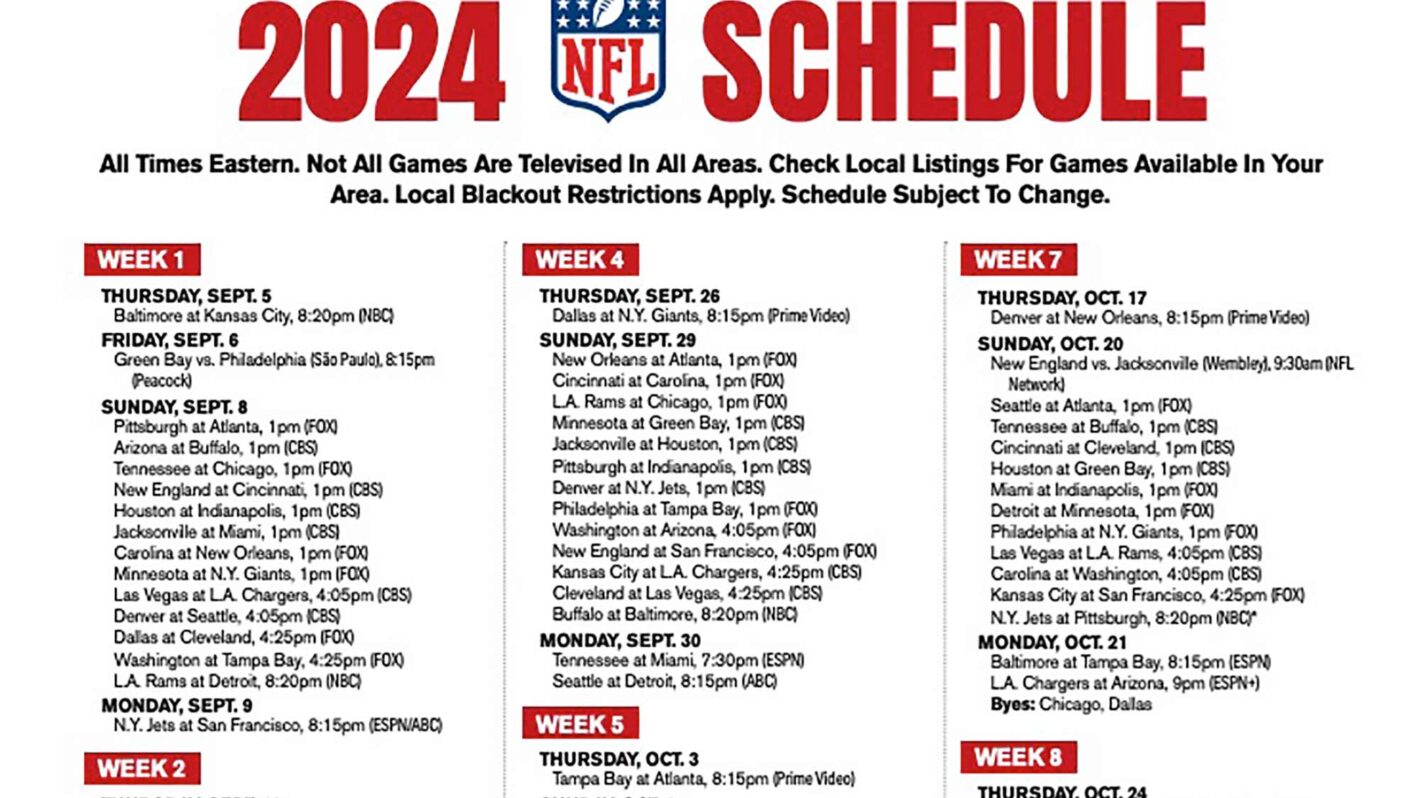

Thursday Night Football Nfl Drafts First Round Begins In Green Bay

Apr 26, 2025

Thursday Night Football Nfl Drafts First Round Begins In Green Bay

Apr 26, 2025 -

Will Ahmed Hassanein Break Barriers As Egypts First Nfl Draft Selection

Apr 26, 2025

Will Ahmed Hassanein Break Barriers As Egypts First Nfl Draft Selection

Apr 26, 2025