Reddit's Tougher Stance On Upvotes For Violent Content


Understanding Reddit's Revised Content Policy Regarding Violence

Reddit's previous approach to violent content relied heavily on user reports and reactive moderation. Rules against violent content existed, but enforcement was inconsistent, and the upvote system often amplified harmful material. Because the platform's algorithm was designed to prioritize popular content, it boosted the visibility of violent posts even when they violated community guidelines.

The recent changes represent a significant shift. Reddit is now working to reduce the role of upvotes in determining the visibility of violent content, so that even a violent post with many upvotes is less likely to be promoted to a wider audience. The platform is also investing more heavily in proactive content moderation, using a combination of automated tools and human moderators to identify and remove harmful content before it gains significant traction.

- Violent content now being targeted: Graphic depictions of violence, glorification of violence, threats of violence, and content that incites violence against individuals or groups.

- What constitutes "violent content" under the new guidelines: Reddit has provided clearer definitions and examples to help users and moderators recognize a violation, reducing ambiguity and improving consistency in enforcement.

- Changes in reporting mechanisms: Reporting has been streamlined, making it easier for users to flag potentially violent content for review by moderators.
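The de-emphasis of upvotes described above can be illustrated with a minimal sketch. Reddit has not published its ranking formula, so everything here is an assumption made for illustration: the `Post` fields, the `flagged_violent` flag, the `visibility_score` function, and the `violent_weight` value are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    upvotes: int
    # Hypothetical flag, assumed to be set by moderators or automated tools.
    flagged_violent: bool


def visibility_score(post: Post, violent_weight: float = 0.25) -> float:
    """Return a ranking score in which upvotes count less for flagged posts.

    `violent_weight` is an assumed tuning parameter, not a documented value.
    """
    weight = violent_weight if post.flagged_violent else 1.0
    return post.upvotes * weight


posts = [
    Post("Community meetup photos", upvotes=800, flagged_violent=False),
    Post("Graphic fight video", upvotes=2500, flagged_violent=True),
]

# Sort from most to least visible using the dampened score.
ranked = sorted(posts, key=visibility_score, reverse=True)
for post in ranked:
    print(f"{visibility_score(post):7.1f}  {post.title}")
```

In this sketch the flagged post has roughly three times as many upvotes but still ranks below the unflagged one, which is the behavior the policy change aims for. A real system would likely combine many more signals than a single multiplier.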

The Impact of Upvotes on the Visibility of Violent Content

Previously, the upvote system on Reddit acted as a powerful amplifier for violent content. Posts with a high number of upvotes were pushed higher in search results and subreddit feeds, increasing their visibility and reach. This often created a feedback loop, where the popularity of a violent post led to even more upvotes, further amplifying its reach and potentially normalizing or even encouraging such behavior.

Reddit's algorithm, while designed to promote engaging content, inadvertently contributed to this problem. The algorithm's emphasis on popularity meant that violent content, if upvoted enough, could quickly gain widespread visibility, potentially exposing vulnerable users to harmful material.

- How upvoted violent content gained traction: In certain subreddits, violent posts have historically gained significant traction through upvotes despite rules against such content.

- The "echo chamber" effect: Upvoted violent content can create echo chambers, where like-minded individuals reinforce each other's views, potentially leading to online radicalization and the spread of extremist ideologies.

- Normalization and desensitization: Repeated exposure to violent content, particularly when amplified by upvotes and algorithmic promotion, can lead to normalization and desensitization, with potential real-world consequences.

Challenges and Criticisms of Reddit's New Approach

Implementing Reddit's new policy presents significant challenges. Accurately and consistently identifying violent content across the platform's vast network of subreddits is a complex task. Human moderators face immense workloads, and automated systems can struggle with nuance and context, potentially leading to both false positives and false negatives.

Critics have raised concerns about potential censorship and limitations on free speech. The line between acceptable content and violent content can be blurry, and the risk of biased moderation is a legitimate concern. Some users argue that the new policy is overly restrictive and infringes upon their ability to express themselves freely.

- Difficulty of consistent identification and removal: The sheer volume of content uploaded to Reddit daily makes consistent moderation a monumental task.

- Potential for bias in moderation: Human moderators might inadvertently introduce bias into their decisions, leading to inconsistencies in enforcement.

- User reactions to the policy change: Feedback has been mixed, with some users praising the changes and others expressing concerns about censorship and overreach.
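The false positives and false negatives mentioned above can be made concrete with a small sketch. It counts how the decisions of an automated filter compare with human-reviewed labels. The function name `confusion_counts` and the sample data are hypothetical and chosen only for illustration; they do not reflect any real Reddit tooling or measurements.

```python
def confusion_counts(predicted, actual):
    """Compare automated flags against human-reviewed labels.

    `predicted[i]` is True if the filter flagged post i as violent;
    `actual[i]` is True if a human reviewer judged it violent.
    """
    counts = {
        "true_positive": 0,   # flagged, and really violent
        "false_positive": 0,  # flagged, but acceptable content
        "false_negative": 0,  # missed violent content
        "true_negative": 0,   # correctly left alone
    }
    for flagged, violent in zip(predicted, actual):
        if flagged and violent:
            counts["true_positive"] += 1
        elif flagged and not violent:
            counts["false_positive"] += 1
        elif not flagged and violent:
            counts["false_negative"] += 1
        else:
            counts["true_negative"] += 1
    return counts


# Made-up sample: five posts, one wrongly flagged and one missed.
predicted = [True, True, False, False, True]
actual = [True, False, True, False, True]

result = confusion_counts(predicted, actual)
print(result)
```

A false positive here corresponds to the over-removal that critics describe as censorship, while a false negative corresponds to violent content that slips through. Tuning a filter usually trades one kind of error against the other.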

The Future of Content Moderation on Reddit and Similar Platforms

Reddit's stricter stance on upvotes for violent content has broader implications for other online platforms. It highlights the ongoing debate about the responsibility of social media companies in combating online violence. Many platforms are grappling with similar challenges, and Reddit's experience provides valuable lessons and potential models for future strategies.

The role of AI and machine learning in content moderation is likely to increase. While these technologies have limitations, they can assist human moderators in identifying and flagging potentially harmful content, improving efficiency and consistency. However, ongoing dialogue between platforms, users, and policymakers remains crucial to ensure that content moderation strategies are both effective and respectful of fundamental rights.

- Similar policy changes on other platforms: Other social media platforms may follow suit, implementing similar strategies to curb the spread of violent content.

- AI and machine learning in content moderation: AI can help automate some aspects of moderation, but human oversight will remain crucial.

- Ongoing dialogue between platforms, users, and policymakers: Effective content moderation requires collaboration and continued refinement of policies and strategies.

Conclusion

This article has explored Reddit's stricter approach to handling upvotes on violent content, highlighting the revised policy, its impact on content visibility, the challenges faced, and its wider implications for online platforms. The changes represent a significant shift in Reddit's content moderation strategy, reflecting a growing awareness of the potential harms of unchecked online violence. Balancing freedom of expression with the need to protect users from harmful content remains a complex challenge.

Stay informed about Reddit's evolving policy on violent content, and take part in constructive discussions about the future of online safety and responsible platform governance. Understanding how Reddit manages violent content and how upvotes affect its visibility helps users navigate the platform safely and contribute to a healthier online environment.

Reddits Tougher Stance On Upvotes For Violent Content

May 18, 2025

Reddits Tougher Stance On Upvotes For Violent Content

May 18, 2025 -

10 Rokiv Rekordiv Teylor Svift Ta Yiyi Fenomenalni Prodazhi Vinilu

May 18, 2025

10 Rokiv Rekordiv Teylor Svift Ta Yiyi Fenomenalni Prodazhi Vinilu

May 18, 2025 -

Groeiende Ondersteuning Voor Uitbreiding Van De Nederlandse Defensie Industrie

May 18, 2025

Groeiende Ondersteuning Voor Uitbreiding Van De Nederlandse Defensie Industrie

May 18, 2025

Latest Posts

-

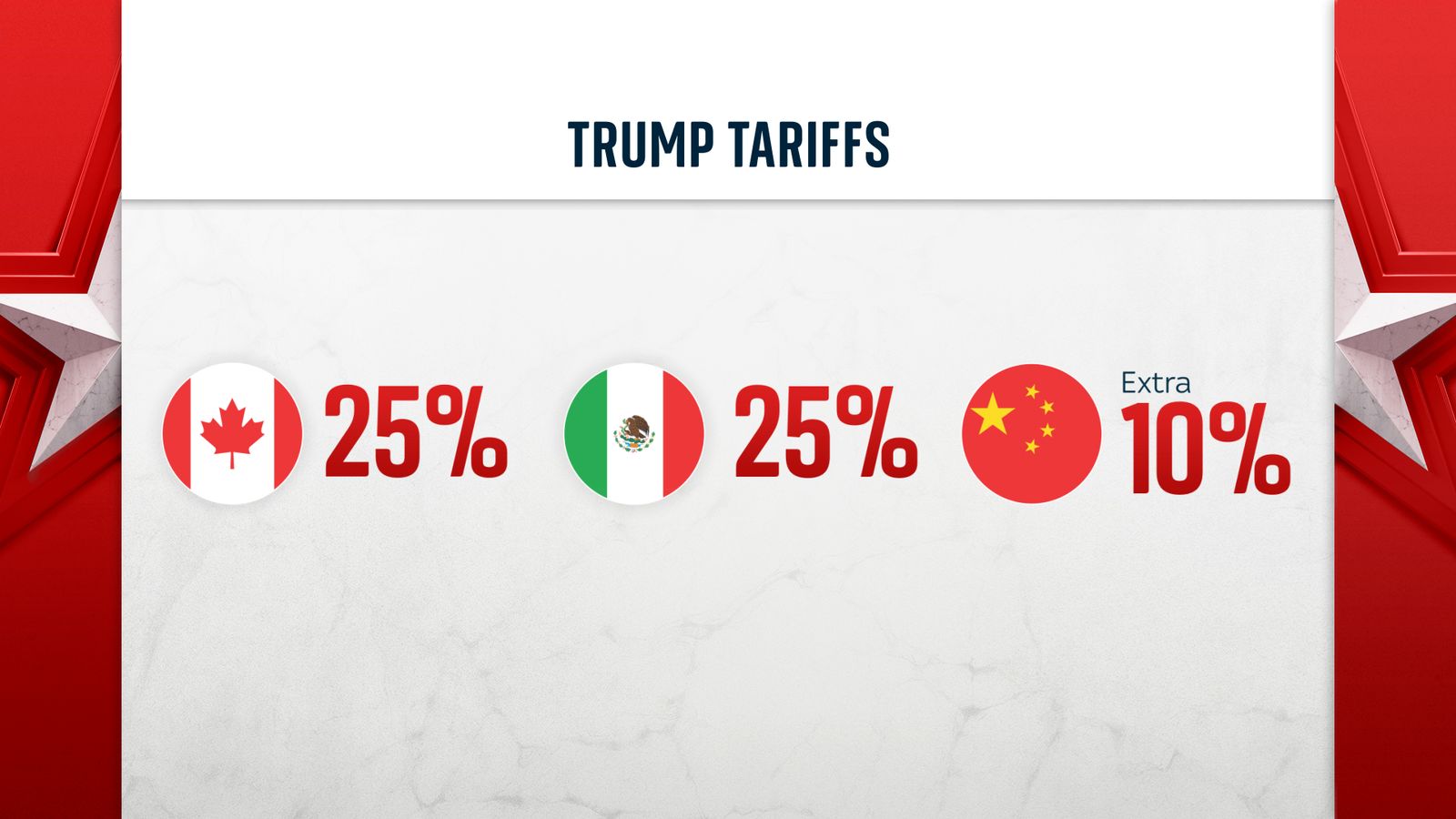

Dutch Viewpoint Against Eu Countermeasures To Trumps Tariffs

May 18, 2025

Dutch Viewpoint Against Eu Countermeasures To Trumps Tariffs

May 18, 2025 -

The Netherlands And The Trump Tariffs A Nations Resistance To Retaliation

May 18, 2025

The Netherlands And The Trump Tariffs A Nations Resistance To Retaliation

May 18, 2025 -

Analysis Dutch Opposition To Eu Response To Trump Import Duties

May 18, 2025

Analysis Dutch Opposition To Eu Response To Trump Import Duties

May 18, 2025 -

Public Opinion In The Netherlands No Retaliation On Trump Tariffs

May 18, 2025

Public Opinion In The Netherlands No Retaliation On Trump Tariffs

May 18, 2025 -

Poll Most Dutch Against Eu Retaliation On Trumps Import Tariffs

May 18, 2025

Poll Most Dutch Against Eu Retaliation On Trumps Import Tariffs

May 18, 2025