Practical Compliance With The Latest CNIL AI Model Guidelines

Understanding the CNIL's Approach to AI Regulation

The CNIL's approach to AI regulation is rooted in its broader mandate to protect fundamental rights and freedoms in the digital age. Their philosophy emphasizes a human-centric approach, prioritizing individual rights and ensuring that AI systems are developed and used ethically and responsibly. This approach is reflected in their focus on several key areas:

- Focus on a risk-based approach: The CNIL doesn't impose blanket restrictions on AI. Instead, their guidelines emphasize a risk-based approach, tailoring regulatory requirements to the specific risks posed by different AI systems. High-risk AI systems, such as those used in healthcare or criminal justice, face stricter scrutiny than low-risk applications.

- Emphasis on explainability and accountability: The CNIL strongly advocates for explainable AI (XAI), requiring developers to provide clear explanations of how their AI systems work and the factors influencing their decisions. This focus on transparency ensures accountability and allows individuals to understand and challenge AI-driven outcomes.

- Importance of user rights and data protection (GDPR compliance): The CNIL's AI guidelines are deeply intertwined with the General Data Protection Regulation (GDPR). They emphasize the importance of respecting user rights, including the right to access, rectification, and erasure of data used by AI systems. Data protection remains paramount throughout the lifecycle of AI systems.

- Guidance on algorithmic bias mitigation: The CNIL recognizes the potential for algorithmic bias to perpetuate and amplify existing societal inequalities. Their guidelines provide crucial guidance on identifying, mitigating, and monitoring for bias in AI systems, ensuring fairness and non-discrimination.
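To make the bias-monitoring point concrete, here is a minimal sketch of one common check: comparing the rate of favorable outcomes across groups (often called demographic parity). The metric choice, the field names `group` and `approved`, and the sample data are all assumptions made for illustration. The CNIL does not prescribe this particular metric, and a real audit would usually combine several measures.

```python
from collections import defaultdict


def selection_rates(records, group_key, outcome_key):
    """Return the share of favorable outcomes for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for record in records:
        group = record[group_key]
        totals[group] += 1
        if record[outcome_key]:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(rates):
    """Largest difference in selection rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical model decisions, invented for this example.
decisions = [
    {"group": "A", "approved": True},
    {"group": "A", "approved": True},
    {"group": "A", "approved": False},
    {"group": "A", "approved": True},
    {"group": "B", "approved": True},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
]

rates = selection_rates(decisions, "group", "approved")
gap = demographic_parity_gap(rates)
print(rates, gap)
```

A large gap does not prove discrimination on its own, but it is a signal worth documenting and investigating as part of ongoing monitoring.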

Key Requirements of the CNIL AI Model Guidelines

The CNIL's AI model guidelines outline several key requirements that businesses must meet to ensure compliance. These requirements cover various stages of the AI lifecycle, from data collection to deployment and ongoing monitoring.

- Data governance and quality: High-quality data is fundamental to responsible AI. The CNIL recommends using data anonymization, pseudonymization, and data minimization techniques to protect individual privacy. Their recommendations often emphasize the importance of diverse and representative datasets to mitigate bias. Specific guidance on dataset composition is available in their publications.

- Model design and development: The guidelines emphasize the importance of using Explainable AI (XAI) techniques to make AI decision-making transparent and understandable. This includes employing bias detection and mitigation strategies throughout the model development process, as well as rigorous model validation procedures to ensure accuracy and reliability. Refer to the CNIL's website for links to relevant documentation on these processes.

- Transparency and information to users: Businesses must provide clear and accessible explanations of how their AI systems work and their potential impact on individuals. This is crucial for fulfilling transparency obligations under both the CNIL guidelines and the GDPR. Users have a right to understand how AI systems process their data and the potential consequences.

- Human oversight and control: The CNIL stresses the importance of human oversight, particularly for high-risk AI systems. This includes establishing procedures for human review of AI decisions, especially when those decisions have significant consequences for individuals. Examples of appropriate oversight mechanisms might include regular audits or human-in-the-loop systems.

- Security and incident management: Robust security measures are essential to protect AI systems and the data they process from unauthorized access, use, disclosure, disruption, modification, or destruction. The CNIL guidelines recommend implementing comprehensive security measures and incident response plans to address potential security breaches.
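As an illustration of the pseudonymization and data minimization techniques mentioned under data governance, the sketch below keeps only the fields a model needs and replaces the direct identifier with a keyed hash. The field names, the `ALLOWED_FIELDS` set, and the choice of HMAC-SHA256 are assumptions for this example, not something the CNIL mandates. Note that pseudonymized data is still personal data under the GDPR, because anyone holding the key can re-link it to a person.

```python
import hashlib
import hmac

# Hypothetical: the only attributes the model is assumed to need.
ALLOWED_FIELDS = {"age_band", "region", "outcome"}


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 digest."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, secret_key: bytes) -> dict:
    """Drop fields that are not needed and pseudonymize the email address."""
    reduced = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    reduced["subject_ref"] = pseudonymize(record["email"], secret_key)
    return reduced


# Demo key only; in practice the key would be stored separately from the data.
demo_key = b"demo-key-not-for-production"
raw = {
    "email": "jane@example.org",
    "full_name": "Jane Example",
    "age_band": "30-39",
    "region": "Ile-de-France",
    "outcome": "approved",
}
print(minimize(raw, demo_key))
```

Using a keyed hash rather than a plain hash makes it harder to reverse the pseudonym by hashing guessed identifiers, provided the key is stored and access-controlled separately.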

Practical Steps for Compliance with CNIL AI Model Guidelines

Successfully complying with the CNIL AI Model Guidelines requires a proactive and multi-faceted approach. Here are some actionable steps businesses can take:

- Conduct a thorough AI risk assessment: Identify potential risks associated with your AI systems, considering factors such as data sensitivity, the potential for bias, and the impact of AI decisions on individuals.

- Implement a data governance framework aligned with CNIL guidelines: Establish clear processes for data collection, storage, processing, and disposal, ensuring compliance with data protection principles.

- Develop and document a model development lifecycle that incorporates bias mitigation and explainability: Document the entire process, from data acquisition to model deployment and monitoring, including methods for bias detection and mitigation and strategies for ensuring explainability.

- Establish procedures for human oversight and control of AI systems: Define clear roles and responsibilities for human review of AI decisions, especially in high-risk contexts.

- Implement robust security measures and incident response plans: Protect your AI systems and data with appropriate security measures and develop a plan to respond effectively to security incidents.

- Train personnel on CNIL AI guidelines and data protection regulations: Ensure your employees understand their responsibilities under the guidelines and are equipped to handle data protection issues.

- Document all compliance efforts: Maintain comprehensive documentation of all your compliance activities to demonstrate your commitment to responsible AI development and use.
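The human oversight step above can be sketched as a simple routing rule: decisions that are high impact, or that the model is unsure about, go to a human reviewer instead of being applied automatically. The `Decision` fields and the `CONFIDENCE_THRESHOLD` value are hypothetical and would need to be set according to your own risk assessment.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    subject_ref: str    # pseudonymous reference to the person concerned
    outcome: str
    confidence: float   # model confidence between 0.0 and 1.0
    high_impact: bool   # True if the decision significantly affects the person


# Hypothetical threshold; choose it based on your own risk assessment.
CONFIDENCE_THRESHOLD = 0.9


def needs_human_review(decision: Decision) -> bool:
    """Send high-impact or low-confidence decisions to a human."""
    return decision.high_impact or decision.confidence < CONFIDENCE_THRESHOLD


def route(decisions):
    """Split decisions into automated ones and a human review queue."""
    automated, review_queue = [], []
    for decision in decisions:
        if needs_human_review(decision):
            review_queue.append(decision)
        else:
            automated.append(decision)
    return automated, review_queue


batch = [
    Decision("ref-1", "approved", 0.97, high_impact=False),
    Decision("ref-2", "rejected", 0.95, high_impact=True),
    Decision("ref-3", "approved", 0.60, high_impact=False),
]
automated, review_queue = route(batch)
print(len(automated), "automated;", len(review_queue), "for human review")
```

Logging which rule triggered each review also supports the documentation step, since it leaves a record of when and why a human was involved.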

Utilizing Data Protection Impact Assessments (DPIAs)

For high-risk AI systems, conducting a Data Protection Impact Assessment (DPIA) is crucial for demonstrating compliance with CNIL guidelines.

- When is a DPIA required? Under Article 35 of the GDPR, a DPIA is required when processing is likely to result in a high risk to the rights and freedoms of individuals. For AI systems, this typically covers processing of sensitive personal data or automated decision-making with significant effects on individuals.

- Steps involved in conducting a DPIA: A DPIA involves describing the processing and the data involved, assessing the risks that processing poses to individuals, and implementing appropriate mitigation measures.

- How to address identified risks in a DPIA: Once risks are identified, the DPIA should outline specific measures to mitigate each one, such as implementing data anonymization techniques or strengthening security measures.

- Relating DPIA findings back to specific CNIL recommendations: The DPIA should demonstrate how the mitigation measures implemented address the specific recommendations outlined in the CNIL's guidelines.
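One way to keep DPIA findings traceable is a small risk register that scores each risk and flags those still lacking a mitigation. The sketch below is illustrative only: the 1 to 4 scales, the multiplication rule, and the threshold of 8 are assumptions, not an official CNIL scoring method.

```python
from dataclasses import dataclass, field


@dataclass
class Risk:
    description: str
    likelihood: int  # 1 (lowest) to 4 (highest), hypothetical scale
    severity: int    # 1 (lowest) to 4 (highest), hypothetical scale
    mitigations: list = field(default_factory=list)

    @property
    def score(self) -> int:
        """Simple likelihood x severity score (an assumed convention)."""
        return self.likelihood * self.severity


def unmitigated_high_risks(register, threshold=8):
    """Return risks at or above the threshold that have no mitigation yet."""
    return [r for r in register if r.score >= threshold and not r.mitigations]


register = [
    Risk("Re-identification of training data", 2, 4,
         mitigations=["Pseudonymize identifiers before training"]),
    Risk("Biased outcomes for a protected group", 3, 4),
    Risk("Stale documentation", 2, 1),
]

for risk in unmitigated_high_risks(register):
    print(f"Needs mitigation: {risk.description} (score {risk.score})")
```

Each mitigation entry can then cite the CNIL recommendation it addresses, which makes the link between findings and guidance explicit.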

Staying Updated with Evolving CNIL Guidelines

The field of AI is constantly evolving, and so are the regulatory frameworks governing its use. Staying updated with the latest CNIL guidelines is essential for maintaining compliance.

- Regularly checking the CNIL website for updates and publications: The CNIL's website is the primary source for information on their guidelines and publications.

- Subscribing to CNIL newsletters and alerts: Subscribe to receive updates on new guidelines, publications, and announcements.

- Participating in CNIL consultations and workshops: Engage with the CNIL by participating in consultations and workshops to share your feedback and gain insights.

- Engaging with CNIL experts for guidance: Don't hesitate to contact CNIL experts for guidance on specific compliance questions.

Conclusion

Complying with the CNIL AI model guidelines matters for any organization that develops or deploys AI in France. By understanding the key requirements, implementing the practical steps above, and staying updated on evolving regulations, businesses can ensure responsible AI development and deployment. Proactive compliance not only mitigates legal risk but also builds trust with users and regulators. For specific questions about CNIL AI compliance, consult the official CNIL website and seek expert advice.

Featured Posts

-

Cruise Line Bans For Complaints What You Need To Know

Apr 30, 2025

Cruise Line Bans For Complaints What You Need To Know

Apr 30, 2025 -

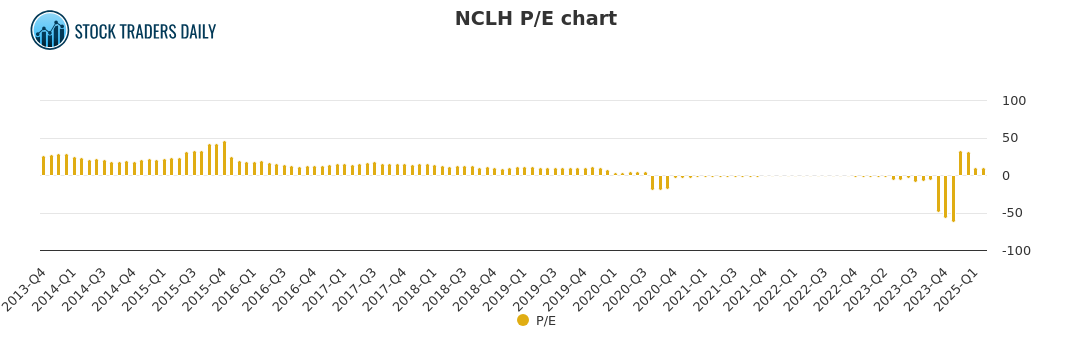

Norwegian Cruise Line Nclh A Hedge Fund Perspective On Stock Investment

Apr 30, 2025

Norwegian Cruise Line Nclh A Hedge Fund Perspective On Stock Investment

Apr 30, 2025 -

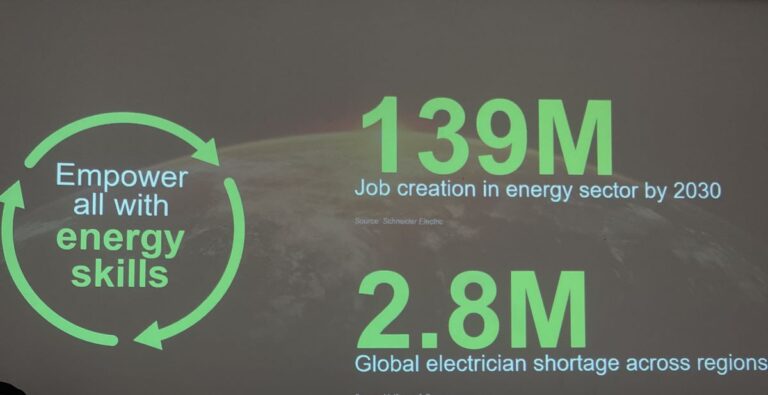

Schneider Electrics Commitment To Accelerating Womens Careers In Nigeria For Iwd

Apr 30, 2025

Schneider Electrics Commitment To Accelerating Womens Careers In Nigeria For Iwd

Apr 30, 2025 -

Eptk 2025

Apr 30, 2025

Eptk 2025

Apr 30, 2025 -

Hostage Crisis Argamanis Powerful Call To Action At Time Magazine

Apr 30, 2025

Hostage Crisis Argamanis Powerful Call To Action At Time Magazine

Apr 30, 2025

50 Godini Praznuva Lyubimetst Na Milioni

50 Godini Praznuva Lyubimetst Na Milioni