Investigating The Link Between Algorithmic Radicalization And Mass Shootings

The Role of Social Media Algorithms in Echo Chambers and Filter Bubbles

Social media algorithms, designed to maximize user engagement, often inadvertently create echo chambers and filter bubbles. These algorithms curate content based on users' past interactions, preferences, and online behavior, selectively showing them information that aligns with their existing beliefs. While seemingly benign, this personalization can have profound consequences. Individuals exposed primarily to like-minded perspectives within these echo chambers are less likely to encounter counterarguments or diverse viewpoints. This reinforcement of pre-existing beliefs can be particularly dangerous when dealing with extremist ideologies.

- Examples of algorithms promoting extreme content: Numerous reports have documented how YouTube's recommendation engine, for instance, has led users down rabbit holes of extremist content, including videos promoting violence and hate speech. Similar issues have been observed on other platforms like Facebook and Twitter.

- The impact of personalized recommendations on radicalization: Studies suggest that personalized recommendations significantly contribute to online radicalization by creating a sense of belonging and validation within extremist communities. The constant exposure to radicalized content can gradually desensitize individuals and normalize extremist views.

- Case studies demonstrating the effect of echo chambers on individuals involved in mass shootings: While establishing a direct causal link remains challenging, several investigations into the online activities of individuals involved in mass shootings have revealed their immersion in echo chambers and exposure to extremist content through algorithms. These cases underscore the potential for algorithmic personalization to contribute to the radicalization process.
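The feedback loop described in this section, where past interactions shape what is shown next, can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions, not how any real platform works: the catalog, the topic names, the `recommend` and `simulate` helpers, and the rule that the user always engages with the top-ranked item are all hypothetical and chosen for illustration only.

```python
from collections import Counter

# Hypothetical catalog: three items for each of three neutral topics.
TOPICS = ["sports", "cooking", "news"]
CATALOG = [{"id": f"{t}-{i}", "topic": t} for t in TOPICS for i in range(3)]


def recommend(profile, catalog, k=3):
    """Return the k items whose topic the user has engaged with most.

    `profile` maps topic -> number of past engagements. sorted() is stable,
    so items with equal counts keep their catalog order.
    """
    return sorted(catalog, key=lambda item: profile[item["topic"]], reverse=True)[:k]


def simulate(catalog, first_click_topic, rounds=5, k=3):
    """Run a simple personalization loop and record which topics are shown."""
    # Assumption: a single initial click is all the recommender knows.
    profile = Counter({first_click_topic: 1})
    shown_topics = []
    for _ in range(rounds):
        feed = recommend(profile, catalog, k)
        shown_topics.append({item["topic"] for item in feed})
        # Assumption: the user always engages with the top-ranked item.
        profile[feed[0]["topic"]] += 1
    return profile, shown_topics


profile, shown_topics = simulate(CATALOG, "cooking")
for round_number, topics in enumerate(shown_topics, start=1):
    print(round_number, sorted(topics))
```

In this sketch, a single early click is enough for every later feed to contain only that one topic, because nothing in the ranking rule rewards variety. Real recommender systems are far more complex, but the example shows why pure engagement-history ranking tends to narrow exposure unless diversity is added deliberately.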

Algorithmic Amplification of Hate Speech and Conspiracy Theories

Algorithms not only curate content but also amplify it. The design of many social media platforms prioritizes content that generates high engagement, often regardless of its veracity or harmful nature. This leads to the unintentional (or sometimes intentional) amplification of hate speech and conspiracy theories, which can rapidly spread throughout online networks. The sheer speed and reach of algorithmic amplification dramatically increase the potential for these narratives to incite violence.

- Examples of algorithms promoting harmful content: The rapid spread of misinformation and disinformation campaigns, often fueled by bots and automated accounts, demonstrates the power of algorithms to disseminate harmful content at an unprecedented scale.

- The role of bots and automated accounts in spreading extremist narratives: Automated accounts can create the illusion of widespread support for extremist ideologies, further normalizing and reinforcing these views. This coordinated amplification dramatically increases the reach and impact of hate speech and conspiracy theories.

- The challenges of content moderation and algorithm design in addressing this issue: The scale and speed of online information sharing pose significant challenges to content moderation efforts. Developing algorithms that effectively identify and suppress harmful content without infringing on freedom of speech remains a significant technological and ethical hurdle.
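To make the amplification point concrete, the sketch below ranks a feed purely by predicted engagement. It is an illustration under assumptions rather than a description of any platform's ranking system: the `Post` class, its fields, the example titles, and the scores are all invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    predicted_engagement: float  # hypothetical model score in [0, 1]
    fact_checked_false: bool     # hypothetical label; the ranker never reads it


def rank_by_engagement(posts):
    """Order posts by predicted engagement only, ignoring accuracy."""
    return sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)


posts = [
    Post("Measured policy analysis", 0.20, False),
    Post("Outrage-bait conspiracy claim", 0.90, True),
    Post("Local event roundup", 0.35, False),
]

feed = rank_by_engagement(posts)
for position, post in enumerate(feed, start=1):
    print(position, post.title, post.fact_checked_false)
```

Because the sort key contains nothing about accuracy, the post flagged as false takes the top slot simply because it has the highest engagement score. That is the sense in which engagement-first design can amplify content regardless of its veracity.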

The Impact of Online Communities and Forums on Radicalization

Online communities and forums can serve as breeding grounds for extremist ideologies, providing spaces for like-minded individuals to connect, share information, and reinforce their beliefs. These spaces can facilitate a process of grooming and radicalization, in which individuals are gradually exposed to increasingly extreme content and, in some cases, encouraged to take part in violent acts.

- Examples of online forums linked to extremist activity: Several online forums and platforms have been identified as hubs for extremist activity, serving as recruiting grounds and centers for planning and coordinating violent acts.

- The role of anonymity and online pseudonyms in fostering radicalization: The anonymity afforded by the internet can embolden individuals to express extreme views they might otherwise hesitate to share publicly. Online pseudonyms further shield individuals from accountability and facilitate a sense of detachment from the real-world consequences of their actions.

- Strategies used by extremist groups to recruit and radicalize individuals online: Extremist groups employ sophisticated strategies to target vulnerable individuals online, using personalized messaging, emotional appeals, and gradual exposure to radical ideologies to recruit and radicalize them.

Mitigating the Risk: Technological and Societal Solutions

Addressing the complex interplay between algorithmic radicalization and mass shootings requires a multi-pronged approach involving technological advancements, governmental regulation, and societal changes.

- Improved content moderation techniques: More sophisticated algorithms are needed to effectively identify and remove hate speech, conspiracy theories, and other forms of harmful content. This requires ongoing refinement and adaptation to stay ahead of evolving tactics used by extremist groups.

- Algorithm redesign to prioritize factual information and reduce the spread of harmful content: Algorithms should be designed to prioritize trustworthy sources of information and reduce the amplification of misleading or harmful content. Transparency in algorithmic design is also crucial to allow for independent scrutiny and accountability.

- Increased media literacy education: Equipping individuals with the critical thinking skills necessary to evaluate online information is essential. Media literacy education can empower users to identify misinformation, biased narratives, and manipulative techniques used by extremist groups.

- Strengthening community resilience to online extremism: Building strong and resilient communities that actively challenge extremist narratives and promote inclusivity is essential to counter the effects of online radicalization. This requires collaboration between government agencies, civil society organizations, and community leaders.
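One way to picture the algorithm redesign proposed above is a ranking score that blends predicted engagement with a source-trust signal. The sketch below is only one possible approach under stated assumptions: the `trust_weighted_score` and `rerank` functions, the `trust_weight` parameter, the post ids, and every number are hypothetical. How a trust score would be produced in practice is left open, and that is itself a hard and contested problem.

```python
def trust_weighted_score(engagement, source_trust, trust_weight=0.7):
    """Blend engagement and source trust (both assumed to lie in [0, 1]).

    trust_weight=0 reproduces engagement-only ranking;
    trust_weight=1 ranks by source trust alone.
    """
    return (1 - trust_weight) * engagement + trust_weight * source_trust


def rerank(posts, trust_weight=0.7):
    """Sort posts from highest to lowest blended score."""
    return sorted(
        posts,
        key=lambda p: trust_weighted_score(
            p["engagement"], p["source_trust"], trust_weight
        ),
        reverse=True,
    )


# Hypothetical posts with made-up scores.
posts = [
    {"id": "viral-rumor", "engagement": 0.90, "source_trust": 0.10},
    {"id": "verified-report", "engagement": 0.20, "source_trust": 0.90},
    {"id": "community-update", "engagement": 0.35, "source_trust": 0.60},
]

engagement_only = [p["id"] for p in rerank(posts, trust_weight=0.0)]
trust_weighted = [p["id"] for p in rerank(posts, trust_weight=0.7)]
print(engagement_only)
print(trust_weighted)
```

With these made-up numbers, the low-trust viral item falls from first to last once trust carries most of the weight. Exposing a parameter like `trust_weight` is also a small example of the transparency point: a documented, inspectable weight is something outside reviewers could scrutinize.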

Conclusion

The relationship between algorithmic radicalization and mass shootings is complex and multifaceted. While algorithms are not the sole cause of mass violence, the evidence suggests they play a significant role in facilitating and accelerating the process of radicalization. The amplification of hate speech, the creation of echo chambers, and the ease of online recruitment all contribute to a dangerous environment where extremist ideologies can flourish. Addressing this requires a concerted effort from technology companies, policymakers, educators, and individuals. We must advocate for improved algorithm transparency, enhanced content moderation, increased media literacy, and stronger community resilience to combat the risks posed by algorithmic radicalization and mass shootings. Only through a comprehensive strategy can we hope to mitigate this growing threat and foster safer online spaces.

Featured Posts

-

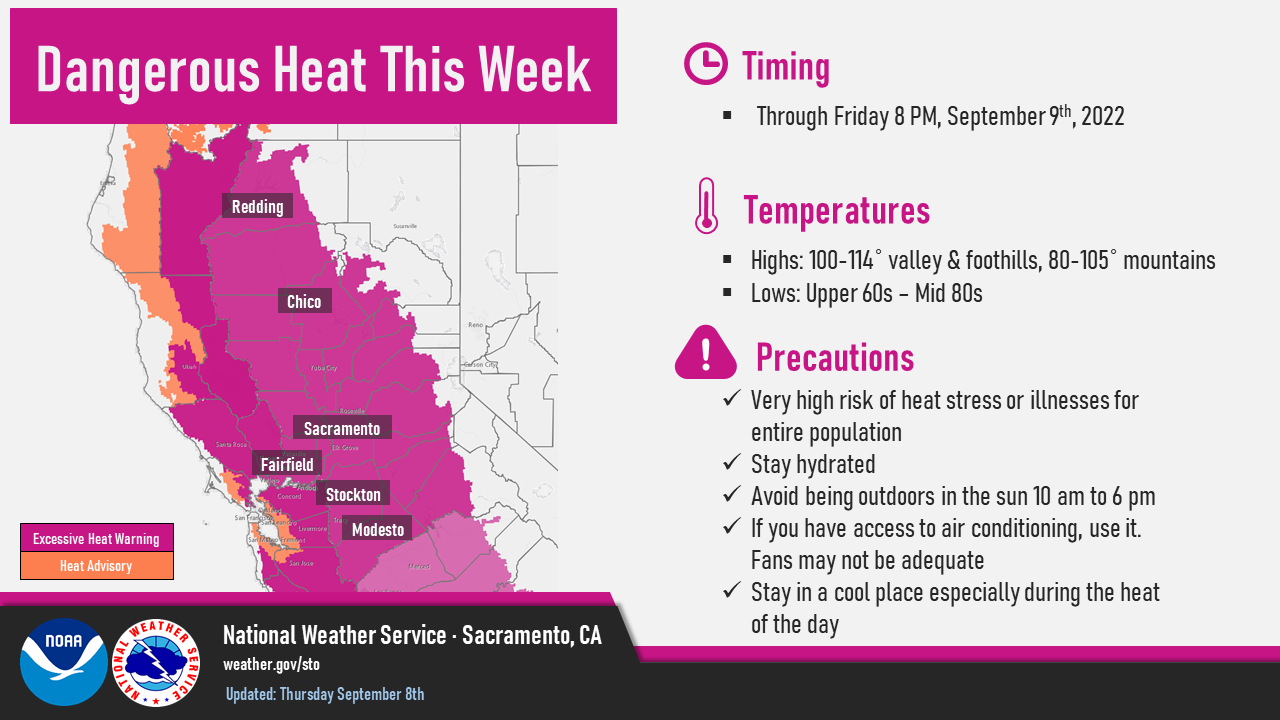

Why Excessive Heat Warnings Are Often Missing From Forecasts

May 30, 2025

Why Excessive Heat Warnings Are Often Missing From Forecasts

May 30, 2025 -

Tennis Governance Under Fire Djokovics Union Initiates Legal Proceedings

May 30, 2025

Tennis Governance Under Fire Djokovics Union Initiates Legal Proceedings

May 30, 2025 -

San Diego Area Faces Flooding After Powerful Late Winter Storm

May 30, 2025

San Diego Area Faces Flooding After Powerful Late Winter Storm

May 30, 2025 -

Gorillaz Celebrate 25 Years A Retrospective Exhibition And Live Performances

May 30, 2025

Gorillaz Celebrate 25 Years A Retrospective Exhibition And Live Performances

May 30, 2025 -

Djokovics Player Union Launches Legal Action Against Tennis Governing Bodies

May 30, 2025

Djokovics Player Union Launches Legal Action Against Tennis Governing Bodies

May 30, 2025