AI Therapy: Surveillance In A Police State?

The Allure of AI Therapy

AI therapy holds considerable promise for addressing the global mental health crisis, chiefly by widening access to care and by tailoring treatment to the individual.

Accessibility and Affordability

AI therapy can lower several of the barriers that keep many people from accessing mental healthcare.

- Reduced cost: AI-powered platforms can significantly reduce the cost of therapy compared to traditional in-person sessions, making it more affordable for individuals with limited financial resources.

- 24/7 availability: Unlike human therapists who have limited hours, AI therapy is available around the clock, providing immediate support whenever needed.

- Anonymity: For individuals hesitant to reveal their struggles to a human therapist, the anonymity offered by some AI platforms can be invaluable.

- Overcoming geographical barriers: AI therapy can reach people in remote or underserved areas where qualified professionals are scarce.

Personalized Treatment Plans

Because AI can analyze large amounts of data, it could support highly personalized treatment plans.

- Tailored interventions: AI can analyze patient data to identify patterns and tailor interventions specific to an individual's needs and preferences.

- Faster identification of appropriate treatments: By analyzing symptoms and responses, AI can help clinicians identify more quickly which treatment strategies are likely to work.

- Improved treatment outcomes: Personalized and data-driven approaches can potentially lead to better outcomes and faster recovery times for patients.

The Dark Side: Surveillance and Privacy Concerns

Despite the potential benefits, the use of AI in therapy raises serious ethical and privacy concerns. The potential for misuse is substantial.

Data Collection and Security

AI therapy platforms collect vast amounts of sensitive personal data, including intimate details about a patient's thoughts, feelings, and behaviors.

- Storage of sensitive information: The storage and security of this data are paramount. Data breaches could expose highly personal and potentially damaging information.

- Potential for hacking: AI therapy platforms are potential targets for hackers seeking to exploit vulnerabilities and access sensitive data.

- Vulnerability to misuse by third parties: There is a risk that data could be misused by third parties, including insurance companies, employers, or even government agencies.

- Lack of transparency in data handling: When platforms do not disclose how data is stored, shared, or retained, users cannot judge the risks, which erodes trust and heightens concerns about misuse.

Algorithmic Bias and Discrimination

AI models are trained on data, and if that data reflects existing societal biases, the resulting model can perpetuate those biases.

- Bias based on race, gender, or socioeconomic status: AI systems may perform unevenly across demographic groups, for example when some groups are underrepresented in the training data.

- Potential for misdiagnosis or inappropriate treatment recommendations: Biased algorithms could lead to inaccurate diagnoses and ineffective or even harmful treatment recommendations.

Erosion of Confidentiality and Trust

The potential for government or corporate surveillance through AI therapy could fundamentally undermine the relationship between therapist and patient.

- Reduced willingness to disclose sensitive information: Individuals may be less willing to share sensitive information if they fear it could be accessed by unauthorized parties.

- Impact on therapeutic alliance: The erosion of trust and confidentiality can severely damage the therapeutic alliance, making it more difficult for patients to benefit from therapy.

- Chilling effect on open communication: Fear of surveillance can create a chilling effect, preventing open and honest communication, which is crucial for effective treatment.

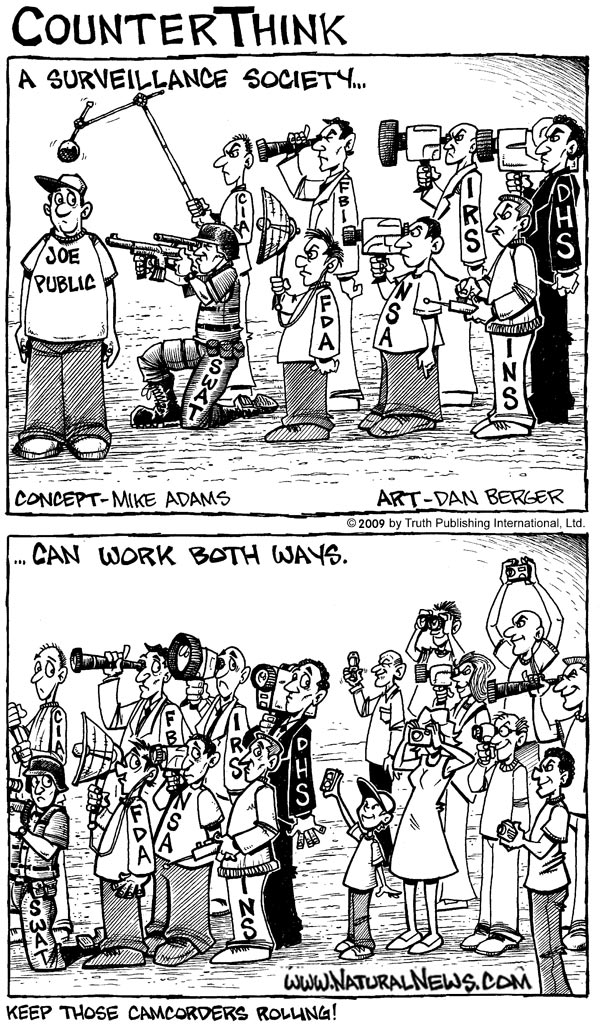

AI Therapy in a Police State Scenario

The potential for abuse of AI therapy data in authoritarian regimes is particularly alarming.

Potential for Abuse by Authoritarian Regimes

In a police state, AI therapy data could be used as a tool of oppression and control.

- Predictive policing based on mental health data: Governments might use therapy data to identify individuals deemed "at risk" and subject them to increased surveillance.

- Preemptive arrests: Individuals expressing dissent or exhibiting signs of mental distress could be targeted for preemptive arrest based on AI-generated risk assessments.

- Profiling based on therapy content: The content of therapy sessions could be analyzed to identify and target political opponents or individuals deemed “unstable.”

The Thin Line Between Treatment and Control

The use of AI in therapy blurs the line between therapeutic intervention and state control.

- Forced treatment: Governments might mandate AI-based therapy as a form of control or punishment.

- Manipulation of therapy content: Authoritarian regimes might manipulate AI algorithms to subtly influence or control the thoughts and behaviors of individuals.

- Involuntary commitment based on AI-generated assessments: AI-generated assessments could be used to justify involuntary commitment to mental health facilities.

Conclusion

AI therapy could make mental healthcare more accessible and more effective. However, its ethical and privacy implications, especially the potential for misuse in a surveillance state, cannot be ignored. Responsible development and deployment require robust regulation, transparent data handling, and a firm commitment to individual rights and freedoms. Algorithmic bias must be addressed proactively, and sensitive patient data must be kept secure. Whether AI therapy becomes a tool for care or a tool of oppression depends on the choices made now, and advocating for ethical development and responsible implementation is how we ensure that this technology serves people rather than suppresses them. The future of both mental healthcare and individual liberty hinges on getting this right.