The Surveillance Risks Of AI Therapy In A Police State

H2: Data Collection and Privacy Violations in AI Therapy

Using an AI therapy application may seem benign, but it involves extensive collection of personal data. This creates a significant vulnerability, especially in environments lacking robust data protection laws.

H3: The Extensive Data Footprint of AI Therapy

AI therapy platforms gather a wealth of sensitive information, creating a comprehensive digital profile of each user. This data footprint includes:

- Voice recordings: Detailed recordings of therapy sessions, capturing personal thoughts, anxieties, and vulnerabilities.

- Text transcripts: Written records of interactions, providing further insight into the user's mental state and personal life.

- Biometric data: Data from wearable devices or built-in sensors tracking heart rate, sleep patterns, and other physiological indicators, potentially revealing emotional states.

- Location data: GPS data linked to therapy sessions, revealing the user's movements and potentially sensitive locations.

The lack of transparency regarding data usage and storage policies exacerbates these privacy risks. Users often lack a clear understanding of where their data is stored, how long it is retained, and with whom it may be shared.

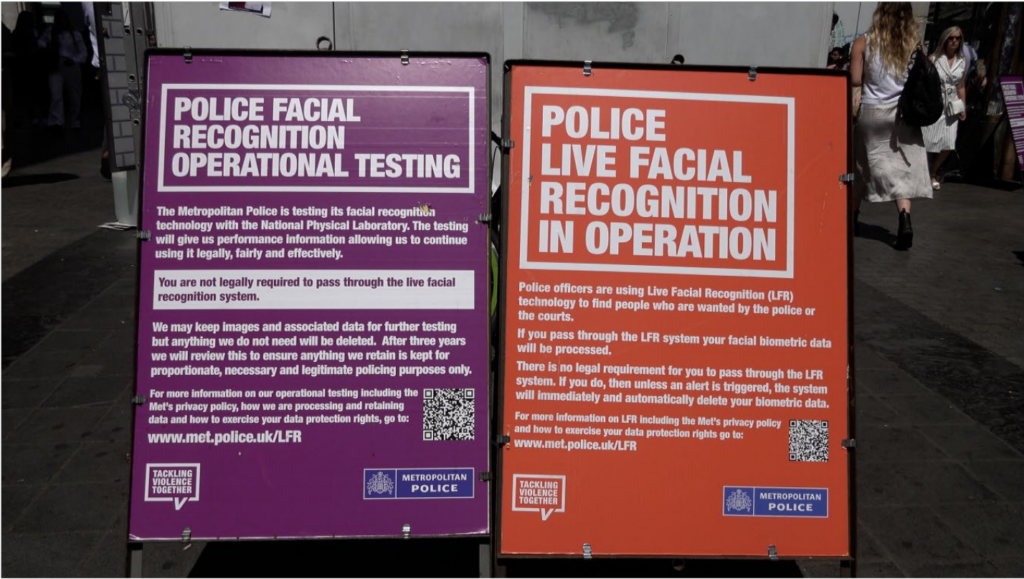

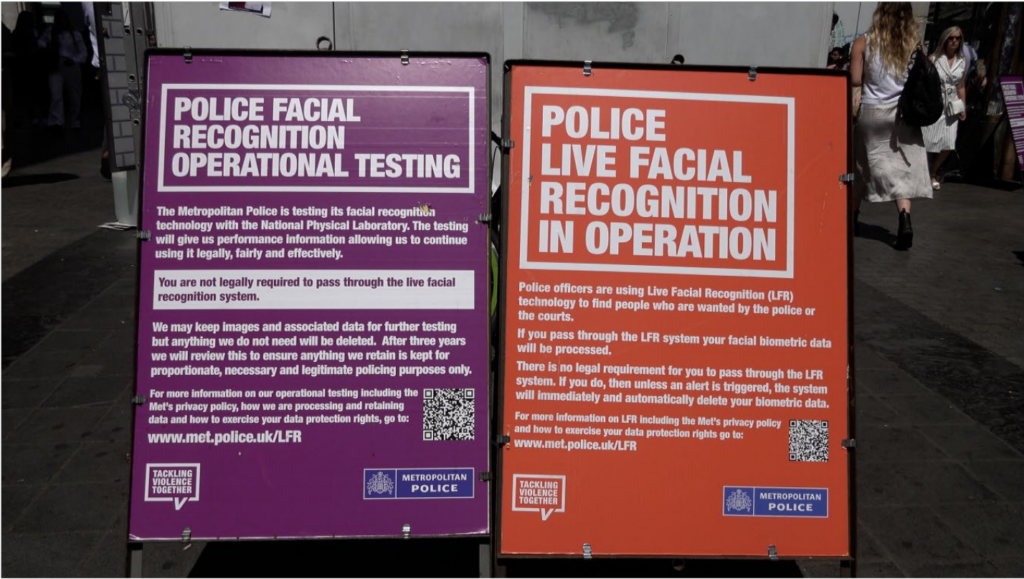

H3: The Potential for Government Surveillance and Data Breaches

In a police state, this vast trove of personal information becomes a potent tool for surveillance and repression. Government access to AI therapy data allows for:

- Identification of dissidents: Monitoring conversations for expressions of dissent, political opposition, or criticism of the regime.

- Behavioral prediction and profiling: Using data to predict and preemptively target individuals deemed potential threats to the state.

- Repression of political activity: Tracking individuals involved in protests, organizing, or activism.

Furthermore, the potential for data breaches is significant. Weak security measures, combined with the sensitivity of the data collected, leave users vulnerable to hacking and unauthorized access, with potentially devastating consequences for their privacy and safety.

H2: Algorithmic Bias and Discrimination in AI Therapy

The algorithms powering AI therapy systems are not immune to bias. These biases, often stemming from skewed training data, can lead to discriminatory outcomes with serious implications.

H3: Biases Embedded in AI Algorithms

Algorithmic bias can manifest in various ways:

- Misdiagnosis: AI systems trained on biased data may misinterpret symptoms, leading to inaccurate diagnoses and inappropriate treatment recommendations.

- Discriminatory treatment recommendations: Bias can lead to unequal access to effective treatments, disproportionately affecting certain demographic groups.

The difficulty in detecting and mitigating these biases is a significant challenge, particularly in the absence of transparent and accountable AI development processes.

H3: The Use of AI Therapy to Identify and Target Dissidents

In a police state, AI therapy presents a particularly insidious threat: its potential use to identify and target individuals expressing dissent.

- Political profiling: The system could flag individuals expressing critical views as potential threats, leading to surveillance, harassment, or imprisonment.

- Chilling effect on freedom of expression: The mere knowledge that their therapy sessions are being monitored could deter individuals from seeking help or expressing their true feelings. This effect directly threatens mental health by preventing open and honest communication vital for treatment.

This represents a deeply concerning intersection of mental healthcare and political repression.

H2: Lack of Regulation and Accountability in AI Therapy

The absence of strong regulatory frameworks governing the use of AI in mental healthcare is a major concern, particularly in authoritarian contexts.

H3: The Regulatory Vacuum Surrounding AI Therapy

The current landscape lacks sufficient oversight and accountability mechanisms:

- Lack of data protection laws: Weak or non-existent data protection laws fail to adequately safeguard sensitive personal information generated by AI therapy platforms.

- Absence of international standards: No shared international ethical guidelines or standards govern the development and deployment of AI therapy, and establishing them will require international cooperation.

This regulatory vacuum leaves users vulnerable to exploitation and abuse.

H3: The Ethical Implications of Using AI Therapy in Police States

Using AI therapy in police states raises serious ethical concerns:

- Lack of informed consent: Individuals may not have the freedom or ability to provide genuine informed consent for data collection and analysis.

- Potential for coercion: Individuals may feel pressured to use AI therapy services, especially if access to traditional mental healthcare is restricted or controlled by the state.

These ethical violations undermine the fundamental principles of patient autonomy and the right to privacy.

H2: Conclusion

The surveillance risks of AI therapy in a police state are substantial, threatening fundamental rights, privacy, and mental well-being. Extensive data collection, the potential for algorithmic bias, and the lack of regulation form a dangerous combination that enables abuse and repression. Mitigating these risks requires stronger regulations, robust data protection measures, ethical guidelines, and transparent development processes for AI therapy applications. We must advocate for greater accountability and international cooperation to prevent the misuse of this technology in oppressive environments. The future of AI therapy must prioritize individual rights and freedoms, ensuring it serves as a tool for healing rather than oppression. Let's continue the conversation and demand responsible development and implementation of AI therapy, safeguarding it against the dangers outlined here.
