The Reality Of AI Learning: Guiding Principles For Responsible AI Use

Understanding the Limitations of Current AI Learning

Current AI learning systems are powerful, but they have real limitations. Understanding those limitations is a precondition for developing AI responsibly.

Data Bias and its Impact

AI models learn patterns from their training data, so if that data is biased, the resulting system will tend to reproduce those biases unless they are actively corrected. This can lead to discriminatory outcomes and perpetuate societal inequalities.

Examples of biased datasets and their consequences:

- Facial recognition systems showing higher error rates for people of color.

- Algorithmic bias in loan applications leading to discriminatory lending practices.

- Recruitment AI favoring candidates with specific demographics over others.

Mitigation strategies:

- Data augmentation and rebalancing: Adding or synthesizing examples for under-represented groups so the training data is more representative.

- Careful data selection: Rigorously auditing datasets for biases before training.

- Algorithmic fairness techniques: Employing algorithms designed to mitigate bias in decision-making.
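As a rough illustration of what auditing a dataset for bias can look like, the sketch below compares positive-outcome rates across groups and reports the largest gap (often called the demographic parity gap). The records, field names, and helper functions are hypothetical and invented for this example; a real audit would use the actual dataset and several fairness metrics, not just this one.

```python
from collections import defaultdict


def selection_rates(records, group_key="group", outcome_key="approved"):
    """Return the fraction of positive outcomes for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in records:
        totals[row[group_key]] += 1
        if row[outcome_key]:
            positives[row[group_key]] += 1
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(rates):
    """Largest difference in selection rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical toy loan decisions, invented for illustration only.
records = [
    {"group": "A", "approved": True},
    {"group": "A", "approved": True},
    {"group": "A", "approved": True},
    {"group": "A", "approved": False},
    {"group": "B", "approved": True},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
]

rates = selection_rates(records)
gap = demographic_parity_gap(rates)
print(rates, gap)
```

A large gap does not prove discrimination on its own, but it is a signal that the data or the model deserves closer review before deployment.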

The "Black Box" Problem and Explainability

Many advanced AI models, particularly deep learning systems, are often described as "black boxes." Their internal workings are opaque, making it difficult to understand how they arrive at their decisions. This lack of transparency poses significant challenges in areas where explainability is crucial.

Examples where explainability is crucial:

- Medical diagnosis: Understanding why an AI system made a particular diagnosis is vital for patient care.

- Criminal justice: Transparency in AI-driven risk assessment tools is essential for fairness and due process.

- Financial services: Explaining credit scoring decisions helps prevent discrimination.

Techniques aiming to improve explainability:

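- LIME (Local Interpretable Model-agnostic Explanations): Explains an individual prediction by fitting a simple, interpretable surrogate model to the complex model's behavior around that input.

- SHAP (SHapley Additive exPlanations): Attributes each prediction to its input features using Shapley values from cooperative game theory.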

- Feature importance analysis: Identifies the most influential features in the model's decision-making process.
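One common form of feature importance analysis is permutation importance: shuffle one feature at a time and measure how much the model's accuracy drops. The sketch below is a minimal, dependency-free version of that idea. The `toy_model` and the random data are assumptions made up for the example; this is not LIME or SHAP, which are separate libraries with their own APIs.

```python
import random


def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Mean accuracy drop when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild the rows with only column j replaced by shuffled values.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_model(row):
    """Hypothetical model: depends on feature 0 and ignores feature 1."""
    return 1 if row[0] > 0.5 else 0


# Hypothetical data: two random features, labels produced by toy_model.
data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [toy_model(row) for row in X]

importances = permutation_importance(toy_model, X, y)
print(importances)
```

Because the toy model ignores the second feature, shuffling it changes nothing and its importance is zero, while shuffling the first feature causes a large accuracy drop.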

Generalization and Robustness Challenges

AI systems often struggle to generalize their learning to unseen data or unexpected situations. This lack of robustness can lead to significant failures in real-world applications.

Examples of AI failures due to lack of robustness:

- Self-driving cars failing in unusual weather conditions or encountering unexpected obstacles.

- Medical image analysis systems misclassifying images due to variations in lighting or image quality.

- Spam filters incorrectly flagging legitimate emails.

Approaches for improving robustness:

- Adversarial training: Training the AI model on deliberately perturbed data to improve its resilience.

- Data augmentation: Expanding the training data to include a wider range of scenarios and variations.

- Ensemble methods: Combining multiple AI models to improve overall robustness and accuracy.
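To make the ensemble idea concrete, here is a minimal sketch of majority voting over several simple classifiers. The three spam rules are hypothetical stand-ins for trained models, chosen only to show how a single over-eager rule can be outvoted.

```python
from collections import Counter


def majority_vote(models, x):
    """Return the label predicted by the most models for input x."""
    votes = [model(x) for model in models]
    return Counter(votes).most_common(1)[0][0]


# Hypothetical rule-based classifiers standing in for trained models.
def has_link(message):
    return "spam" if "http://" in message else "ham"


def is_shouting(message):
    return "spam" if message.isupper() else "ham"


def has_keyword(message):
    return "spam" if "free money" in message.lower() else "ham"


models = [has_link, is_shouting, has_keyword]

legit = "Meeting notes are at http://intranet/notes"
junk = "FREE MONEY at http://example.test"

print(majority_vote(models, legit))
print(majority_vote(models, junk))
```

Here the link rule alone would flag the legitimate message, but the other two rules outvote it. Using an odd number of binary voters avoids ties; real ensembles typically combine trained models and may weight their votes.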

Ethical Considerations in AI Learning

The development and deployment of AI systems raise significant ethical concerns that must be addressed proactively.

Privacy and Data Security

AI learning relies heavily on data, often involving sensitive personal information. Protecting user privacy and ensuring data security are paramount.

- Data anonymization techniques: Removing or masking identifying information from datasets.

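- Differential privacy: Adding carefully calibrated random noise to query results or training computations so that the presence or absence of any single individual has only a limited effect on the output, while aggregate statistics remain useful.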

- Compliance with data protection regulations: Adhering to regulations like GDPR and CCPA.
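The differential privacy idea can be sketched with the Laplace mechanism applied to a counting query. A count changes by at most 1 when one person is added or removed (sensitivity 1), so Laplace noise with scale 1/epsilon is added to the true count. The data, the `private_count` helper, and the choice of epsilon are assumptions for illustration; production systems should rely on a vetted differential privacy library.

```python
import random


def private_count(values, predicate, epsilon, rng):
    """Noisy count of values matching predicate (Laplace mechanism).

    A counting query has sensitivity 1, so the noise scale is 1 / epsilon.
    The difference of two exponential samples with rate epsilon is a
    Laplace sample with scale 1 / epsilon.
    """
    true_count = sum(1 for v in values if predicate(v))
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return true_count + noise


# Hypothetical ages, invented for illustration only.
ages = [23, 35, 41, 52, 67, 29, 48]
rng = random.Random(0)

noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0, rng=rng)
print(noisy)
```

Smaller values of epsilon add more noise and give stronger privacy; larger values give more accurate answers with weaker guarantees.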

Accountability and Transparency

Clear lines of responsibility must be established for the development and deployment of AI systems. Transparency in AI algorithms and their decision-making processes is also crucial.

- Establishing clear roles and responsibilities: Defining who is accountable for the AI system's actions and outcomes.

- Implementing auditing mechanisms: Regularly assessing AI systems for bias, fairness, and compliance.

- Promoting transparency in AI algorithms: Making the underlying algorithms and data used in AI systems more accessible and understandable.

Bias Mitigation and Fairness

Addressing bias and promoting fairness in AI systems is a crucial ethical imperative. This requires ongoing effort and a commitment to creating equitable AI solutions.

- Techniques for mitigating bias:

- Fairness-aware algorithms: Algorithms explicitly designed to minimize bias in their outputs.

- Re-weighting data: Adjusting the importance of different data points to compensate for biases in the dataset.

- Importance of diverse teams in AI development: Diverse teams bring different perspectives and can help identify and mitigate bias.
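Re-weighting can be illustrated with a simple inverse-frequency scheme: each sample gets the weight n / (k * count of its group), where n is the number of samples and k the number of groups, so every group contributes the same total weight. The group labels below are hypothetical, and this is only one of several possible weighting schemes.

```python
from collections import Counter


def balanced_weights(groups):
    """Per-sample weights that give every group the same total weight."""
    counts = Counter(groups)
    n_samples = len(groups)
    n_groups = len(counts)
    return [n_samples / (n_groups * counts[g]) for g in groups]


# Hypothetical group labels: group A is over-represented 3 to 1.
groups = ["A"] * 6 + ["B"] * 2
weights = balanced_weights(groups)

total_a = sum(w for g, w in zip(groups, weights) if g == "A")
total_b = sum(w for g, w in zip(groups, weights) if g == "B")
print(total_a, total_b)
```

The resulting weights can be passed to any training procedure that accepts per-sample weights, so that the under-represented group is not drowned out during training.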

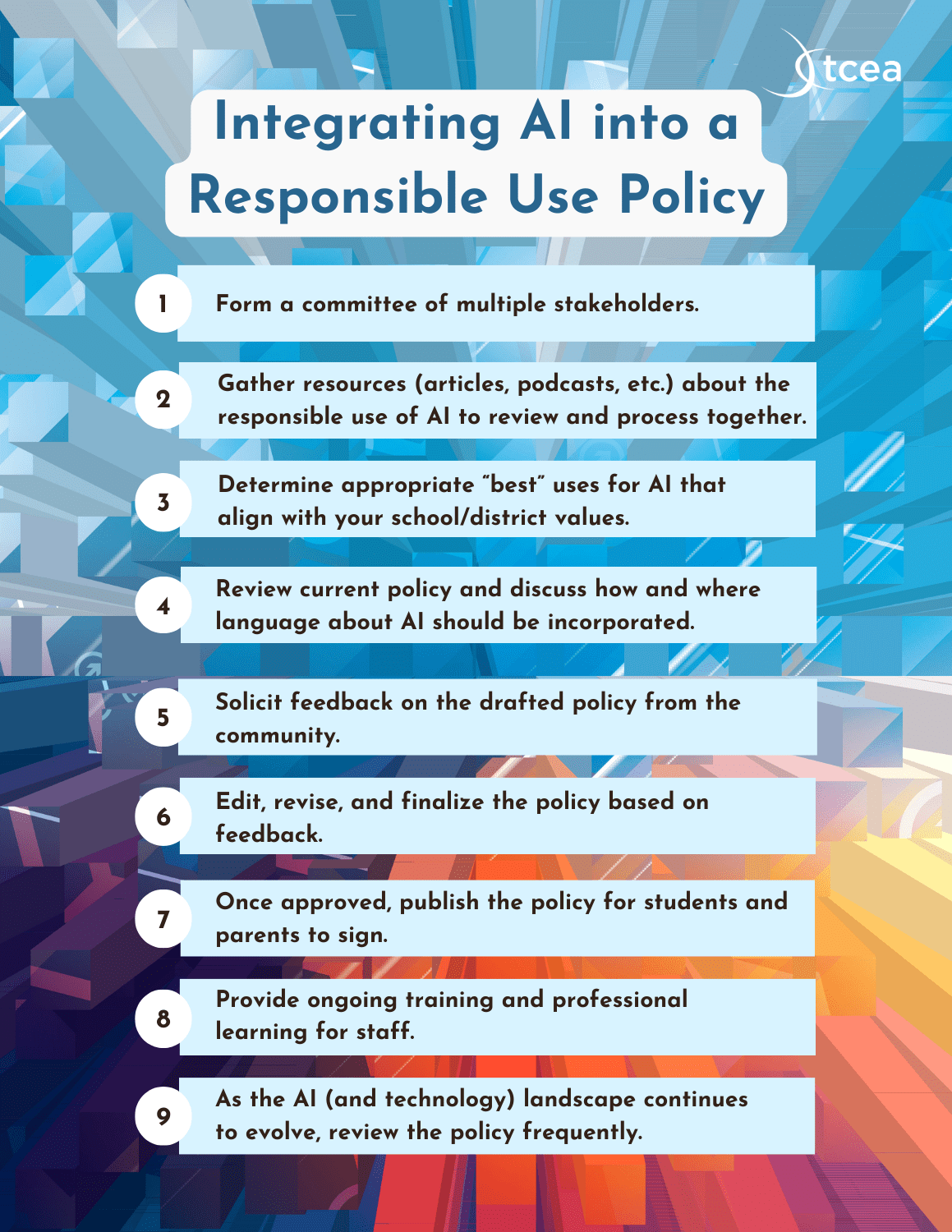

Guiding Principles for Responsible AI Learning

To ensure the responsible development and use of AI, several guiding principles should be adopted.

Human Oversight and Control

Human intervention should be maintained in critical decision-making processes involving AI, especially in high-stakes applications.

- Establishing clear protocols for human review: Defining when and how human experts should review AI decisions.

- Ensuring human-in-the-loop systems: Designing AI systems that allow for human intervention and control throughout the process.
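A human review protocol is often implemented as a routing rule: the system acts automatically only when it is confident and the case is not high-stakes, and otherwise hands the decision to a person. The sketch below assumes a hypothetical confidence threshold of 0.9 and a hypothetical `high_stakes` flag; appropriate values depend entirely on the application.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    label: str
    confidence: float
    needs_human_review: bool


def route(label, confidence, threshold=0.9, high_stakes=False):
    """Flag a prediction for human review if it is uncertain or high-stakes."""
    needs_review = high_stakes or confidence < threshold
    return Decision(label, confidence, needs_review)


# Hypothetical predictions, invented for illustration only.
automatic = route("approve", 0.95)
uncertain = route("approve", 0.60)
critical = route("deny", 0.99, high_stakes=True)

print(automatic, uncertain, critical)
```

Routing every high-stakes case to a person, regardless of confidence, reflects the principle that model confidence alone is not a sufficient safeguard.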

Continuous Monitoring and Evaluation

AI systems require ongoing monitoring and evaluation to ensure their performance, identify potential biases, and maintain ethical compliance.

- Implementing robust monitoring systems: Tracking AI system performance, identifying errors, and detecting biases.

- Conducting regular audits: Periodically reviewing AI systems for compliance with ethical guidelines and regulatory requirements.

- Adapting AI systems based on feedback: Continuously improving AI systems based on performance data and user feedback.
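A minimal sketch of performance monitoring is a sliding-window accuracy tracker that raises an alert when recent accuracy falls below a chosen floor. The class name, window size, and threshold here are assumptions for illustration; a real deployment would typically also track per-group metrics and input drift.

```python
from collections import deque


class AccuracyMonitor:
    """Tracks accuracy over the most recent predictions."""

    def __init__(self, window=100, min_accuracy=0.8):
        self.outcomes = deque(maxlen=window)
        self.min_accuracy = min_accuracy

    def record(self, prediction, actual):
        """Store whether the latest prediction was correct."""
        self.outcomes.append(prediction == actual)

    def accuracy(self):
        """Accuracy over the current window, or None if nothing is recorded."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def alert(self):
        """True once the window is full and accuracy is below the floor."""
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.min_accuracy


# Hypothetical stream of (prediction, actual) pairs.
monitor = AccuracyMonitor(window=4, min_accuracy=0.75)
for prediction, actual in [(1, 1), (0, 0), (1, 1), (0, 0)]:
    monitor.record(prediction, actual)
healthy = monitor.alert()

for prediction, actual in [(1, 0), (0, 1)]:
    monitor.record(prediction, actual)
degraded = monitor.alert()

print(healthy, degraded, monitor.accuracy())
```

An alert like this is a trigger for the audits and feedback-driven updates described above, not a replacement for them.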

Education and Awareness

Promoting education and public awareness about the capabilities and limitations of AI is crucial for responsible AI development and adoption.

- Promoting AI literacy: Educating the public about AI technologies, their potential benefits, and their limitations.

- Fostering public dialogue about ethical considerations of AI: Creating opportunities for open discussions about the ethical implications of AI.

Conclusion

AI learning combines immense potential with inherent limitations. Responsible AI development requires understanding those limitations, committing to ethical practice, and putting robust guiding principles into operation. Addressing data bias, ensuring transparency and accountability, and prioritizing human oversight are essential for creating AI systems that are both effective and beneficial to society. By adopting responsible AI learning practices and upholding these guiding principles, we can embrace the possibilities of AI while mitigating its risks, and shape a future in which AI serves humanity ethically and effectively.

Featured Posts

-

The Transgender High School Student Context Of Trumps Funding Decision

May 31, 2025

The Transgender High School Student Context Of Trumps Funding Decision

May 31, 2025 -

Harvards Foreign Student Ban Reprieve Extended By Court Ruling

May 31, 2025

Harvards Foreign Student Ban Reprieve Extended By Court Ruling

May 31, 2025 -

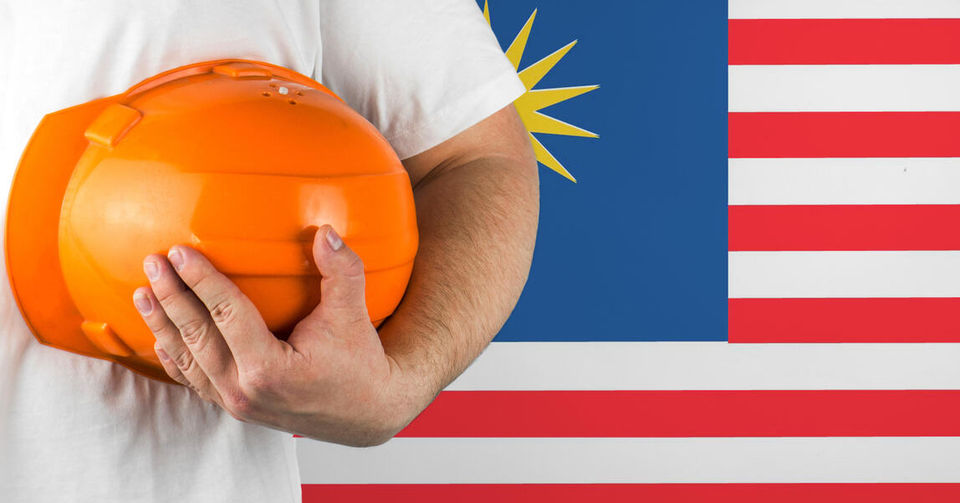

Saturday May 3rd Nyt Mini Crossword Solutions

May 31, 2025

Saturday May 3rd Nyt Mini Crossword Solutions

May 31, 2025 -

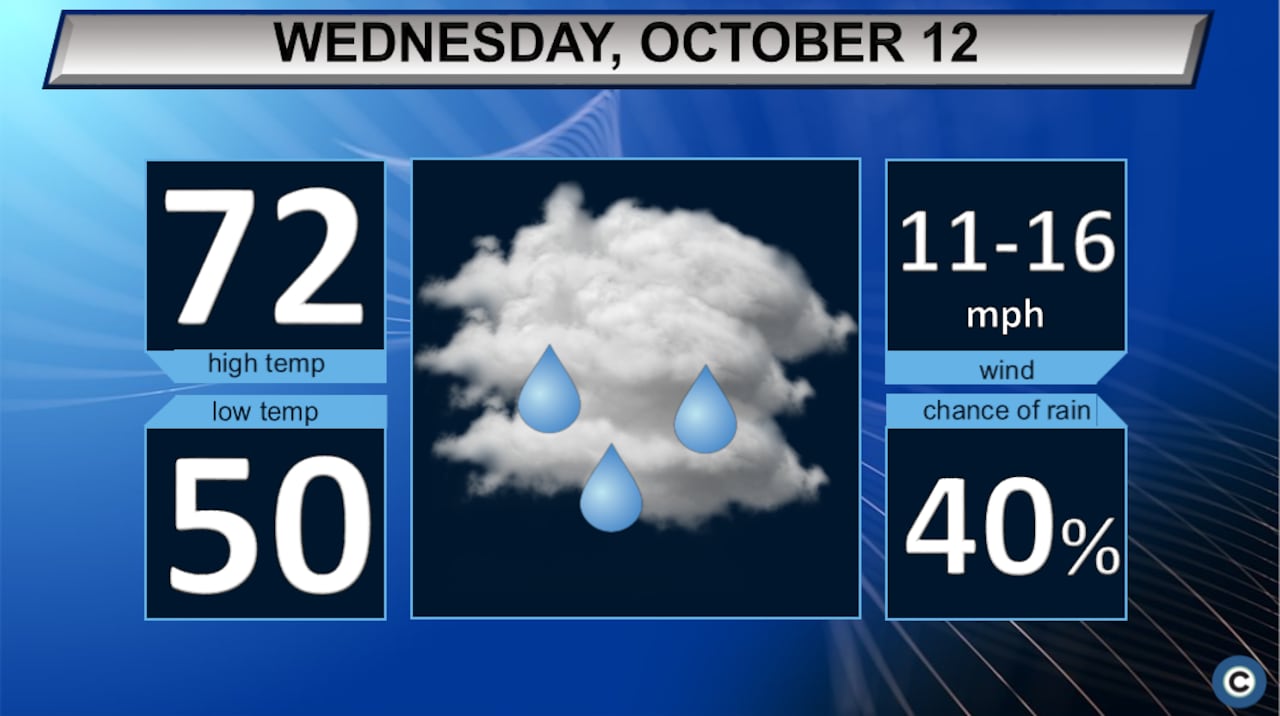

Northeast Ohio Weather Forecast Thursday Rain

May 31, 2025

Northeast Ohio Weather Forecast Thursday Rain

May 31, 2025 -

Munguia Vs Surace Ii A Closer Look At The Winning Adjustments

May 31, 2025

Munguia Vs Surace Ii A Closer Look At The Winning Adjustments

May 31, 2025