The Potential For Surveillance: Examining The Risks Of AI In Mental Healthcare

Data Privacy and Security Concerns in AI-Powered Mental Healthcare

The use of AI in mental healthcare involves the collection and analysis of highly sensitive patient data, making data privacy and security paramount. A breach of this data could have devastating consequences for individuals.

Data breaches and unauthorized access

AI systems, like any technology, are vulnerable to cyberattacks and data breaches. The sensitive nature of mental health information makes it a particularly lucrative target for malicious actors.

- Examples of data breaches in healthcare: Recent years have witnessed numerous high-profile data breaches affecting healthcare providers, exposing patient medical records, including mental health diagnoses and treatment plans.

- The potential for misuse of mental health data: Stolen mental health data can be used for identity theft, blackmail, or even to discriminate against individuals in employment or insurance.

- The lack of robust security measures in some AI systems: Not all AI systems used in mental healthcare incorporate the highest security standards, leaving patient data vulnerable.

The severity of a breach concerning mental health information is amplified by the potential for long-term damage to reputation, relationships, and employment prospects. The stigmatization associated with mental illness exacerbates the potential harm caused by such breaches.

Algorithmic bias and discrimination

AI algorithms are trained on data, and if that data reflects existing societal biases, the algorithms can perpetuate and even amplify those biases. This is particularly concerning in mental healthcare, where biases can lead to misdiagnosis, inappropriate treatment, and unequal access to care.

- Examples of biased algorithms: Studies have shown that AI systems used in medical diagnosis can exhibit bias based on race, gender, or socioeconomic status. Similar biases could emerge in mental health AI.

- How biased algorithms can lead to misdiagnosis or unequal treatment: A biased algorithm might misinterpret symptoms or prioritize certain diagnoses over others, leading to inaccurate diagnoses and inappropriate treatment plans. This could disproportionately affect marginalized communities.

- The importance of diverse and representative datasets: To mitigate algorithmic bias, it's crucial to train AI systems on diverse and representative datasets that accurately reflect the population being served.

Bias can manifest at various stages, from data collection and algorithm design to the interpretation of results. Addressing algorithmic bias requires careful attention to data quality, algorithm transparency, and ongoing monitoring for discriminatory outcomes.
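As one illustration of what "ongoing monitoring for discriminatory outcomes" could look like in practice, the sketch below compares how often a screening model misses true cases in each demographic group. It is a minimal example under stated assumptions, not a complete fairness audit: the record layout `(group, actual, predicted)`, the function names, and the choice of false-negative rate as the metric are all hypothetical and not drawn from any specific system.

```python
from collections import defaultdict


def false_negative_rate_by_group(records):
    """Return the share of true cases the model missed, per group.

    `records` is an iterable of (group, actual, predicted) tuples, where
    `actual` and `predicted` are booleans. This layout is an assumption
    made for illustration only.
    """
    positives = defaultdict(int)  # true cases seen per group
    missed = defaultdict(int)     # true cases the model failed to flag
    for group, actual, predicted in records:
        if actual:
            positives[group] += 1
            if not predicted:
                missed[group] += 1
    # Groups with no true cases are omitted because the rate is undefined.
    return {group: missed[group] / positives[group] for group in positives}


def max_disparity(rates):
    """Gap between the worst-served and best-served group."""
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())
```

A large gap between groups would not prove discrimination on its own, but it is the kind of signal that could trigger a closer review of the training data and the model.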

The Erosion of Patient Autonomy and Therapeutic Trust in AI-Driven Mental Health Platforms

The increasing reliance on AI in mental healthcare raises concerns about the erosion of patient autonomy and the potential damage to the therapeutic relationship.

Constant monitoring and surveillance

AI systems capable of tracking patient behavior, such as through wearable sensors or app usage, can create a sense of constant surveillance. This can undermine the trust and open communication essential for effective therapy.

- Examples of AI systems that track patient behavior: Some apps monitor sleep patterns, mood fluctuations, and activity levels, potentially feeding data into an AI algorithm for analysis.

- The potential for feeling judged or monitored: Patients may feel hesitant to disclose sensitive information if they believe they are constantly being monitored and judged by an AI system.

- Impact on open communication between patient and therapist: Constant monitoring can hinder the development of a trusting therapeutic relationship, making it difficult for patients to openly share their thoughts and feelings.

Constant monitoring may stifle self-disclosure, a vital aspect of successful therapy. Patients need to feel safe and comfortable to explore sensitive issues without fear of judgment or reprisal.

Lack of human interaction and empathy

While AI can assist in mental healthcare, it cannot replace the human connection and empathy crucial for effective treatment. The emotional nuances of mental health require the understanding and support that only a human therapist can provide.

- Importance of human empathy in mental health: Empathy is a cornerstone of effective mental health treatment, fostering a sense of understanding, validation, and hope.

- The limitations of AI in understanding nuanced emotional cues: AI systems struggle to interpret the complexities of human emotion, potentially missing subtle cues that are critical in diagnosis and treatment.

- The risk of dehumanizing the therapeutic process: Over-reliance on AI could dehumanize the therapeutic process, reducing patients to data points rather than individuals with unique needs and experiences.

A significant concern is that patients may feel more isolated and lonely even while using AI-powered tools. Human interaction remains indispensable in addressing the emotional and relational aspects of mental health challenges.

Regulatory and Ethical Frameworks for AI in Mental Healthcare

The rapid integration of AI into mental healthcare highlights the urgent need for robust regulatory frameworks and ethical guidelines.

The need for stricter regulations

Current regulations often lag behind technological advancements, creating a regulatory gap that needs to be addressed to protect patient privacy and ensure the ethical development and use of AI in mental healthcare.

- Current regulatory gaps in AI and healthcare: Many jurisdictions lack specific regulations addressing the use of AI in mental healthcare, leading to inconsistencies and potential loopholes.

- Examples of existing regulations that could be adapted: Regulations related to data privacy (e.g., GDPR, HIPAA) and medical device safety could be adapted and expanded to cover AI systems used in mental healthcare.

- Calls for international collaboration: International collaboration is essential to establish consistent standards and guidelines for the ethical development and use of AI in mental healthcare globally.

Specific regulatory areas demanding attention include data ownership, algorithm transparency (explainable AI), accountability for AI-driven decisions, and mechanisms for redress in case of harm.

Ethical considerations and responsible innovation

Ethical considerations should guide the design, development, and implementation of AI systems in mental healthcare. This includes adhering to established ethical principles and involving patients and stakeholders in the development process.

- Principles of beneficence, non-maleficence, autonomy, and justice: These four core ethical principles should underpin all aspects of AI development and deployment in mental healthcare.

- Importance of involving patients and stakeholders in the development process: Patient participation is crucial to ensure that AI systems are developed and used in ways that respect their rights and preferences.

Concrete examples of ethical considerations include obtaining informed consent for data collection and use, ensuring algorithm transparency, and establishing clear protocols for handling sensitive information.
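To make the informed-consent point concrete, the following sketch shows one way a platform might keep data out of an analysis unless the patient has agreed to that specific purpose. It is a simplified illustration under assumptions: the `ConsentRecord` type, the `filter_by_consent` function, the purpose string, and the dictionary layout of readings are all hypothetical, and a real system would also need consent withdrawal, audit logging, and legal review.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    """Purposes a patient has explicitly agreed to (hypothetical schema)."""
    patient_id: str
    granted_purposes: set = field(default_factory=set)


def filter_by_consent(readings, consents, purpose):
    """Keep only readings from patients who consented to `purpose`.

    `readings` is a list of dicts with a "patient_id" key. Patients with
    no consent record are excluded by default.
    """
    allowed = {
        record.patient_id
        for record in consents
        if purpose in record.granted_purposes
    }
    return [reading for reading in readings if reading["patient_id"] in allowed]
```

Excluding patients by default when no consent record exists reflects the principle that data use should be opt-in rather than assumed.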

Conclusion

While AI offers promising advancements in mental healthcare, the potential for surveillance and the erosion of patient privacy demand careful consideration. The ethical implications, data security concerns, and the need for robust regulatory frameworks cannot be overlooked. Moving forward, responsible innovation and a patient-centered approach are crucial to harnessing the benefits of AI in mental healthcare while mitigating its risks. We must prioritize ethical guidelines and regulations to ensure that AI in mental healthcare remains a force for good, respecting patient autonomy and promoting genuine therapeutic relationships. Continued discussion and critical evaluation of the role of AI in mental healthcare are vital to shaping a future where technology enhances, rather than undermines, the well-being of individuals.

Featured Posts

-

La Ligas Piracy Block Vercel Challenges Internet Censorship

May 16, 2025

La Ligas Piracy Block Vercel Challenges Internet Censorship

May 16, 2025 -

United Healths Hemsley Can A Boomerang Ceo Deliver

May 16, 2025

United Healths Hemsley Can A Boomerang Ceo Deliver

May 16, 2025 -

Sigue El Encuentro Venezia Napoles En Directo

May 16, 2025

Sigue El Encuentro Venezia Napoles En Directo

May 16, 2025 -

Gambling On Calamity The Troubling Trend Of Betting On The Los Angeles Wildfires

May 16, 2025

Gambling On Calamity The Troubling Trend Of Betting On The Los Angeles Wildfires

May 16, 2025 -

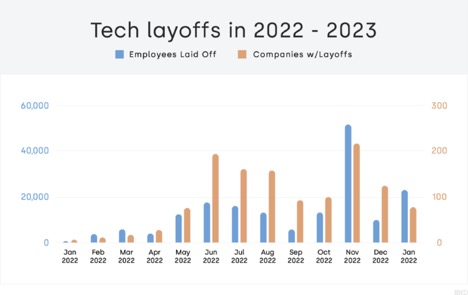

Microsoft Layoffs Over 6 000 Employees Affected

May 16, 2025

Microsoft Layoffs Over 6 000 Employees Affected

May 16, 2025

Latest Posts

-

103 X Vont Weekend A Visual Summary April 4 6 2025

May 16, 2025

103 X Vont Weekend A Visual Summary April 4 6 2025

May 16, 2025 -

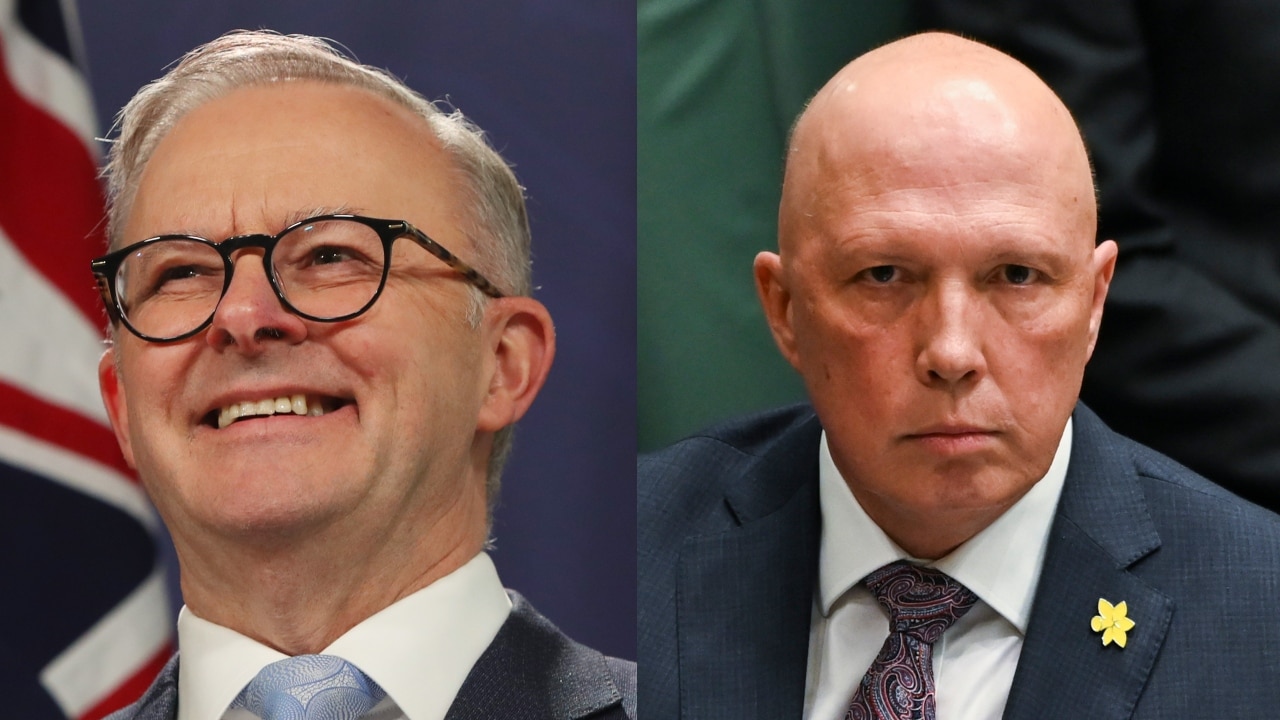

Albanese And Dutton Face Off Dissecting Their Key Policy Proposals

May 16, 2025

Albanese And Dutton Face Off Dissecting Their Key Policy Proposals

May 16, 2025 -

Conquer May 8th Mlb Dfs Expert Sleeper Picks And Hitter To Fade

May 16, 2025

Conquer May 8th Mlb Dfs Expert Sleeper Picks And Hitter To Fade

May 16, 2025 -

Vont Weekend Photo Recap April 4 6 2025 103 X

May 16, 2025

Vont Weekend Photo Recap April 4 6 2025 103 X

May 16, 2025 -

Best Mlb Dfs Picks For May 8th Sleepers Value And One To Avoid

May 16, 2025

Best Mlb Dfs Picks For May 8th Sleepers Value And One To Avoid

May 16, 2025