The Dark Side Of AI Therapy: Surveillance And State Control

Data Collection and Privacy Concerns in AI Therapy

The growing use of AI in mental healthcare raises significant concerns about data collection and privacy. AI therapy platforms collect extensive data that can add up to a detailed profile of a user's mental state, which raises ethical and practical questions about data security and potential misuse.

The Scope of Data Collection

AI therapy apps gather a surprisingly broad range of data, often without sufficient transparency. This data can be incredibly sensitive and revealing of an individual's vulnerabilities.

- Voice recordings: Sessions may be recorded, capturing not just what is said but also vocal inflections, pauses, and other cues to emotional state.

- Text messages: Typed messages, including personal thoughts, fears, and self-disclosures, may be stored and analyzed.

- Biometric data: Some apps track heart rate, sleep patterns, and other physiological data, potentially revealing further insights into mental health.

- Location data: GPS information can reveal a user's movements and daily routines.

In many cases users are unaware of the full extent of the data collected or how it is used. The potential for misuse is significant: unauthorized access can lead to identity theft, blackmail, or social stigmatization, and data breaches, a constant threat in the digital age, put the confidentiality of sensitive mental health information at further risk.

Lack of Data Security and Encryption

The vulnerability of AI systems to hacking and data breaches is a serious concern. Mental health data is exceptionally sensitive; its unauthorized disclosure can have devastating consequences for individuals.

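- Past data breaches in healthcare settings have shown how damaging insecure data handling can be, with personal information, including mental health records, exposed to malicious actors. In the 2020 breach of the Finnish psychotherapy provider Vastaamo, for example, patient therapy records were stolen and patients were then extorted.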

- Robust encryption and stringent security protocols are essential to protect this sensitive information, yet these are not consistently implemented across all AI therapy platforms.

Developers and providers of AI therapy platforms have a legal and ethical responsibility to implement rigorous data security measures. Failure to do so exposes vulnerable individuals to potentially life-altering risks.

AI Therapy and State Surveillance

The potential for government access to sensitive data collected through AI therapy platforms is chilling. This raises serious concerns about state surveillance and the erosion of civil liberties.

Potential for Government Access to Sensitive Data

Governments could exploit AI therapy data for surveillance purposes, particularly targeting individuals deemed a threat to national security or those expressing dissenting opinions.

- The lack of robust regulations governing government access to this data creates a significant vulnerability.

- Scenarios of targeted monitoring of political dissidents or individuals expressing controversial viewpoints are plausible. This raises troubling questions about the balance between national security and individual rights.

The ethical implications of government surveillance of mental health data are profound. The potential for misuse to suppress dissent or unfairly target marginalized communities is a serious concern that necessitates immediate attention.

Bias and Discrimination in AI Algorithms

AI algorithms are trained on data, and if that data reflects existing societal biases, the algorithms are likely to reproduce those biases and can amplify them. This is particularly dangerous in mental health care.

- Algorithms might misdiagnose or unfairly label individuals from marginalized communities.

- Bias in AI systems can lead to discriminatory practices, exacerbating existing inequalities in access to care.

The need for unbiased and transparent algorithms is paramount. Addressing bias in AI requires careful data curation and algorithmic design, ensuring fairness and equity in the provision of mental health services.
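One concrete way to start looking for this kind of bias is to compare how often a model flags people in different groups. The sketch below is a minimal illustration of that idea (a simple demographic parity check), not a complete fairness audit. The record layout, the group labels, and the function names are all hypothetical, and a real audit would also need to compare error rates against clinical ground truth.

```python
from collections import defaultdict


def flag_rate_by_group(records):
    """Return the share of users flagged as high-risk within each group.

    `records` is an iterable of (group, flagged) pairs. Both fields are
    hypothetical stand-ins for whatever a real platform stores.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, is_flagged in records:
        totals[group] += 1
        if is_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


def max_disparity(rates):
    """Largest gap in flag rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Made-up sample data, for illustration only.
sample = [
    ("A", True), ("A", False), ("A", False), ("A", False),
    ("B", True), ("B", True), ("B", False), ("B", False),
]

rates = flag_rate_by_group(sample)
gap = max_disparity(rates)
print(rates, gap)
```

A large gap does not prove discrimination on its own, since groups can differ in underlying need, but it is a signal that the training data and the model's outputs deserve closer review.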

The Erosion of Patient Autonomy and Confidentiality

The integration of AI in mental health raises serious concerns about the erosion of patient autonomy and confidentiality.

The Impact of Algorithmic Decision-Making

AI systems have the potential to override clinical judgment and infringe on patient autonomy.

- Algorithmic decision-making might lead to inappropriate interventions or misdiagnoses, overriding the professional judgment of mental health practitioners.

- Over-reliance on AI could diminish the therapeutic relationship between patient and therapist, undermining the human element crucial to effective mental health care.

Maintaining human oversight in AI-assisted therapy is essential to safeguard patient autonomy and ensure ethical treatment.

The Challenges to Maintaining Confidentiality

Ensuring the confidentiality of sensitive mental health information when using AI therapy platforms presents significant challenges.

- Data sharing with third-party companies is common, blurring the lines of confidentiality and raising serious privacy concerns.

- The lack of clear guidelines regarding data privacy creates further vulnerabilities.

Strict regulations and ethical guidelines are crucial to protect patient confidentiality in the age of AI-powered mental health care.
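Regulation aside, platforms can also limit what third parties learn in the first place. The sketch below shows one possible approach under stated assumptions: strip a record down to an allow-list of fields and replace the user identifier with a keyed hash before anything leaves the platform. The field names, the allow-list, and the function names are hypothetical. Note that pseudonymization of this kind reduces exposure but is not full anonymization.

```python
import hashlib
import hmac

# Hypothetical allow-list: only these fields may ever be shared externally.
ALLOWED_FIELDS = {"session_length_minutes", "app_version"}


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a user ID with a keyed SHA-256 hash (HMAC).

    Without the secret key, a third party cannot recompute the token
    from a known user ID.
    """
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, secret_key: bytes) -> dict:
    """Keep only allow-listed fields and swap the user ID for a token."""
    shared = {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
    shared["user_token"] = pseudonymize(record["user_id"], secret_key)
    return shared


# Made-up record, for illustration only.
record = {
    "user_id": "user-123",
    "transcript": "private session text",
    "location": (0.0, 0.0),
    "session_length_minutes": 42,
    "app_version": "1.0",
}
key = b"example-key-kept-on-the-platform"

shared = minimize_record(record, key)
print(sorted(shared))
```

The allow-list design means that any new field added to a record stays private by default until someone deliberately decides it may be shared.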

Conclusion

The increasing use of AI in mental health offers potential benefits, but it also presents serious threats to privacy, autonomy, and equity. The potential for surveillance and state control through AI therapy calls for a critical evaluation of the technology's implications. Unchecked expansion of AI in mental healthcare risks creating a system that surveils and controls individuals rather than providing effective treatment, so patient well-being and privacy must come first. We need stronger regulations, greater transparency, and robust ethical guidelines to ensure that AI is used responsibly in mental health care. Join the conversation about responsible AI therapy and help shape a future where technology enhances, not endangers, mental health care and protects our fundamental rights and freedoms.

Featured Posts

-

Rockies Aim To Snap 7 Game Losing Streak Against Padres

May 15, 2025

Rockies Aim To Snap 7 Game Losing Streak Against Padres

May 15, 2025 -

Hokejove Ms Svedove S 18 Hvezdami Nhl Nemci Jen Se Tremi Velky Rozdil V Kadru

May 15, 2025

Hokejove Ms Svedove S 18 Hvezdami Nhl Nemci Jen Se Tremi Velky Rozdil V Kadru

May 15, 2025 -

Dodgers Freeman Ohtani Hit Homers In Repeat Win Against Marlins

May 15, 2025

Dodgers Freeman Ohtani Hit Homers In Repeat Win Against Marlins

May 15, 2025 -

Official Dodgers Call Up Infielder Hyeseong Kim Report

May 15, 2025

Official Dodgers Call Up Infielder Hyeseong Kim Report

May 15, 2025 -

Game 6 Betting Jimmy Butlers Picks And Analysis Rockets Vs Warriors

May 15, 2025

Game 6 Betting Jimmy Butlers Picks And Analysis Rockets Vs Warriors

May 15, 2025

Latest Posts

-

Ndax Becomes Official Partner Of The Nhl Playoffs In Canada

May 15, 2025

Ndax Becomes Official Partner Of The Nhl Playoffs In Canada

May 15, 2025 -

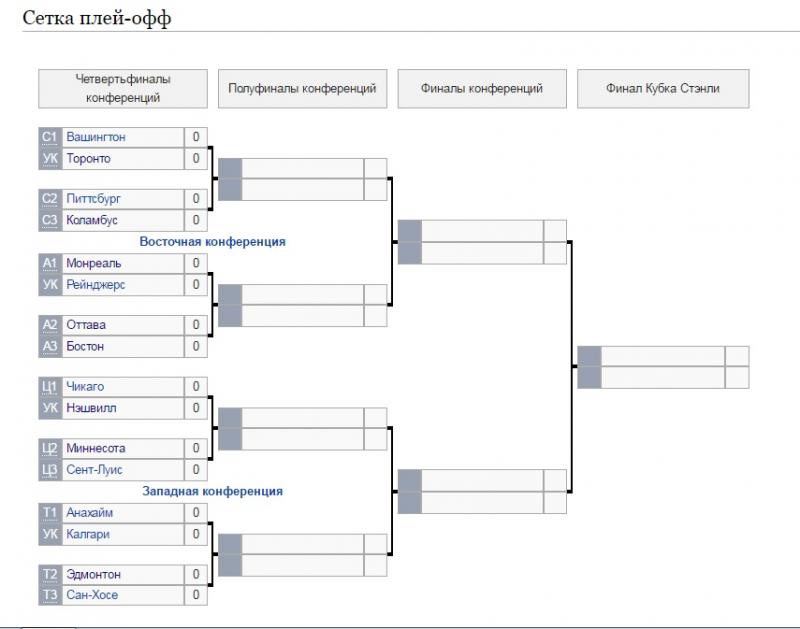

Sergey Bobrovskiy 20 Luchshikh Vratarey Pley Off N Kh L

May 15, 2025

Sergey Bobrovskiy 20 Luchshikh Vratarey Pley Off N Kh L

May 15, 2025 -

Nhl And Ndax Partner For Stanley Cup Playoffs In Canada

May 15, 2025

Nhl And Ndax Partner For Stanley Cup Playoffs In Canada

May 15, 2025 -

Pley Off N Kh L Tampa Bey Kucherov Oderzhivaet Pobedu Nad Floridoy

May 15, 2025

Pley Off N Kh L Tampa Bey Kucherov Oderzhivaet Pobedu Nad Floridoy

May 15, 2025 -

Tampa Bey Vybivaet Floridu Kucherov Vedyot Komandu K Pobede V Serii Pley Off

May 15, 2025

Tampa Bey Vybivaet Floridu Kucherov Vedyot Komandu K Pobede V Serii Pley Off

May 15, 2025