Revolutionizing Voice Assistant Development: OpenAI's 2024 Breakthrough

Enhanced Natural Language Understanding (NLU)

OpenAI's improved NLU models are enabling voice assistants to understand nuanced language, context, and intent with unprecedented accuracy. This leap forward is driven by significant advancements in deep learning and the refinement of existing AI models. The result? Voice assistants that are far more intuitive and capable than ever before.

- Improved handling of colloquialisms and slang: Gone are the days of rigid keyword matching. OpenAI's models now understand the subtleties of informal language, slang, and idiomatic expressions, leading to more natural and fluid conversations.

- Better understanding of complex sentences and multiple requests: Users can now issue complex, multi-part instructions without the assistant getting lost in translation. The improved NLU capabilities allow for the parsing and interpretation of intricate requests, significantly enhancing the usability of voice assistants.

- Reduced reliance on keyword-based triggering: Instead of relying on specific keywords, OpenAI's models understand the intent behind user utterances, leading to more robust and flexible interactions. This means you can ask the same question in different ways and still get the correct response.

- Increased accuracy in recognizing accents and dialects: OpenAI's models are trained on diverse datasets, significantly improving their ability to understand a wider range of accents and dialects. This inclusivity makes voice assistants accessible to a far broader audience.

Further explanation: OpenAI's advancements in transformer models, particularly the fine-tuning of large language models (LLMs) like GPT-4 and its successors, have played a crucial role in these improvements. Gains over previous generations of NLU technology are measured with metrics such as intent classification accuracy (the share of utterances assigned the correct intent) and entity recognition precision (the share of extracted entities that are correct). The improvements draw on transfer learning and refined training methods, which make the models more robust and better at generalizing to inputs they were not trained on.
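
To make the two metrics named above concrete, here is a minimal Python sketch of how they are commonly computed. The intent labels, entity tuples, and function names are hypothetical illustrations, not data or code from OpenAI.

```python
from typing import List, Set, Tuple

Entity = Tuple[str, str]  # (entity type, surface text); a hypothetical representation


def intent_accuracy(predicted: List[str], gold: List[str]) -> float:
    """Fraction of utterances whose predicted intent matches the gold label."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same length")
    if not gold:
        return 0.0
    correct = sum(p == g for p, g in zip(predicted, gold))
    return correct / len(gold)


def entity_precision(predicted: List[Set[Entity]], gold: List[Set[Entity]]) -> float:
    """Share of predicted entities that also appear in the gold annotation."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same length")
    true_positives = sum(len(p & g) for p, g in zip(predicted, gold))
    total_predicted = sum(len(p) for p in predicted)
    return true_positives / total_predicted if total_predicted else 0.0


# Made-up evaluation set of four utterances.
gold_intents = ["set_alarm", "play_music", "get_weather", "set_alarm"]
pred_intents = ["set_alarm", "play_music", "set_alarm", "set_alarm"]

gold_entities = [{("time", "7 am")}, {("artist", "Queen")}, {("city", "Oslo")}, {("time", "noon")}]
pred_entities = [{("time", "7 am")}, {("artist", "Queen"), ("genre", "rock")}, set(), {("time", "noon")}]

print(intent_accuracy(pred_intents, gold_intents))
print(entity_precision(pred_entities, gold_entities))
```

Precision only penalizes spurious entities; a fuller evaluation would usually report recall alongside it so that missed entities are counted too.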

Contextual Awareness and Memory

Modern voice assistants are moving beyond single-turn interactions. OpenAI's innovations are providing them with superior contextual memory and the ability to maintain conversation flow across multiple turns. This means the assistant remembers previous interactions, creating a more engaging and personalized experience.

- Improved ability to remember previous interactions within a conversation: The voice assistant can now recall earlier parts of the conversation, allowing for a more natural and coherent dialogue.

- Enhanced understanding of user preferences and history: By remembering past interactions, the assistant can personalize its responses and offer more relevant suggestions. This adaptive learning builds a more tailored experience over time.

- More personalized and relevant responses: The contextual awareness leads to responses that are directly related to the ongoing conversation, rather than generic or unrelated answers.

- Development of long-term memory capabilities: OpenAI's research is pushing the boundaries of contextual memory, exploring methods to retain information across multiple sessions and even over extended periods.

Further explanation: Techniques like memory networks and attention mechanisms within transformer models are key to OpenAI's advancements in conversational memory. They let the model weight the relevant parts of previous interactions more heavily when formulating a response, so a follow-up such as "make it 8 pm" can be tied back to the request it refers to. For users, this means more coherent, helpful, and engaging conversations.
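
The idea of attending to relevant earlier turns can be sketched with scaled dot-product attention over toy vectors. This is an illustration of the general mechanism only: the two-dimensional "embeddings" and the example dialogue are invented, and a real model learns its representations rather than having them written by hand.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the maximum before exponentiating."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: List[float], turn_vectors: List[List[float]]) -> List[float]:
    """Scaled dot-product attention weights of one query over past turns."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, vec)) / scale for vec in turn_vectors]
    return softmax(scores)


history = [
    "Book a table for two in Rome",
    "What's the weather tomorrow?",
    "Make it 8 pm",
]
# Hypothetical embeddings: axis 0 ~ "restaurant booking", axis 1 ~ "weather".
turn_vectors = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]

# New user turn: "Change the reservation to three people" (booking-related).
query = [1.0, 0.0]

weights = attend(query, turn_vectors)
most_relevant = history[max(range(len(history)), key=weights.__getitem__)]
print(most_relevant)
```

The weights sum to one, and the booking-related turns receive more weight than the weather question, which is the behavior the paragraph above describes.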

Improved Personalization and Customization

OpenAI's advancements allow for more tailored voice assistant experiences, adapting to individual user needs and preferences. This personalization goes beyond simple preference settings, creating truly individualized interactions.

- Personalized recommendations and suggestions: The assistant learns your preferences over time and offers tailored recommendations for everything from music and movies to news and tasks.

- Adaptive learning based on user behavior: The more you use the assistant, the better it understands your needs and adapts its responses accordingly.

- Customization of voice and personality traits: Future iterations may offer choices in voice tone and even personality characteristics, allowing for a more personalized and engaging experience.

- Integration with personal calendars, contacts, and other data: Seamless integration with your personal information allows the assistant to provide highly relevant and contextually appropriate responses.

Further explanation: Machine learning algorithms are at the heart of this personalization. By analyzing user data (with appropriate privacy protections), the system identifies recurring patterns and preferences and uses them to tailor its responses. Because this depends on collecting personal data, it is crucial to address the ethical implications: users should know what is stored, why it is stored, and how to remove it.
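
As a rough illustration of learning preferences from behavior, the sketch below counts how often a user requests each content category and ranks the most frequent ones. The class name, its methods, and the sample requests are hypothetical; production systems use far richer models, and the `forget` method only hints at the kind of user control the privacy discussion above calls for.

```python
from collections import Counter
from typing import List


class PreferenceProfile:
    """Frequency-based preference tracker (a deliberately simple stand-in)."""

    def __init__(self) -> None:
        self._counts: Counter = Counter()

    def record(self, category: str) -> None:
        """Register one request in the given category."""
        self._counts[category] += 1

    def top(self, n: int = 3) -> List[str]:
        """Return up to n categories, most frequently requested first."""
        return [category for category, _ in self._counts.most_common(n)]

    def forget(self) -> None:
        """Erase everything learned so far, e.g. at the user's request."""
        self._counts.clear()


profile = PreferenceProfile()
for request in ["jazz", "news", "jazz", "podcasts", "jazz", "news"]:
    profile.record(request)

top_two = profile.top(2)
print(top_two)
```

Storing only coarse category counts rather than raw utterances is one way to keep the stored data minimal.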

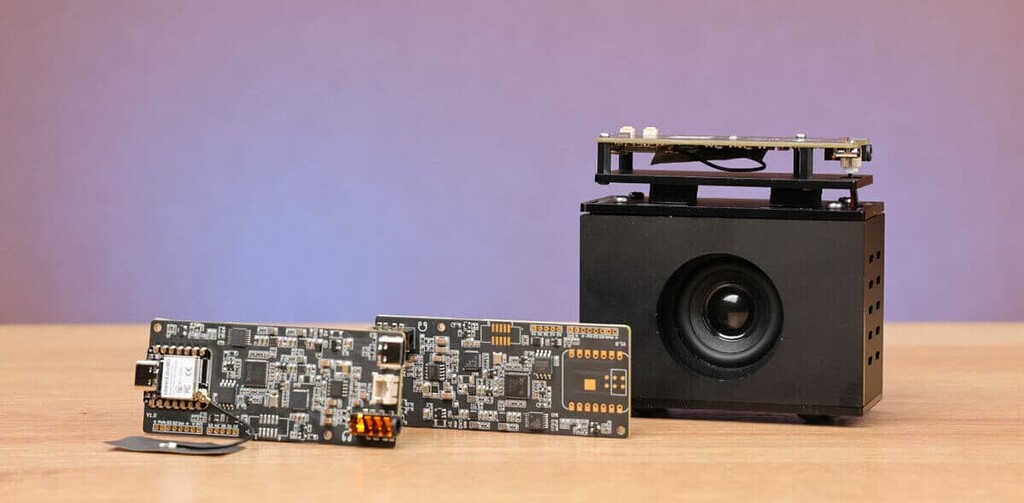

Multimodal Integration

OpenAI's research extends beyond text and voice, enabling the integration of visual and other sensory data to create richer, more interactive voice assistant experiences. This multimodal approach opens up a world of possibilities for how we interact with technology.

- Integration with image recognition and object detection: The assistant can now understand visual information, allowing for commands like "show me pictures of cats" or "turn off the lights in the living room" (by recognizing the room visually).

- Understanding of visual context within conversations: The ability to process visual data adds a new layer of context to conversations, significantly enhancing the assistant's understanding.

- Enhanced capabilities for smart home control and automation: Multimodal integration simplifies smart home management, allowing for more intuitive control through voice and visual cues.

- Potential for augmented reality and virtual reality applications: The future holds exciting possibilities for integrating voice assistants seamlessly into AR and VR experiences, creating truly immersive interactions.

Further explanation: Multimodal AI combines data from multiple modalities, such as vision and audio, to build a more complete picture of the user's environment and intentions. A spoken command that is ambiguous on its own, such as "turn off the lights", can be resolved with visual context about which room the user is in, which allows more complex and context-rich interactions.
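
One simple way to combine modalities is late fusion: each modality is interpreted separately and the results are merged by a rule. The sketch below fills in a missing room for a voice command from a camera-based room estimate. All names, the data classes, and the 0.6 confidence threshold are assumptions made for illustration; they do not describe any specific product or API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VoiceCommand:
    action: str
    device: str
    room: Optional[str] = None  # None when the user did not name a room


@dataclass
class VisionObservation:
    room: str          # room label produced by a hypothetical vision model
    confidence: float  # that model's confidence, between 0 and 1


def resolve_room(
    command: VoiceCommand,
    observation: Optional[VisionObservation],
    min_confidence: float = 0.6,
) -> Optional[str]:
    """Pick the target room: spoken room first, then a confident visual guess."""
    if command.room is not None:
        return command.room
    if observation is not None and observation.confidence >= min_confidence:
        return observation.room
    return None  # still ambiguous; the assistant should ask the user


command = VoiceCommand(action="turn_off", device="lights")
seen = VisionObservation(room="living room", confidence=0.9)
print(resolve_room(command, seen))
```

Giving the spoken room priority keeps the user in control; the visual estimate is used only to fill a gap, and a low-confidence estimate results in a clarifying question instead of a guess.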

Conclusion

OpenAI's breakthroughs in 2024 are significantly impacting voice assistant development, pushing the boundaries of what's possible in human-computer interaction. Enhanced NLU, contextual awareness, personalization, and multimodal integration are transforming these tools from simple command interpreters into sophisticated conversational partners. This evolution promises a future where voice assistants are seamlessly integrated into our lives, providing personalized assistance and enriching our daily experiences. To stay ahead in this rapidly evolving field, explore OpenAI's latest advancements in voice assistant development and discover how you can leverage these innovations to create the next generation of intelligent interfaces.
