Is AI Therapy A Surveillance Tool In A Police State?

The Promise of AI Therapy

AI therapy holds significant promise for mental healthcare, particularly in its potential to widen access and personalize treatment.

Improved Access to Mental Healthcare

AI-powered mental health platforms can widen access to care, particularly for underserved populations. The potential benefits include:

- 24/7 Availability: AI chatbots and virtual therapists are available at any hour and from any location with an internet connection, reducing geographical barriers and scheduling conflicts.

- Personalized Treatment Plans: AI algorithms can analyze patient data to tailor treatment plans to individual needs and preferences, with the aim of improving outcomes.

- Anonymity and Reduced Stigma: The perceived anonymity of online platforms may encourage people who would otherwise avoid seeking help because of stigma to engage in therapy.

- Reduced Cost: AI-based interventions can cost substantially less than traditional therapy, making mental healthcare more accessible to people with limited financial resources.

Together, these features could make AI-powered teletherapy a significant step toward more accessible mental healthcare.

Data-Driven Treatment

AI algorithms can analyze large volumes of patient data and identify patterns and trends that human clinicians might miss. This data-driven approach offers several potential advantages:

- Early Detection of Mental Health Issues: AI can analyze patient responses and identify early warning signs of mental health problems, enabling timely intervention.

- Improved Treatment Efficacy: Personalized treatment plans informed by AI-driven insights can lead to better outcomes.

- Objective Assessment of Progress: AI tools can track patient progress consistently over time, providing useful data for clinicians and patients alike.

AI diagnostics and predictive analytics could therefore make personalized mental healthcare considerably more effective.

The Surveillance Concerns of AI Therapy

While the benefits of AI therapy are compelling, the potential for misuse, particularly in a police state context, is a serious concern.

Data Privacy and Security

AI therapy platforms collect vast amounts of sensitive personal data, including intimate thoughts, feelings, and experiences. This data is vulnerable to breaches and misuse:

- Unauthorized Access: Cyberattacks and data breaches could expose sensitive patient information, leading to identity theft, discrimination, or blackmail.

- Lack of Robust Security Measures: Some AI platforms may lack adequate safeguards to protect patient data from unauthorized access.

- Potential for Government Surveillance: Governments could exploit vulnerabilities in AI platforms to monitor citizens' mental health and identify individuals deemed "at risk" or dissenting. This makes the security of AI systems a pressing concern.

In the United States, HIPAA compliance must be rigorously enforced for AI systems that handle protected health information to mitigate these risks.

Potential for Misuse by Authoritarian Regimes

In authoritarian regimes, AI therapy data could be weaponized for surveillance and oppression:

- Tracking Political Opponents: Governments might use AI to monitor the mental health of political opponents, identifying individuals considered "unstable" or "threatening."

- Profiling Individuals Based on Mental Health: AI could be used to profile individuals based on their mental health status, leading to discrimination and social exclusion.

- Predicting and Preventing "Undesirable" Behaviors: AI algorithms might be used to predict and prevent behaviors deemed undesirable by the state, potentially leading to preemptive arrests or other forms of repression.

The intersection of AI and authoritarianism presents a grave threat to individual freedom.

Lack of Transparency and Accountability

Many AI algorithms used in mental health are "black boxes," making it difficult to understand how they work and to hold developers accountable for potential biases or misuse:

- Algorithmic Bias: AI algorithms can perpetuate existing societal biases, leading to unfair or discriminatory outcomes.

- Absence of Clear Regulations: The lack of clear regulations and ethical guidelines for the development and deployment of AI therapy tools leaves significant room for misuse.

- Difficulty in Holding Developers Accountable: It can be challenging to hold developers accountable for the unintended consequences or misuse of their AI systems.

Clear ethical standards and regulation of AI in healthcare are therefore essential.

Balancing Innovation and Privacy

To harness the benefits of AI therapy while mitigating the risks, we must prioritize data protection and ethical considerations.

The Need for Strong Data Protection Laws

Strong data protection laws are essential to prevent the misuse of AI therapy data:

- Data Anonymization Requirements: Strict regulations requiring data anonymization can safeguard patient privacy.

- Strict Consent Protocols: Patients must give clear, informed consent to the collection, use, and sharing of their data.

- Independent Oversight Bodies: Independent oversight bodies should monitor the development and deployment of AI therapy tools to ensure compliance with ethical standards and data protection laws.

Data privacy legislation is therefore central to protecting patients.

Ethical Guidelines for AI Development

Ethical considerations must guide the development and deployment of AI therapy tools:

- Transparency: Developers should explain how their AI algorithms reach decisions in terms that clinicians and patients can understand.

- Fairness: AI systems should be designed and tested to avoid biased or discriminatory outcomes.

- Accountability: There must be clear mechanisms for accountability in case of misuse or harm.

- User Control: Users should have control over their data and the ability to access and correct it.

Responsible, ethical development is crucial to ensuring that AI benefits healthcare.

Conclusion

AI therapy offers significant potential to improve access to mental healthcare and to personalize it. However, the risks of surveillance and misuse, particularly in a police state, are substantial. The future of AI therapy hinges on striking a balance between innovation and individual rights, which requires strong data protection laws, ethical guidelines for AI development, and robust oversight mechanisms. By advocating for responsible AI development in mental health, we can help ensure that these tools remain beneficial rather than becoming instruments of oppression.

Featured Posts

-

How Ha Seong Kims Friendship With Blake Snell Aids Korean Players In The Mlb

May 15, 2025

How Ha Seong Kims Friendship With Blake Snell Aids Korean Players In The Mlb

May 15, 2025 -

Dodgers Inf Hyeseong Kims Call Up Reports And Analysis

May 15, 2025

Dodgers Inf Hyeseong Kims Call Up Reports And Analysis

May 15, 2025 -

De Gevolgen Van De Actie Tegen Npo Directeur Frederieke Leeflang

May 15, 2025

De Gevolgen Van De Actie Tegen Npo Directeur Frederieke Leeflang

May 15, 2025 -

Los Angeles Dodgers Postseason Moves And Email Communication Review

May 15, 2025

Los Angeles Dodgers Postseason Moves And Email Communication Review

May 15, 2025 -

Investigating The Use Of Apple Watches By Nhl Referees

May 15, 2025

Investigating The Use Of Apple Watches By Nhl Referees

May 15, 2025

Latest Posts

-

Ndax Becomes Official Partner Of The Nhl Playoffs In Canada

May 15, 2025

Ndax Becomes Official Partner Of The Nhl Playoffs In Canada

May 15, 2025 -

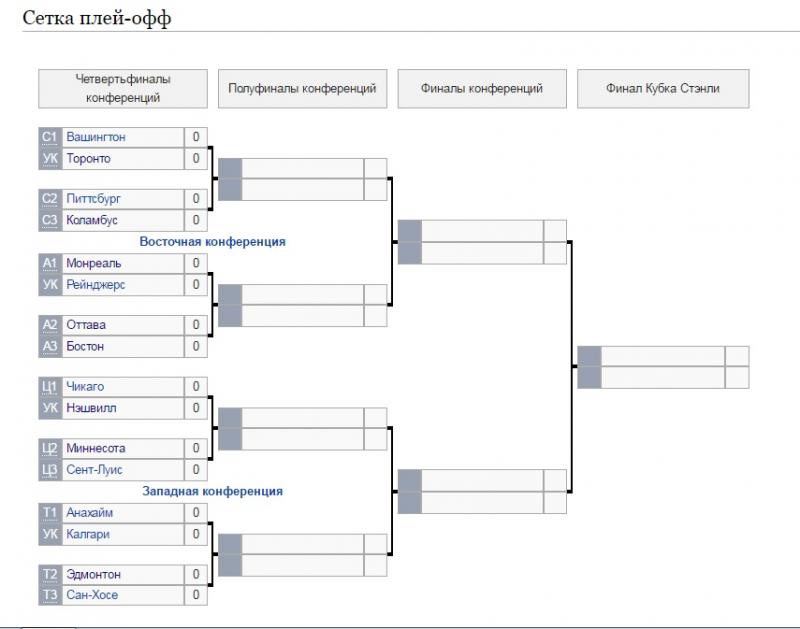

Sergey Bobrovskiy 20 Luchshikh Vratarey Pley Off N Kh L

May 15, 2025

Sergey Bobrovskiy 20 Luchshikh Vratarey Pley Off N Kh L

May 15, 2025 -

Nhl And Ndax Partner For Stanley Cup Playoffs In Canada

May 15, 2025

Nhl And Ndax Partner For Stanley Cup Playoffs In Canada

May 15, 2025 -

Pley Off N Kh L Tampa Bey Kucherov Oderzhivaet Pobedu Nad Floridoy

May 15, 2025

Pley Off N Kh L Tampa Bey Kucherov Oderzhivaet Pobedu Nad Floridoy

May 15, 2025 -

Tampa Bey Vybivaet Floridu Kucherov Vedyot Komandu K Pobede V Serii Pley Off

May 15, 2025

Tampa Bey Vybivaet Floridu Kucherov Vedyot Komandu K Pobede V Serii Pley Off

May 15, 2025