FTC Probe Into OpenAI: Implications For The Future Of AI And Data Privacy

OpenAI's Data Practices Under the Microscope

OpenAI, a leading AI research company, has drawn significant attention for its language models, such as GPT-3 and GPT-4. Its data collection methods and the potential vulnerabilities in its systems are now under scrutiny by the FTC. OpenAI's models are trained on massive datasets of text and code scraped from the internet, along with user inputs from various sources. This raises serious concerns about data privacy and the potential for misuse.

The types of data involved include:

- User inputs: Conversations, prompts, and other data provided by users interacting with OpenAI's models.

- Training data: Vast amounts of text and code from publicly available sources, potentially including personal information.

- Model outputs: The responses generated by OpenAI's models, which may reflect or reproduce biases present in the training data.

Privacy advocates and regulators have highlighted several critical concerns:

- Lack of transparency in data usage: Users may not fully understand how their data is being collected, used, and protected.

- Potential for bias and discrimination in AI models: Biases in the training data can lead to discriminatory outcomes in the AI's output.

- Insufficient measures to protect sensitive user data: OpenAI's security measures may not be robust enough to prevent data breaches or unauthorized access.

- Ethical implications of using personal data for AI training: The use of personal data without explicit consent raises significant ethical questions.

The FTC's Investigative Powers and Potential Outcomes

The FTC has broad authority under Section 5 of the FTC Act to investigate unfair and deceptive business practices, including those related to data privacy. Its investigation into OpenAI could end in several ways. OpenAI could face financial penalties for violating data privacy laws or engaging in deceptive practices. The FTC could also restrict OpenAI's data collection practices, mandating stricter safeguards and greater transparency. Finally, the investigation may lead to substantial changes in OpenAI's data handling procedures to ensure compliance with relevant regulations.

Possible outcomes include:

- Consent decrees: OpenAI might be required to enter into a consent decree, agreeing to specific changes in its data practices.

- Significant financial penalties: Substantial fines could be levied for violations of data privacy laws.

- Legal precedents: The FTC's findings could set important legal precedents that establish stricter standards for AI data handling across the industry.

Broader Implications for the AI Industry and Data Privacy Regulations

The FTC's investigation into OpenAI is likely to have a ripple effect throughout the AI industry. Other AI companies can expect increased regulatory scrutiny, prompting them to review and strengthen their own data privacy practices. The probe could also accelerate the development and implementation of stricter data protection laws globally, affecting the pace of innovation and shaping the future of responsible AI development.

The potential future landscape includes:

- Increased demand for AI ethics and data privacy expertise: Companies will need professionals skilled in navigating complex data privacy regulations and ethical AI development.

- Development of stricter data protection laws for AI: New laws and regulations are likely to emerge, potentially mirroring provisions of the GDPR or CCPA but designed specifically for the challenges posed by AI.

- Increased focus on explainable AI (XAI) and model transparency: There will be a stronger emphasis on making AI models more transparent and understandable, reducing the "black box" problem.

- Greater user control over personal data used in AI systems: Users are likely to gain more control over how their data is used in the training and operation of AI systems.

The Path Forward: Balancing Innovation and Data Protection

Balancing AI innovation with robust data privacy safeguards is a critical challenge. This requires a multifaceted approach involving proactive measures, industry collaboration, and strong regulatory frameworks. Solutions include prioritizing user consent, implementing data minimization principles, and using privacy-enhancing technologies (PETs) to protect sensitive information. Robust data governance frameworks and enhanced transparency mechanisms are also crucial.

Recommendations for responsible AI development:

- Proactive implementation of PETs: Techniques like differential privacy and federated learning can minimize the risk of data breaches and protect user privacy.

- Robust data governance frameworks: Clear policies, procedures, and accountability mechanisms are essential to manage data responsibly.

- Increased transparency and user control mechanisms: Users need clear and accessible information about how their data is being used, along with mechanisms to control their data.

- Industry-wide collaboration on ethical AI guidelines: Collaboration among AI developers, ethicists, and policymakers is critical for creating responsible AI development guidelines.
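To make the differential privacy recommendation above more concrete, here is a minimal sketch of the Laplace mechanism applied to a counting query. It is an illustration under stated assumptions, not a description of how OpenAI or any other company handles data: the function name `private_count`, the record format, and the choice of `epsilon` are all hypothetical.

```python
import random


def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count of records matching `predicate`.

    Hypothetical helper illustrating the Laplace mechanism. A counting
    query has sensitivity 1 (adding or removing one record changes the
    count by at most 1), so Laplace noise with scale 1/epsilon gives
    epsilon-differential privacy for this single query.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()

    true_count = sum(1 for record in records if predicate(record))

    sensitivity = 1.0
    scale = sensitivity / epsilon
    # The difference of two independent exponential samples with mean
    # `scale` follows a Laplace distribution with that scale.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


# Assumed toy data: each record notes whether a prompt contained an email address.
records = [{"has_email": i % 4 == 0} for i in range(100)]
noisy = private_count(records, lambda r: r["has_email"], epsilon=1.0)
```

A smaller `epsilon` adds more noise and gives stronger privacy at the cost of accuracy. Repeated queries against the same data consume additional privacy budget, which this sketch does not track.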

Conclusion: Navigating the Future with Responsible AI Development After the FTC Probe into OpenAI

The FTC probe into OpenAI's data practices is a significant development with far-reaching implications for the AI industry and data privacy regulation. It underscores the need for responsible AI development built on transparency, user consent, and robust data protection measures. The future of AI depends on balancing innovation with the protection of individual rights, which requires proactive steps by AI companies, stronger regulatory frameworks, and greater public awareness. To learn more about data privacy, AI ethics, and the FTC's investigation into OpenAI and similar investigations into other AI companies, explore resources from the FTC, privacy advocacy groups, and academic institutions dedicated to responsible AI development.

Featured Posts

-

Wise Tech To Acquire E2open For 2 1 Billion Details Of The Debt Funded Deal

May 27, 2025

Wise Tech To Acquire E2open For 2 1 Billion Details Of The Debt Funded Deal

May 27, 2025 -

Altsjyl Fy Msabqt Bryd Aljzayr 2025 Alkhtwat Walwthayq Almtlwbt

May 27, 2025

Altsjyl Fy Msabqt Bryd Aljzayr 2025 Alkhtwat Walwthayq Almtlwbt

May 27, 2025 -

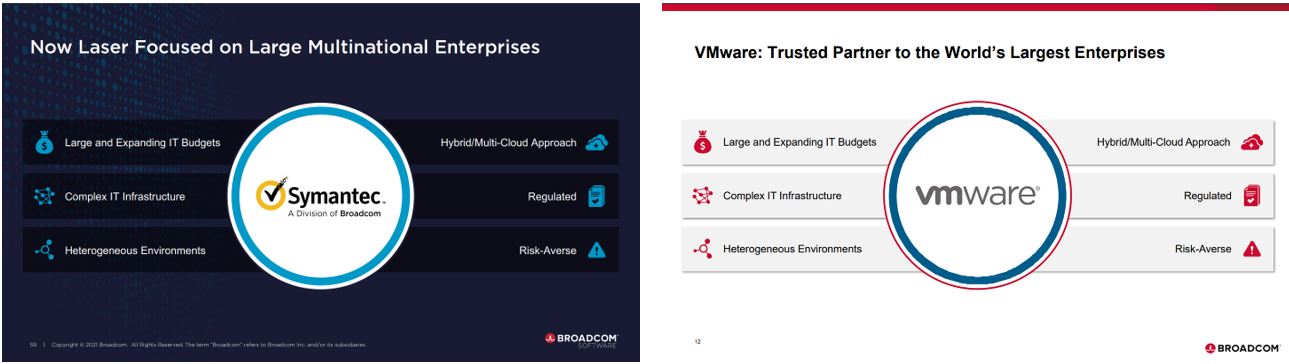

1 050 Price Hike At And T Details Broadcoms Impact On V Mware Costs

May 27, 2025

1 050 Price Hike At And T Details Broadcoms Impact On V Mware Costs

May 27, 2025 -

Osimhen To Remain At Napoli Fabrizio Romanos Confirmation

May 27, 2025

Osimhen To Remain At Napoli Fabrizio Romanos Confirmation

May 27, 2025 -

First Look Taylor Swift Teases Reputation Taylors Version

May 27, 2025

First Look Taylor Swift Teases Reputation Taylors Version

May 27, 2025