Debunking The Myth Of AI Learning: A Path To Responsible AI

Understanding How AI "Learns"

AI systems don't "learn" in the same way humans do. They don't possess genuine understanding or consciousness. Instead, AI learning relies heavily on data and sophisticated algorithms. Let's examine the core components:

The Role of Data

AI systems are trained on large datasets, fitting statistical patterns and relationships in that data in order to make predictions or decisions. This process is fundamentally different from human learning, which involves reasoning, intuition, and contextual understanding. The quality, quantity, and diversity of the data largely determine how well the resulting system performs. Three training paradigms are common:

- Supervised Learning: The algorithm learns from labeled data, where input data is paired with the correct output. Examples include image recognition (images labeled with object descriptions) and spam detection (emails labeled as spam or not spam).

- Unsupervised Learning: The algorithm explores unlabeled data to find patterns and structures. Applications include customer segmentation (grouping customers based on purchasing behavior) and anomaly detection (identifying unusual data points).

- Reinforcement Learning: The algorithm learns through trial and error, receiving rewards or penalties for its actions. This is often used in robotics and game playing, where the AI learns optimal strategies through interaction with an environment.
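To make the supervised case concrete, here is a minimal sketch in plain Python of a nearest-centroid classifier for the spam example. The feature choice (link count and exclamation-mark count), the toy emails, and the function names `fit_centroids` and `predict` are all assumptions made for illustration, not a description of how any real spam filter works.

```python
# Supervised learning sketch: nearest-centroid classification on labeled points.
# All data below is invented for illustration.

def fit_centroids(samples, labels):
    """Average the feature vectors of each label to get one centroid per class."""
    sums, counts = {}, {}
    for features, label in zip(samples, labels):
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(centroids, features):
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    def sq_dist(center):
        return sum((c - f) ** 2 for c, f in zip(center, features))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))


# Toy labeled emails: (number of links, number of exclamation marks).
emails = [(0, 0), (1, 0), (0, 1), (7, 5), (8, 6), (6, 7)]
labels = ["ham", "ham", "ham", "spam", "spam", "spam"]

centroids = fit_centroids(emails, labels)
print(predict(centroids, (7, 6)))  # spam
print(predict(centroids, (0, 2)))  # ham
```

The "learning" here is nothing more than averaging labeled examples. The classifier has no notion of what spam is, which is the point the section makes about pattern fitting versus understanding.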

Datasets used in different AI applications vary drastically. For example, self-driving car AI relies on enormous datasets of images and sensor data, while a medical diagnosis AI uses patient records and medical images. The quality of this data directly impacts the accuracy and reliability of the AI system, highlighting the importance of data curation and preprocessing in responsible AI development.

Algorithms and Models

Various algorithms power AI systems, each with its own strengths and limitations. Understanding these algorithms is crucial for responsible AI development.

- Neural Networks: Loosely inspired by the structure of the human brain, neural networks consist of layers of interconnected nodes, each of which computes a weighted sum of its inputs and passes it through a nonlinear function. Deep learning, a subfield of machine learning, uses neural networks with many layers to model complex data.

- Decision Trees: These algorithms create a tree-like model to classify data based on a series of decisions. They're relatively simple to understand and interpret, making them suitable for explainable AI (XAI) applications.

- Support Vector Machines (SVMs): SVMs are used for classification and regression tasks. For classification, they find the hyperplane that separates the data classes with the largest possible margin.
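The interpretability of decision trees is easiest to see in the smallest possible tree. The sketch below fits a one-level tree (a "decision stump") to a single numeric feature. The data, the function name `fit_stump`, and the simplification that the rule is always "predict 1 above the threshold" are assumptions for illustration. Real tree learners consider many features, both directions, and impurity measures such as Gini or entropy.

```python
# Decision stump sketch: a one-level decision tree on one numeric feature.
# Data is invented for illustration.

def fit_stump(values, labels):
    """Try each midpoint between sorted distinct values and keep the threshold
    with the fewest training errors. The rule predicts 1 when value > threshold."""
    distinct = sorted(set(values))
    candidates = [(a + b) / 2 for a, b in zip(distinct, distinct[1:])]
    best_threshold, best_errors = None, len(values) + 1
    for threshold in candidates:
        errors = sum((v > threshold) != bool(y) for v, y in zip(values, labels))
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold, best_errors


hours_studied = [1, 2, 3, 5, 6, 8, 9]   # hypothetical feature
passed = [0, 0, 0, 1, 1, 1, 1]          # hypothetical labels

threshold, errors = fit_stump(hours_studied, passed)
print(f"rule: predict pass if hours > {threshold} ({errors} training errors)")
```

The learned model is a single human-readable rule, which is why trees are often preferred when a decision has to be explained.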

Many AI models, deep neural networks in particular, operate as "black boxes," meaning their internal workings are opaque and difficult to interpret. This lack of transparency raises concerns about accountability and trust, which is why explainable AI (XAI) is becoming increasingly important for responsible AI deployment.

Addressing Bias in AI Learning

AI systems are not immune to bias. In fact, biases can be subtly introduced at various stages of AI development, leading to unfair or discriminatory outcomes.

Sources of Algorithmic Bias

Bias can stem from several sources:

- Biased Datasets: If the training data reflects existing societal biases, the AI system will likely perpetuate and even amplify those biases. For instance, a facial recognition system trained primarily on images of white faces may perform poorly on faces of other ethnicities.

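- Biased Algorithms: The algorithms themselves can introduce bias, particularly if they are not carefully designed and tested. For example, an objective that optimizes only overall accuracy can accept poor accuracy on a small group, because that group contributes little to the total.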

- Human Biases in Design and Implementation: Human developers can unintentionally introduce biases through their choices in data selection, algorithm design, and system implementation. This underscores the importance of diverse and inclusive development teams.

Real-world examples of AI bias abound, from loan-approval systems that unfairly reject minority applicants to recruitment tools that favor certain demographic groups. These instances highlight the critical need for proactive bias mitigation strategies.

Mitigating Bias in AI Development

Addressing bias requires a multi-faceted approach:

- Data Augmentation: Increasing the diversity and representation of data can help mitigate bias. This involves collecting or synthesizing additional examples for groups that are underrepresented in the original dataset.

- Fairness-Aware Algorithms: Developing algorithms specifically designed to minimize bias is crucial. These algorithms build fairness metrics or constraints into training so that disparities between groups are reduced rather than merely measured afterward.

- Rigorous Testing and Validation: Thorough testing and validation on diverse datasets are essential to identify and address bias before deployment.

- Diverse Development Teams: A diverse team of developers can help identify and mitigate biases that might be missed by a homogenous group.
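As a rough illustration of rebalancing a dataset, the sketch below uses random oversampling, a simpler stand-in for data augmentation: it duplicates existing records from smaller groups until every group is as large as the largest one. The record layout, the `group` key, and the function name `oversample_to_balance` are assumptions for illustration. Note that duplication adds no new information, so collecting genuinely new examples is preferable when possible.

```python
import random
from collections import Counter

def oversample_to_balance(records, group_key, seed=0):
    """Duplicate randomly chosen records of smaller groups until every
    group has as many records as the largest group."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    by_group = {}
    for record in records:
        by_group.setdefault(record[group_key], []).append(record)
    target = max(len(members) for members in by_group.values())
    balanced = []
    for members in by_group.values():
        balanced.extend(members)
        balanced.extend(rng.choice(members) for _ in range(target - len(members)))
    return balanced


# Hypothetical dataset: group A has 8 records, group B only 2.
records = [{"group": "A", "x": i} for i in range(8)]
records += [{"group": "B", "x": i} for i in range(2)]

balanced = oversample_to_balance(records, "group")
print(Counter(r["group"] for r in balanced))
```

After rebalancing, both groups contribute eight records each, so a training objective no longer weights group A four times as heavily as group B.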

Techniques like adversarial debiasing (training the model alongside an adversary that tries to predict a protected attribute from the model's outputs, and penalizing the model when the adversary succeeds) and fairness metrics (quantifying how outcomes differ across groups) are increasingly used to improve the fairness and equity of AI systems.
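One widely used fairness metric is the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. The sketch below computes it in plain Python. The predictions and group labels are invented, and the function names are illustrative rather than taken from any particular library.

```python
def selection_rate(predictions, groups, group):
    """Fraction of positive predictions (1s) among members of one group."""
    picked = [p for p, g in zip(predictions, groups) if g == group]
    return sum(picked) / len(picked)


def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups, plus per-group rates."""
    rates = {g: selection_rate(predictions, groups, g) for g in sorted(set(groups))}
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical model outputs (1 = approved) for ten applicants in two groups.
predictions = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

gap, rates = demographic_parity_difference(predictions, groups)
print(rates, round(gap, 2))
```

Here group A is approved 80% of the time and group B 20% of the time, a gap of 0.6. A gap of zero would mean equal selection rates, though demographic parity is only one of several fairness definitions and they cannot generally all be satisfied at once.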

Ensuring Transparency and Explainability in AI

Transparency and explainability are paramount for building trust in AI systems. Knowing how an AI system arrived at a particular decision is crucial for accountability and debugging.

The Need for Explainable AI (XAI)

The complexity of many AI models, particularly deep neural networks, makes it challenging to understand their decision-making processes. XAI aims to address this challenge by providing insights into the internal workings of AI systems. This improves debugging capabilities, increases trust among users, and aids in regulatory compliance.

Techniques for Achieving Transparency

Several techniques contribute to greater transparency:

- Feature Importance Analysis: Identifying the features that most influence the AI system's predictions helps understand its reasoning.

- Model Visualization: Visualizing the model's structure and internal representations can improve understanding.

- Rule Extraction: Extracting understandable rules from the model can provide insights into its decision-making process.

- Local Interpretable Model-agnostic Explanations (LIME): LIME explains individual predictions by approximating the complex model locally with a simpler, interpretable model.
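Feature importance analysis can be sketched with permutation importance: shuffle one feature's values across the dataset and measure how much accuracy drops. The `model` function below is a hypothetical stand-in that deliberately uses only the first feature. The data is randomly generated and the function names are assumptions for illustration.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, n_features, repeats=20, seed=0):
    """Average accuracy drop when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with feature j replaced by a shuffled value.
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / repeats)
    return importances


def model(row):
    """Hypothetical black box: in fact it only looks at feature 0."""
    return int(row[0] > 0.5)


data_rng = random.Random(1)
rows = [(data_rng.random(), data_rng.random()) for _ in range(200)]
labels = [model(r) for r in rows]

importances = permutation_importance(model, rows, labels, n_features=2)
print([round(v, 3) for v in importances])
```

Shuffling feature 1 changes nothing because the model ignores it, so its importance is exactly zero, while shuffling feature 0 causes a large accuracy drop. The technique treats the model as a black box, which is what makes it applicable to models whose internals are hard to inspect.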

Building a Framework for Responsible AI Development

Creating a framework for responsible AI development requires a holistic approach encompassing ethical guidelines, practical implementations, and continuous monitoring.

Ethical Guidelines and Frameworks

Several organizations have published ethical guidelines for AI development or research on its societal impact:

- AI Now Institute: Focuses on the social implications of AI technologies.

- OpenAI: A research company committed to ensuring that artificial general intelligence benefits all of humanity.

- IEEE: The Institute of Electrical and Electronics Engineers has developed ethical guidelines for autonomous and intelligent systems.

Core ethical principles for responsible AI include fairness, accountability, transparency, privacy, and robustness.

Implementing Responsible AI Practices

Building responsible AI systems necessitates continuous effort:

- Regular Audits: Periodic audits ensure compliance with ethical guidelines and identify potential biases.

- Ongoing Monitoring: Continuous monitoring of AI systems in real-world deployments is crucial to detect and address unforeseen issues.

- User Feedback Mechanisms: Incorporating user feedback allows for continuous improvement and adaptation to user needs and concerns.

- Ethical Review Boards: Establishing ethical review boards to oversee AI development and deployment can provide valuable oversight.
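As one very simple illustration of ongoing monitoring, the sketch below compares the rate of positive predictions in a recent window against a baseline recorded at deployment time and raises a flag when the shift exceeds a tolerance. The 0.10 tolerance, the sample data, and the function names are assumptions. A production system would typically use proper statistical tests and track per-group metrics as well.

```python
def positive_rate(predictions):
    """Fraction of predictions equal to 1."""
    return sum(predictions) / len(predictions)


def drift_alert(baseline_predictions, recent_predictions, tolerance=0.10):
    """Return (flag, shift), where flag is True when the recent positive rate
    differs from the baseline rate by more than `tolerance`."""
    shift = abs(positive_rate(recent_predictions) - positive_rate(baseline_predictions))
    return shift > tolerance, shift


# Hypothetical prediction logs (1 = positive decision).
baseline = [1, 0, 0, 1, 0, 0, 1, 0, 0, 0]   # 30% positive at launch
recent = [1, 1, 0, 1, 1, 0, 1, 0, 1, 1]     # 70% positive this week

flagged, shift = drift_alert(baseline, recent)
print(flagged, round(shift, 2))
```

A flag like this does not say what went wrong. It only signals that the system's behavior has moved far enough from its audited baseline to warrant a human review.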

Conclusion

The idea that AI learns through independent, sentient evolution is a myth. AI systems fit patterns in data using algorithms, a process that is susceptible to bias and involves no genuine understanding. Responsible AI development means acknowledging these limitations and addressing them proactively through bias mitigation, transparency, explainability, and ethical oversight. By understanding how AI actually "learns," we can move beyond the myths and build systems that serve as a powerful tool for good. Let's continue to work together on debunking the myths surrounding AI learning and forging a path toward truly responsible AI.

Featured Posts

-

Isabelle Autissier Une Vision Collaborative Du Leadership

May 31, 2025

Isabelle Autissier Une Vision Collaborative Du Leadership

May 31, 2025 -

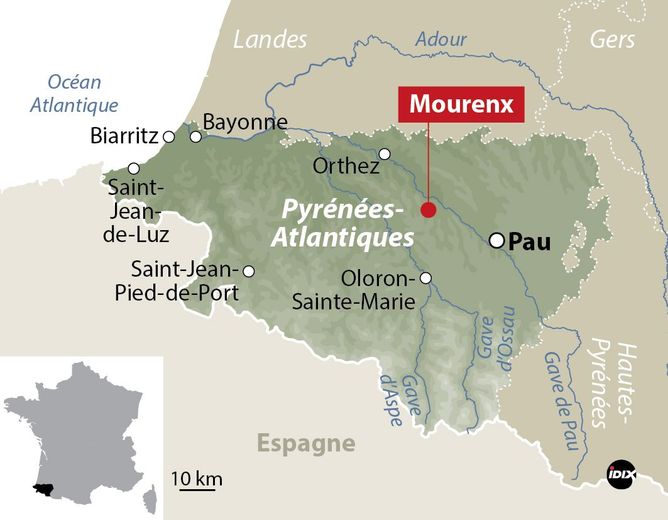

Rejets Toxiques De Sanofi L Entreprise Nie Toute Infraction

May 31, 2025

Rejets Toxiques De Sanofi L Entreprise Nie Toute Infraction

May 31, 2025 -

Buying And Selling Glastonbury Resale Tickets The Ultimate Guide

May 31, 2025

Buying And Selling Glastonbury Resale Tickets The Ultimate Guide

May 31, 2025 -

Foire Au Jambon Bayonne 2025 Analyse Des Frais D Organisation Et Du Deficit Budgetaire

May 31, 2025

Foire Au Jambon Bayonne 2025 Analyse Des Frais D Organisation Et Du Deficit Budgetaire

May 31, 2025 -

Summer Arts And Entertainment Guide Plan Your Summer Fun Now

May 31, 2025

Summer Arts And Entertainment Guide Plan Your Summer Fun Now

May 31, 2025