Critical Perspectives On AI Learning: Building A Future Of Responsible AI

Bias and Fairness in AI Learning

AI systems learn from data, and if that data reflects existing societal biases, the resulting models can perpetuate and even amplify those inequalities, producing unfair and discriminatory outcomes. For example, facial recognition systems have been shown to be less accurate at identifying individuals with darker skin tones, and loan application algorithms have been criticized for disproportionately denying loans to certain demographic groups. The ethical implications are profound, raising concerns about fairness, justice, and equal opportunity.

- Examples of biased AI systems:
  - Facial recognition systems exhibiting racial bias.
  - Loan application algorithms perpetuating financial inequality.
  - Recruitment tools showing gender bias.

- Techniques for mitigating bias:
  - Data augmentation to increase representation of underrepresented groups.
  - Algorithmic fairness techniques like adversarial debiasing.
  - Careful selection and curation of training datasets.

- Importance of diverse and representative datasets: Ensuring that datasets accurately reflect the diversity of the population is crucial for building fair AI systems. This requires proactive efforts to collect and use data from diverse sources and communities.
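As an illustration of rebalancing, here is a minimal sketch of random oversampling, one of the simplest ways to increase an underrepresented group's share of a training set. It duplicates randomly chosen records from smaller groups until each group matches the largest one. Unlike data augmentation proper, it creates no new examples, so it can encourage a model to overfit the duplicated records. The function name, the `group` field, and the sample records are hypothetical.

```python
import random
from collections import defaultdict

def oversample_by_group(records, group_key, seed=0):
    """Duplicate randomly chosen records so every group has as many rows as the largest group."""
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for rec in records:
        by_group[rec[group_key]].append(rec)

    target = max(len(rows) for rows in by_group.values())
    balanced = []
    for rows in by_group.values():
        balanced.extend(rows)
        # Sample with replacement to fill the gap up to the target size.
        balanced.extend(rng.choices(rows, k=target - len(rows)))
    return balanced

# Hypothetical training records: group "B" is underrepresented.
data = [{"group": "A", "label": 1} for _ in range(6)] + [{"group": "B", "label": 0} for _ in range(2)]
balanced = oversample_by_group(data, "group")
counts = {g: sum(r["group"] == g for r in balanced) for g in ("A", "B")}
print(counts)  # {'A': 6, 'B': 6}
```

Oversampling only changes how often each group appears; it cannot correct labels that are themselves biased, which is why curation and auditing still matter.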

Addressing bias requires more than just technical solutions. Algorithmic accountability and transparency are essential. We need mechanisms to understand how AI systems make decisions and to hold developers accountable for any discriminatory outcomes. The development of fairness-aware algorithms is paramount, demanding a multidisciplinary approach involving computer scientists, ethicists, and social scientists.
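One way to make accountability concrete is to audit outcomes by group. The sketch below computes each group's selection rate (for example, the share of loan applications approved) and the ratio of the lowest rate to the highest. A ratio below 0.8 is often flagged under the "four-fifths rule" from US employee selection guidelines; treat that threshold as a screening heuristic, not a legal or ethical verdict. The function names and decision data are hypothetical.

```python
from collections import Counter

def selection_rates(decisions):
    """Return the fraction of positive outcomes per group from (group, approved) pairs."""
    totals, approved = Counter(), Counter()
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    return 1.0 if highest == 0 else min(rates.values()) / highest

# Hypothetical loan decisions: (applicant group, approved?)
decisions = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'A': 0.8, 'B': 0.5}
print(round(ratio, 3))  # 0.625
print("flag for review" if ratio < 0.8 else "within four-fifths threshold")
```

A single ratio hides a lot (sample sizes, legitimate differences in qualifications, intersecting groups), so it is a starting point for the human review the paragraph above calls for, not a substitute for it.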

Data Privacy and Security in AI Development

The vast amounts of data required to train sophisticated AI models raise significant privacy concerns. AI development often involves collecting, storing, and processing sensitive personal information, which exposes that information to misuse and breaches. Balancing access to data for AI advancement against individual privacy rights requires a careful and responsible approach.

- Regulations like GDPR and CCPA: The EU's General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) impose strict requirements on how personal data can be collected, used, and protected, which significantly affects AI development. Compliance is crucial for responsible AI practice.

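- Data anonymization and differential privacy techniques: These methods aim to protect individual privacy while still allowing data analysis and AI training. Their protection is not absolute: supposedly anonymized records can sometimes be re-identified by linking them with other datasets, and differential privacy trades some accuracy for a mathematically bounded privacy loss. Research into both remains ongoing.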

- Importance of secure data storage and handling: Robust security measures are paramount to prevent data breaches and misuse. This includes encryption, access controls, and regular security audits.
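To make differential privacy less abstract, here is a minimal sketch of the Laplace mechanism for a counting query. Adding or removing one person changes a count by at most 1 (its sensitivity), so adding Laplace noise with scale `sensitivity / epsilon` gives epsilon-differential privacy for that single release; a smaller epsilon means more noise and stronger privacy. To stay within the standard library, the noise is drawn as the difference of two exponential samples, which is Laplace-distributed. The function names and the ages are hypothetical, and this is for illustration only: production systems should rely on a vetted library, because naive floating-point noise can leak information.

```python
import random

def laplace_noise(scale, rng):
    """Draw Laplace(0, scale) noise as the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(values, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy via the Laplace mechanism."""
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1  # one person changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)

# Hypothetical ages in a dataset; query: how many people are over 65?
ages = [23, 71, 45, 67, 88, 34, 52, 69, 19, 77]
rng = random.Random(42)
# The true answer is 5; the released value is 5 plus random noise.
print(private_count(ages, lambda a: a > 65, epsilon=0.5, rng=rng))
```

Each additional query spends more of the privacy budget, so repeated releases over the same data need their epsilons accounted for together.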

The responsible use of data in AI development demands a strong ethical framework. Developers must prioritize data minimization, transparency about data usage, and robust security measures. A balance must be struck between facilitating innovation and protecting fundamental privacy rights.
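Data minimization can be applied at the point where data enters a pipeline. The sketch below keeps only the fields a model actually needs and replaces the direct identifier with a keyed hash (HMAC-SHA256), so records can still be linked without storing the raw ID. The field names, the record, and the key are hypothetical. Note that under GDPR, pseudonymized data of this kind is still personal data: the technique reduces exposure, but it is not anonymization.

```python
import hashlib
import hmac

# Hypothetical key: in practice it would come from a secrets manager, never from source code.
PSEUDONYM_KEY = b"replace-with-a-secret-key"
FIELDS_NEEDED = {"age_band", "region", "outcome"}  # hypothetical fields the model uses

def pseudonymize(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Map an identifier to a stable token that cannot be recomputed without the key."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

def minimize(record: dict) -> dict:
    """Drop every field the model does not need and replace the raw ID with a pseudonym."""
    reduced = {k: v for k, v in record.items() if k in FIELDS_NEEDED}
    reduced["subject_token"] = pseudonymize(record["customer_id"])
    return reduced

raw = {"customer_id": "C-1001", "name": "Jane Doe", "email": "jane@example.com",
       "age_band": "30-39", "region": "North", "outcome": 1}
clean = minimize(raw)
print(sorted(clean))  # ['age_band', 'outcome', 'region', 'subject_token']
```

Using a keyed hash rather than a plain one matters: an unkeyed hash of a short ID can be reversed by simply hashing every possible ID.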

The Impact of AI on Employment and the Economy

AI's capacity to automate tasks has raised concerns about widespread job displacement across many sectors. Although AI is also expected to create new jobs, the transition needs careful management to avoid significant social and economic disruption.

- Jobs most at risk of automation: Routine, repetitive tasks in manufacturing, transportation, and customer service are particularly vulnerable.

- The need for reskilling and upskilling initiatives: Investing in education and training programs is critical to equip workers with the skills needed for the jobs of the future. This requires collaboration between governments, educational institutions, and the private sector.

- Opportunities for creating new jobs in the AI sector: The development, implementation, and maintenance of AI systems will generate numerous new job roles requiring specialized skills.

The economic implications of widespread AI adoption are complex. Proactive measures are needed to address potential job losses and to ensure a fair distribution of the benefits of AI. This includes exploring potential solutions such as universal basic income or other social safety nets to support those affected by automation.

Accountability and Transparency in AI Systems

Understanding how AI systems reach their conclusions is critical for building trust and ensuring accountability. Explainable AI (XAI) aims to make AI decision-making processes more transparent and understandable. However, achieving transparency in complex AI models, like deep neural networks, remains a significant challenge.

- Challenges in achieving transparency in complex AI models: The "black box" nature of many AI systems makes it difficult to trace the logic behind their decisions.

- The need for clear lines of accountability in case of AI errors or harm: Establishing clear responsibilities for the outcomes of AI systems is essential for addressing potential harm.

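- Methods for making AI systems more interpretable: Current approaches include inherently interpretable models, feature-attribution methods such as permutation importance, LIME, and SHAP, and saliency maps for image models. None of them fully explains a complex model, and interpretability remains an active area of research.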
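Permutation feature importance is one model-agnostic way to probe a black box: shuffle a single input feature across the dataset and measure how much accuracy drops. A large drop suggests the model relies on that feature. The sketch below uses a hypothetical toy model and synthetic data; scikit-learn offers a maintained implementation as `sklearn.inspection.permutation_importance`.

```python
import random

def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)

def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled independently."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild rows with only feature j permuted.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical black-box model that, unknown to the auditor, only uses feature 0.
def model(row):
    return int(row[0] > 0.5)

data_rng = random.Random(1)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [model(row) for row in X]
print(permutation_importance(model, X, y))  # feature 0 shows a large drop; feature 1 shows 0.0
```

The method reveals which inputs a model depends on, not why, and it can mislead when features are strongly correlated, which is one reason interpretability is still an open problem.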

Ethical responsibility rests with both developers and users of AI systems. Developers must strive for transparency and build systems that can be audited and understood. Users should be informed about the limitations and potential biases of AI systems. Preventing unintended consequences requires a proactive and collaborative approach.

The Role of Regulation in Promoting Responsible AI

Effective governance and regulation are crucial for guiding the development and deployment of AI responsibly. International collaboration is necessary to establish ethical standards and prevent a regulatory race to the bottom.

- Examples of existing and proposed AI regulations: The EU's AI Act and similar initiatives in other countries represent attempts to establish ethical guidelines and regulatory frameworks.

- The importance of international collaboration on AI governance: Global cooperation is necessary to ensure consistent and effective regulation of AI technologies.

- Balancing innovation with ethical considerations in AI policy: Regulations should promote innovation while safeguarding ethical principles and preventing harm.

Conclusion

This article highlighted critical perspectives on AI learning, emphasizing the urgent need for Responsible AI. Building trustworthy AI requires addressing biases, protecting data privacy, mitigating economic disruption, and ensuring accountability and transparency. Let's work together to foster a future driven by ethical AI development. By prioritizing Responsible AI in research, development, and deployment, we can harness the immense potential of AI while minimizing risks and building a more equitable and prosperous future for all. Join the conversation about Responsible AI and help shape the future of this transformative technology.

Featured Posts

-

New York Citys Wildfire Smoke Event Temperature Drop And Air Quality Impact

May 31, 2025

New York Citys Wildfire Smoke Event Temperature Drop And Air Quality Impact

May 31, 2025 -

Former Nypd Commissioner Bernard Kerik Hospitalized Update On His Condition

May 31, 2025

Former Nypd Commissioner Bernard Kerik Hospitalized Update On His Condition

May 31, 2025 -

Top Seed Zverev Eliminated By Griekspoor At Indian Wells

May 31, 2025

Top Seed Zverev Eliminated By Griekspoor At Indian Wells

May 31, 2025 -

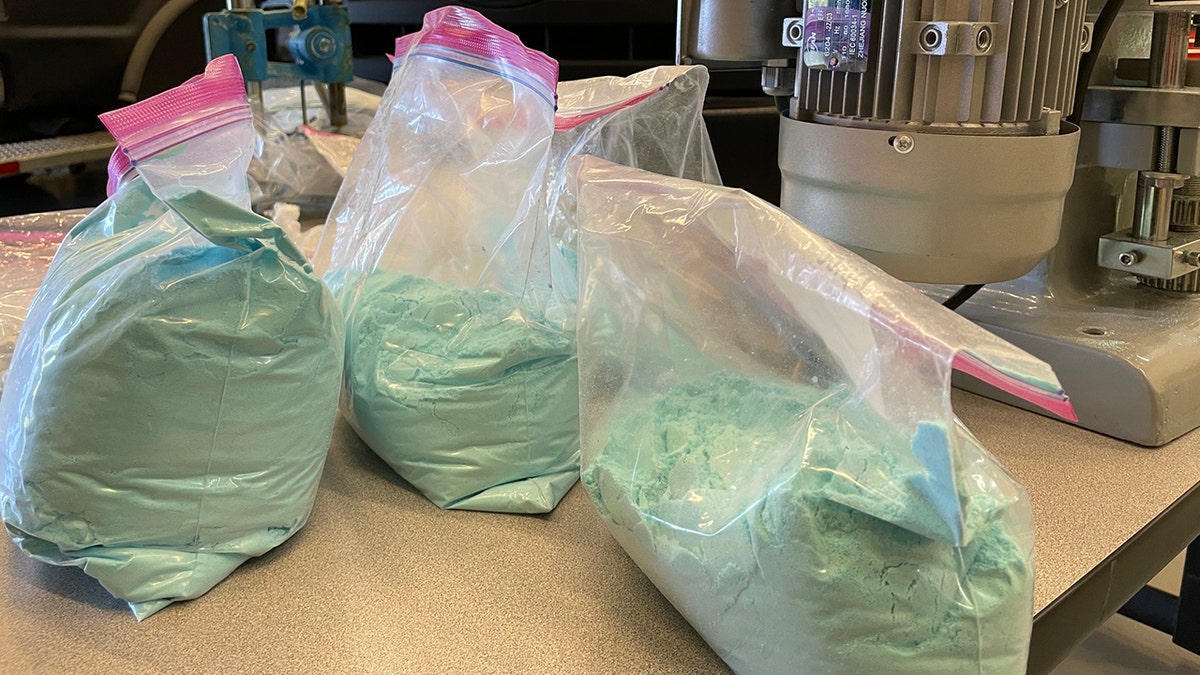

March 26th Report Details High Fentanyl Levels In Princes System

May 31, 2025

March 26th Report Details High Fentanyl Levels In Princes System

May 31, 2025 -

Munguias Revenge Dominant Victory Over Surace In Rematch

May 31, 2025

Munguias Revenge Dominant Victory Over Surace In Rematch

May 31, 2025