ChatGPT And OpenAI Face FTC Investigation: Concerns And Future Outlook

Data Privacy Concerns and ChatGPT's Data Handling

OpenAI's data practices around ChatGPT raise significant privacy questions. The underlying models are trained on vast amounts of text collected from the internet, which can include personal information about people who never agreed to its use. User conversations are a second source of concern: they may be stored and, depending on account settings, used to improve the models, so any data breach could expose sensitive information to malicious actors. Questions about whether OpenAI's security measures are robust enough add to these concerns. OpenAI's data usage policies have also not always been transparent, leaving users uncertain about how their information is used and shared, and this lack of transparency is a primary concern for regulators. Laws such as the European Union's General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) already require greater transparency and user control over personal data, although they are enforced by European data protection authorities and California regulators rather than by the FTC. The FTC's own inquiry centers on whether OpenAI's practices, including how it handles and secures personal data, are unfair or deceptive under Section 5 of the FTC Act.

- Lack of transparency in data usage: Users often lack a clear understanding of how their interactions with ChatGPT contribute to the model's training data.

- Potential for sensitive data exposure: The model's training data may inadvertently include sensitive personal information, putting individuals at risk.

- Concerns regarding children's data privacy: The use of ChatGPT by minors raises specific concerns about the protection of their data under laws such as the Children's Online Privacy Protection Act (COPPA), which applies to children under 13.

Algorithmic Bias and Fairness in ChatGPT's Outputs

AI models like ChatGPT are trained on massive datasets, and if those datasets reflect existing societal biases, the model can inherit and even amplify them, producing unfair or discriminatory outputs. ChatGPT's responses might, for instance, exhibit gender bias by consistently portraying women in stereotypical roles, or racial bias by generating content that reinforces harmful stereotypes. Because these systems operate at scale, such biases can perpetuate inequality and discrimination well beyond a single conversation. One question the FTC investigation raises is whether OpenAI has done enough to detect and mitigate these biases so that its technology produces fair and equitable outcomes.

- Gender bias in responses: ChatGPT might generate responses that reinforce traditional gender roles or stereotypes.

- Racial bias in generated content: The model could produce outputs that reflect and amplify existing racial biases.

- Bias in career and professional suggestions: Recommendations about career paths or professional opportunities may reflect existing biases, potentially limiting opportunities for certain groups.

Consumer Protection Issues and Misinformation Spread by ChatGPT

ChatGPT's ability to generate human-quality text opens the door to malicious use. It could be used to impersonate individuals, produce material for scams and deepfakes, or spread misinformation at scale, all of which pose real risks to consumers. Whether OpenAI's safeguards against such misuse are sufficient is a key concern for the FTC. The commission's mandate is to protect consumers from deceptive or unfair practices, and the potential for ChatGPT to be used for harmful purposes falls squarely within its purview.

- Potential for deepfakes and impersonation: ChatGPT could be used to write convincing text that impersonates real people, such as fabricated statements, messages, or scripts for fake videos and audio, damaging reputations and spreading misinformation.

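- Generation of misleading or false information: The model can be deliberately used to generate convincing false narratives and propaganda, and it can also produce inaccurate statements on its own, including false claims about real people.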

- Lack of accountability for harmful outputs: Determining responsibility for harmful outputs generated by ChatGPT presents significant legal and ethical challenges.

The Future Outlook for OpenAI and AI Regulation

The FTC investigation could significantly shape OpenAI's future development and operations. Its outcome may influence the company's data handling practices, its approach to mitigating algorithmic bias, and its safety protocols. It is also likely to set a precedent for AI regulation more broadly, influencing how other AI companies develop and deploy their technologies. More generally, the investigation underscores the urgent need for stronger regulatory frameworks that encourage responsible innovation while mitigating potential harms.

- Increased transparency and accountability measures: OpenAI may be required to provide greater transparency regarding its data collection and usage practices.

- Development of more robust safety protocols: The company will likely need to implement stronger mechanisms to prevent the misuse of ChatGPT.

- Potential changes in model training methodologies: OpenAI may need to revise its model training methods to mitigate bias and ensure fairness.

Conclusion: The FTC Investigation and the Future of ChatGPT

The FTC investigation into OpenAI and ChatGPT highlights critical concerns about data privacy, algorithmic bias, and consumer protection in the rapidly evolving field of AI. Responsible AI development and strong regulatory frameworks are now more important than ever. OpenAI and the wider AI industry must prioritize transparency, accountability, and ethics in how they build and deploy technologies like ChatGPT, and the future of ChatGPT and similar systems hinges on addressing these concerns effectively. Stay informed as the investigation unfolds, since its outcome could shape both OpenAI's regulatory obligations and broader standards for responsible AI development. The future of AI depends on our collective commitment to responsible innovation.

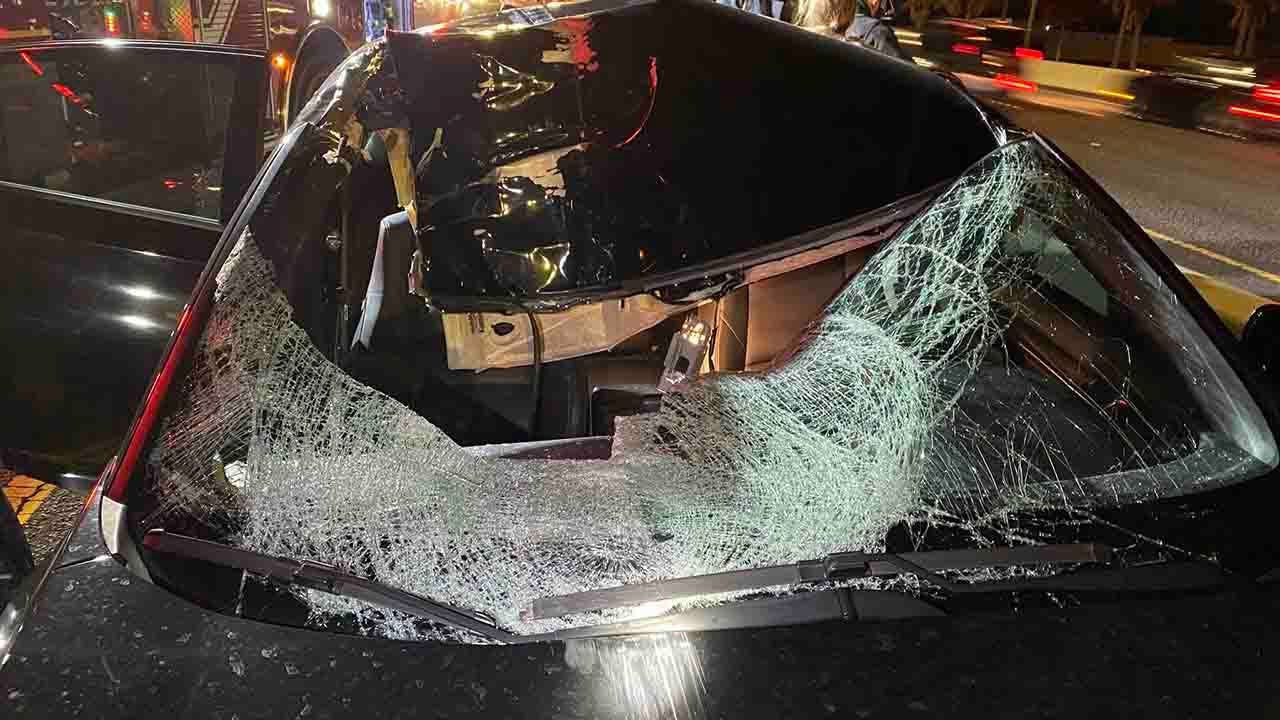

One Killed Several Hurt In Clearwater Ferry Collision

One Killed Several Hurt In Clearwater Ferry Collision

Thunder Over Louisville Fireworks Show Cancellation Announcement Due To Flooding

Thunder Over Louisville Fireworks Show Cancellation Announcement Due To Flooding

Nyt Spelling Bee February 28 2025 Solutions Answers And Pangram

Nyt Spelling Bee February 28 2025 Solutions Answers And Pangram

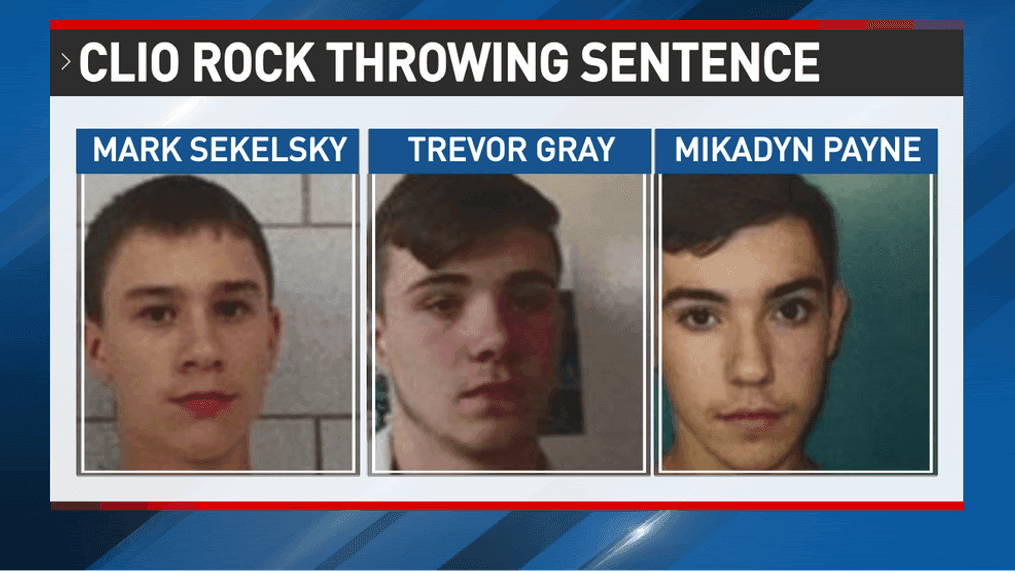

Murder Conviction After Deadly Teen Rock Throwing Game

Murder Conviction After Deadly Teen Rock Throwing Game

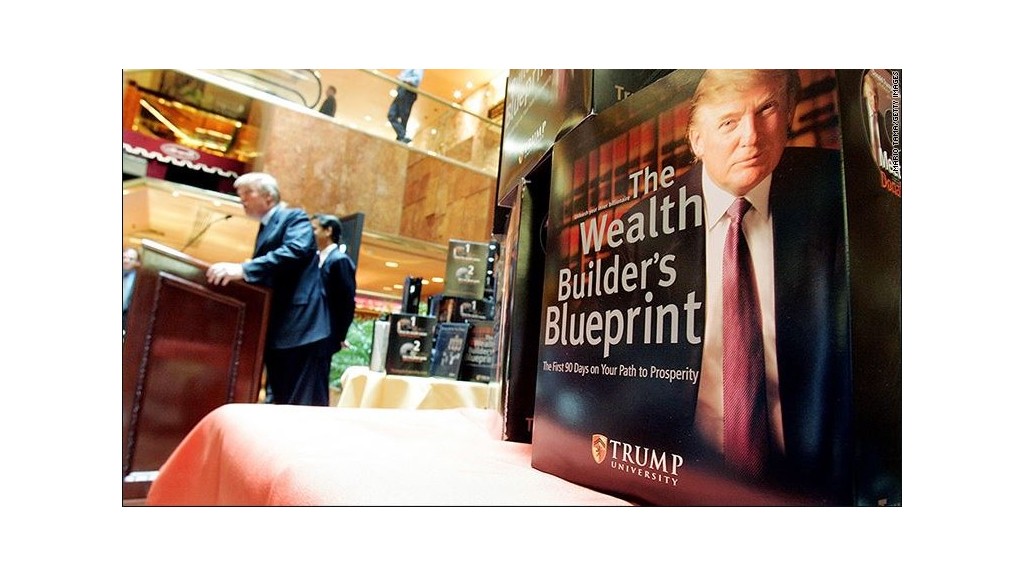

Prestigious Universities Create Private Network To Resist Trump

Prestigious Universities Create Private Network To Resist Trump