Are Tech Companies Responsible When Algorithms Radicalize Mass Shooters?

Table of Contents

- The Role of Algorithms in Online Radicalization

- Echo Chambers and Filter Bubbles

- Algorithmic Amplification of Hate Speech

- Legal and Ethical Responsibilities of Tech Companies

- Section 230 and its Limitations

- Ethical Considerations and Corporate Social Responsibility

- The Difficulty of Proving Causation

- Correlation vs. Causation

- The Importance of Holistic Approaches

- Conclusion

The Role of Algorithms in Online Radicalization

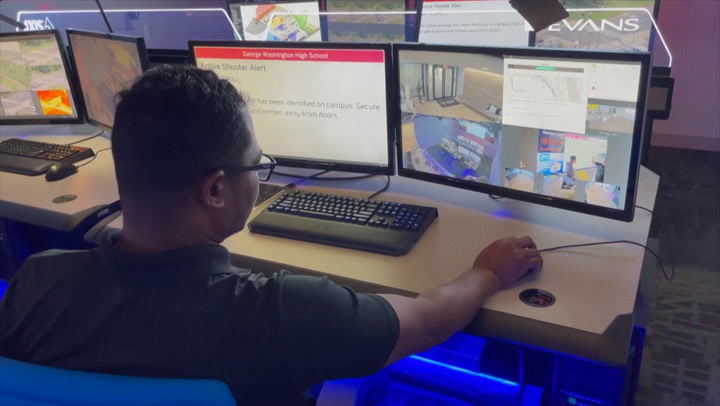

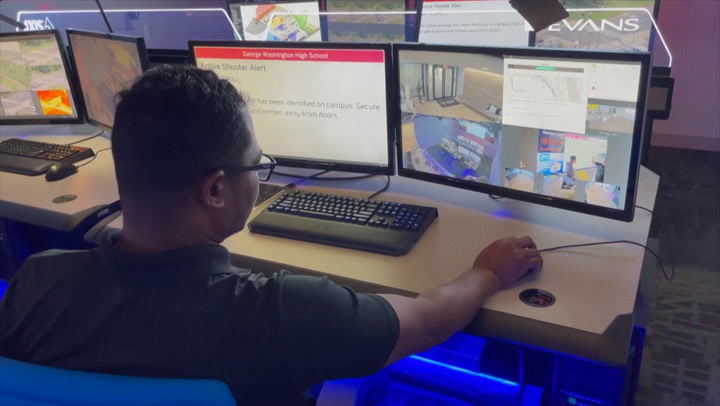

Algorithms, the invisible engines driving our online experiences, play a significant role in shaping what we see and how we interact with information online. This influence can be profoundly dangerous when it comes to extremist content.

Echo Chambers and Filter Bubbles

Algorithms, designed to maximize user engagement, often create echo chambers and filter bubbles. These personalized online environments reinforce pre-existing beliefs, limiting exposure to diverse perspectives.

- Examples: YouTube's recommendation system suggesting increasingly extreme videos; Facebook's newsfeed prioritizing content from like-minded groups; Twitter's algorithmic timeline reinforcing partisan viewpoints.

- Details: These systems work by tracking user behavior: likes, shares, comments, and time spent on specific content. This data is then used to predict what content will keep users engaged, which can produce a skewed and potentially dangerous information diet. A body of research links echo chambers to the polarization of opinions, which may leave users more susceptible to extremist views.
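The engagement-driven ranking described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not any platform's actual system: the signal weights, the `Item` type, and the `topic_affinity` and `rank_feed` helpers are all hypothetical, and real recommenders are far more elaborate.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical weights for each tracked signal; real platforms do not publish theirs.
SIGNAL_WEIGHTS = {"like": 1.0, "comment": 2.0, "share": 3.0}
SECONDS_WEIGHT = 0.01  # assumed weight per second spent on an item


@dataclass(frozen=True)
class Item:
    item_id: str
    topic: str


def topic_affinity(history):
    """Sum weighted engagement per topic from (topic, signal, seconds) events."""
    affinity = Counter()
    for topic, signal, seconds in history:
        affinity[topic] += SIGNAL_WEIGHTS.get(signal, 0.0) + SECONDS_WEIGHT * seconds
    return affinity


def rank_feed(candidates, history, k=3):
    """Return the k candidates with the highest predicted engagement."""
    affinity = topic_affinity(history)
    # sorted() is stable, so tied items keep their original candidate order.
    return sorted(candidates, key=lambda item: affinity[item.topic], reverse=True)[:k]


# A user who engaged mostly with one political topic and briefly with cooking.
history = [
    ("politics_a", "like", 120),
    ("politics_a", "share", 300),
    ("cooking", "like", 30),
]
candidates = [
    Item("c1", "cooking"),
    Item("p1", "politics_a"),
    Item("s1", "sports"),
    Item("p2", "politics_a"),
    Item("p3", "politics_a"),
]

feed = rank_feed(candidates, history)
print([item.topic for item in feed])
```

In this toy setup every slot in the feed goes to the topic the user already engaged with most, and the unfamiliar topic never appears. That narrowing is the filter-bubble effect in miniature.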

Algorithmic Amplification of Hate Speech

Even when designed with good intentions, algorithms can amplify hate speech and violent extremist content, whether inadvertently or as a foreseeable result of optimizing for engagement. The sheer volume and speed of online information make effective content moderation extremely challenging.

- Examples: The rapid spread of conspiracy theories and calls to violence on social media; the difficulty of identifying and removing manipulated media (deepfakes) that incite hatred; algorithms prioritizing engagement over accuracy, leading to the proliferation of harmful content.

- Details: Current AI-based content moderation tools struggle to keep pace with the creativity of those who produce hate speech and extremist materials. The focus on engagement metrics can also incentivize platforms to tolerate harmful content if it drives traffic and ad revenue.

Legal and Ethical Responsibilities of Tech Companies

The question of tech company accountability is complex and multifaceted, involving both legal and ethical considerations.

Section 230 and its Limitations

Section 230 of the Communications Decency Act generally shields online platforms from liability for content posted by their users. However, whether that protection extends to a platform's own algorithmic recommendations is fiercely debated.

- Arguments for reform: Critics argue that Section 230 provides tech companies with excessive protection, allowing them to profit from harmful content without taking sufficient responsibility for its moderation. They contend that the algorithms themselves contribute to the spread of extremism, thus requiring a reassessment of the law.

- Arguments against reform: Conversely, supporters of Section 230 warn that altering the law could stifle free speech and innovation, leading to a chilling effect on online expression. They argue that platforms already take significant steps to moderate content, but the scale of the problem makes complete eradication extremely difficult.

- Potential legal liability: The legal landscape is evolving, with increasing pressure on tech companies to demonstrate responsibility for the impact of their algorithms. Future lawsuits could redefine the extent of their liability for content related to algorithmic radicalization.

Ethical Considerations and Corporate Social Responsibility

Beyond legal obligations, tech companies face significant ethical responsibilities. Their algorithms have the power to shape societies, and ignoring the potential for harm is ethically indefensible.

- Proactive measures: Tech companies should invest heavily in developing more sophisticated content moderation tools, improving transparency in algorithm design, and proactively identifying and addressing patterns of online radicalization.

- Transparency and accountability: Openness about how algorithms work and their impact on content distribution is crucial. This allows for better scrutiny and accountability.

- Investment in research: More research is needed to understand the complex interplay between algorithms, online behavior, and the radicalization process. Tech companies should fund this research and share their findings openly.

The Difficulty of Proving Causation

One of the major hurdles in assigning responsibility for algorithmic radicalization is the difficulty of proving direct causation.

Correlation vs. Causation

While exposure to extremist content online often appears alongside violent acts, proving that one directly causes the other is incredibly complex.

- Confounding factors: Many factors contribute to radicalization, including pre-existing mental health issues, social isolation, and exposure to real-world extremist groups. It’s challenging to isolate the influence of algorithms.

- Complexity of human behavior: Human decision-making is multifaceted, and reducing radicalization to a simple algorithm-driven process ignores the complexities of human psychology and societal influences.

- Limitations of research: The ethical and practical challenges of conducting research in this area hamper our understanding of the precise relationship between algorithms and violence.
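A small worked example may help show why confounding factors make causation so hard to establish. The counts below are purely synthetic and chosen for illustration; they are not real data, and the `isolated` stratum, the `risk`, and the `risk_ratio` helper are hypothetical names. The sketch assumes a single confounder (social isolation) that raises both exposure and the outcome, while exposure itself has no effect.

```python
# Purely synthetic counts chosen for illustration; they are NOT real data.
# Each entry: (stratum, exposed?) -> (people, people_with_outcome)
COUNTS = {
    ("isolated", True): (800, 80),
    ("isolated", False): (200, 20),
    ("not_isolated", True): (200, 2),
    ("not_isolated", False): (800, 8),
}


def risk(cells):
    """Share of people with the outcome across the given (people, cases) cells."""
    people = sum(n for n, _ in cells)
    cases = sum(c for _, c in cells)
    return cases / people


def risk_ratio(counts, stratum=None):
    """Risk among the exposed divided by risk among the unexposed.

    With stratum=None all strata are pooled; otherwise only that stratum is used.
    """
    exposed = [v for (s, e), v in counts.items() if e and stratum in (None, s)]
    unexposed = [v for (s, e), v in counts.items() if not e and stratum in (None, s)]
    return risk(exposed) / risk(unexposed)


pooled = risk_ratio(COUNTS)
by_stratum = {s: risk_ratio(COUNTS, s) for s in ("isolated", "not_isolated")}

print(round(pooled, 2))  # 2.93: exposure looks strongly linked to the outcome
print(by_stratum)        # 1.0 in each stratum: no link once isolation is held fixed
```

In the pooled numbers the exposed group appears almost three times as likely to show the outcome, yet within each stratum the risk is identical. Real cases are messier, since algorithms may well have some effect of their own, but the example shows how an unmeasured factor can produce a strong association by itself.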

The Importance of Holistic Approaches

Addressing algorithmic radicalization requires a multi-pronged approach that goes beyond simply blaming algorithms.

- Mental health services: Providing accessible mental healthcare is crucial in addressing underlying vulnerabilities that make individuals susceptible to extremist ideologies.

- Community support: Strengthening community bonds and promoting social inclusion can help mitigate the isolating factors that drive individuals towards extremism.

- Media literacy education: Equipping individuals with critical thinking skills and media literacy helps them discern credible information from propaganda and misinformation.

- Government regulation: Well-considered regulations can help hold tech companies accountable while protecting free speech.

Conclusion

The connection between algorithms, online radicalization, and mass shootings is undeniably complex. While proving direct causation remains challenging, the evidence strongly suggests that algorithms can contribute to the spread of extremist ideologies and create environments conducive to radicalization. Tech companies have a moral and potentially legal responsibility to mitigate this risk through improved content moderation, greater transparency, and proactive efforts to combat online extremism. Algorithmic radicalization demands continued research, open discussion, and a collaborative approach involving tech companies, policymakers, and civil society. Stay informed, advocate for change, and demand accountability from those who shape our online world.
