Algorithm-Driven Radicalization: Holding Tech Companies Accountable For Mass Shootings

The Amplifying Effect of Algorithmic Recommendation Systems

Algorithmic recommendation systems, the largely invisible engines behind social media and video-sharing platforms, shape much of what users see online. Because these systems are designed to maximize user engagement, they often inadvertently (and sometimes intentionally) promote extremist content through personalized feeds. When engagement with that content prompts the system to recommend more of it, the result can be a self-reinforcing cycle of radicalization.

- Filter bubbles and echo chambers: Algorithms reinforce pre-existing biases by showing users mainly content that aligns with their past interactions. This narrows exposure to diverse viewpoints and creates echo chambers in which extremist ideologies are amplified without counter-narratives, a dynamic that contributes significantly to online radicalization.

- Radicalization pipelines: Algorithms can subtly guide users down a "radicalization pipeline," starting with seemingly benign content and gradually suggesting more extreme material. Because each step is only slightly more extreme than the last, individuals can move toward violence without being consciously aware of the trajectory.

- Evidence of algorithmic amplification on specific platforms: Because recommendation algorithms are usually proprietary and undisclosed, their effects must largely be inferred from the outside. Even so, research points to several platforms where extremist content has proliferated through algorithmic amplification, and investigations often find chains of suggested videos or posts that move from general discontent to increasingly violent rhetoric.

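- The use of A/B testing to optimize engagement: Tech companies frequently use A/B testing to optimize user engagement. In an A/B test, different versions of a feature or ranking system are shown to separate groups of users, and the version that generates more clicks, views, or watch time is typically adopted. Because the metric measures engagement rather than harm, this process can unintentionally favor the most engaging content even when that content is harmful or extremist. The pursuit of clicks and views often overshadows the consequences of promoting dangerous ideologies.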

The Role of Data Privacy and Targeted Advertising in Fueling Radicalization

Data collection, and the targeted advertising it enables, is another crucial dimension of algorithm-driven radicalization. The vast amounts of personal data that tech companies collect can be exploited to reach and influence individuals who are vulnerable to extremist ideologies.

- Microtargeting: Advertising tools let buyers select audiences by interests, demographics, and online behavior. As a result, propaganda and recruitment materials can be delivered precisely to individuals already predisposed to an extremist message, bypassing the editorial gatekeeping of traditional media.

- Data breaches and the misuse of personal information: Extremist groups can use personal information exposed in data breaches to identify individuals and target them for recruitment or manipulation. This underscores the risks created by lax data security practices.

- The lack of transparency in data collection and algorithmic decision-making: Because these processes are opaque, it is difficult for researchers, regulators, and the public to determine how and why particular individuals are shown extremist content. This lack of transparency hinders efforts to address the problem effectively.

- The ethics of using data to target individuals with potentially harmful content: Using personal data to deliver content that could incite violence raises profound ethical concerns. It also sharpens the question of what responsibility tech companies bear for protecting users from harm.

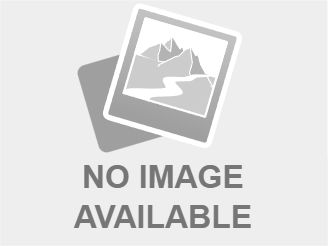

Legal and Regulatory Challenges in Holding Tech Companies Accountable

Holding tech companies accountable for algorithm-driven radicalization presents significant legal and regulatory challenges. Existing legal frameworks are often ill-equipped to address the complexities of this issue.

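- Section 230 of the Communications Decency Act and its limitations: Section 230 generally shields online platforms from liability for content posted by their users, which makes it difficult to hold them responsible for extremist material. Whether that protection also covers a platform's own algorithmic recommendations remains unsettled. The U.S. Supreme Court took up the question in Gonzalez v. Google (2023) but resolved the case without deciding it. Reform proposals seek to clarify, and potentially narrow, the law's scope with respect to harmful content.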

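- The challenges of proving direct causation between algorithmic exposure and violent acts: A person's path to violence typically involves many factors. Establishing that exposure to algorithmically recommended extremist content caused a specific attack is therefore extremely difficult. Because plaintiffs generally must show that a defendant's conduct was a proximate cause of their injury, this evidentiary gap makes legal action challenging.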

- International legal considerations and the complexities of regulating global tech companies: Major platforms operate across many jurisdictions with different speech laws and liability rules, which complicates regulation. Harmonizing international laws and regulations to address this issue effectively remains a substantial hurdle.

- Potential legal strategies for holding tech companies accountable: These include civil lawsuits alleging negligence or complicity in spreading extremist content. They also include stronger regulatory frameworks focused on algorithmic transparency and content moderation.

The Need for Enhanced Content Moderation and Transparency

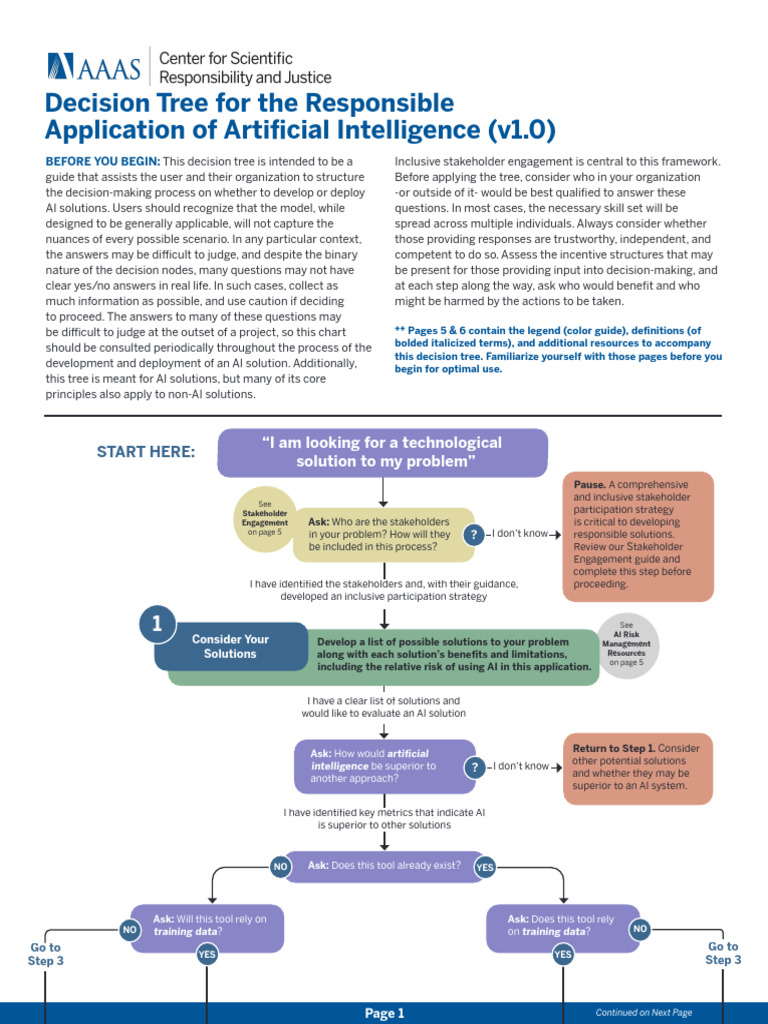

Addressing algorithm-driven radicalization requires a fundamental shift in how tech companies approach content moderation and algorithmic transparency.

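- Investing in human moderators and AI-based solutions for content detection and removal: Detecting and removing extremist content effectively requires greater investment in human moderators, supported by sophisticated AI-based tools. Automated systems can screen content at a scale no human team can match, while human reviewers are better at judging context and borderline cases.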

- Improving transparency in algorithmic decision-making to allow greater scrutiny and accountability: Tech companies need to disclose more about how their algorithms work and the criteria they use to recommend content. Without that information, meaningful accountability and outside oversight are not possible.

- Industry-wide standards and best practices for content moderation: Developing and adopting shared standards and best practices would make content moderation more consistent and effective across platforms.

- The role of civil society organizations and researchers: Civil society organizations and researchers monitor and report on algorithmic bias and harmful content. This work helps hold tech companies accountable and informs public discourse.

Conclusion

The link between algorithm-driven radicalization and mass shootings is complex and deeply concerning. Tech companies are not solely responsible, but their algorithms play a significant role in amplifying extremist views and can contribute to violence. Holding these companies accountable requires a multifaceted approach: stronger legal frameworks, better content moderation practices, and greater transparency. Ignoring the role of algorithms would be a dangerous oversight. We must demand that tech companies take greater responsibility for mitigating algorithm-driven radicalization and fostering healthier online environments. We need to act now to prevent future tragedies.
