AI Therapy And The Surveillance State: Privacy Concerns And Ethical Dilemmas


Data Security and Privacy Violations in AI Therapy

AI therapy platforms collect vast amounts of sensitive personal data, including detailed mental health histories, personal experiences, and even location data. This information is extremely valuable, but also highly vulnerable: data breaches, unauthorized access, and misuse all pose significant risks. Storing and transmitting this data, and sharing it with third parties, introduce further vulnerabilities. Data protection regulations such as GDPR and HIPAA aim to address these concerns, but their effectiveness is constantly being tested as AI evolves. HIPAA, for example, applies to healthcare providers, health plans, and their business associates, so many direct-to-consumer mental health apps fall outside its scope.

- Data encryption weaknesses: Some platforms may not use robust encryption for data at rest and in transit, leaving sensitive information susceptible to hacking.

- Lack of transparency in data handling practices: Users often lack a clear understanding of how their data is collected, used, and protected.

- Potential for data misuse by employers or insurance companies: Access to mental health data could lead to discrimination in employment or insurance coverage.

- The impact of data breaches on individuals' mental well-being: The emotional distress caused by a data breach can exacerbate existing mental health conditions.

Algorithmic Bias and Discrimination in AI Therapy

AI algorithms are trained on data, and if that data reflects existing societal biases, the algorithms can perpetuate and even amplify those biases. This can lead to unfair or discriminatory outcomes for certain groups in access to care, diagnosis, and treatment. Algorithmic transparency and accountability are essential to mitigating this risk.

- Algorithmic bias in AI-powered diagnostic tools: A model trained mostly on data from one population might misdiagnose or underdiagnose mental health conditions in demographic groups that are underrepresented in its training data.

- The impact of biased algorithms on access to care for marginalized communities: Biased systems can lead to unequal access to crucial mental healthcare resources for vulnerable populations.

- The challenges of detecting and mitigating algorithmic bias: Identifying and correcting bias in complex AI algorithms is a significant technical and ethical challenge.

Informed Consent and the Limitations of AI Therapists

Informed consent, a cornerstone of ethical healthcare, presents unique challenges in the context of AI therapy. Can individuals truly understand the implications of sharing intimate details with an AI? Furthermore, AI therapists, however sophisticated, are limited in their ability to understand and respond to the nuances of human emotion and complex mental health issues. Human oversight is therefore crucial to prevent misguided advice and potential harm.

- Difficulties in obtaining truly informed consent from vulnerable individuals: Individuals experiencing severe mental health crises may not have the capacity to provide informed consent.

- The risk of over-reliance on AI therapists: Individuals might forgo necessary human interaction, potentially hindering their recovery.

- The potential for AI therapists to exacerbate existing mental health issues: Inaccurate or inappropriate responses from an AI could worsen a person's mental state.

- The need for clear guidelines on the use of AI in mental healthcare: Establishing robust ethical frameworks and regulations is essential.

Surveillance and the Erosion of Patient Confidentiality

The potential for AI therapy platforms to be used for surveillance by governments or private entities is deeply concerning. Data mining and profiling of users' mental health information raise significant ethical questions about privacy and freedom of thought. The blurring line between therapy and surveillance creates a chilling effect on the open and honest communication that is essential for effective treatment.

- Data collection for law enforcement purposes: Access to sensitive mental health data could be misused for surveillance and profiling by law enforcement agencies.

- The potential for employer surveillance: Employers might use AI therapy data to monitor employees' mental health and potentially discriminate against those with mental health conditions.

- The blurring of boundaries between therapy and surveillance: Without clear boundaries, users cannot trust that what they share stays within the therapeutic relationship.

- The chilling effect on open communication in therapy sessions: Fear of surveillance could prevent individuals from honestly disclosing their thoughts and feelings.

Conclusion: The Future of AI Therapy and Protecting Patient Privacy

AI therapy holds immense potential to revolutionize mental healthcare access and affordability. However, the ethical dilemmas and privacy concerns surrounding data security, algorithmic bias, informed consent, and surveillance cannot be ignored. Safeguarding patient data and ensuring the responsible development and deployment of AI-powered mental healthcare tools are paramount. We need stronger regulation, greater transparency, and clear ethical guidelines to mitigate the risks while harnessing the benefits of AI therapy. Learn more about the ethical implications of AI therapy and advocate for better data privacy protections. Engage in informed discussions about AI therapy and the surveillance state: the future of mental healthcare depends on it.

Featured Posts

-

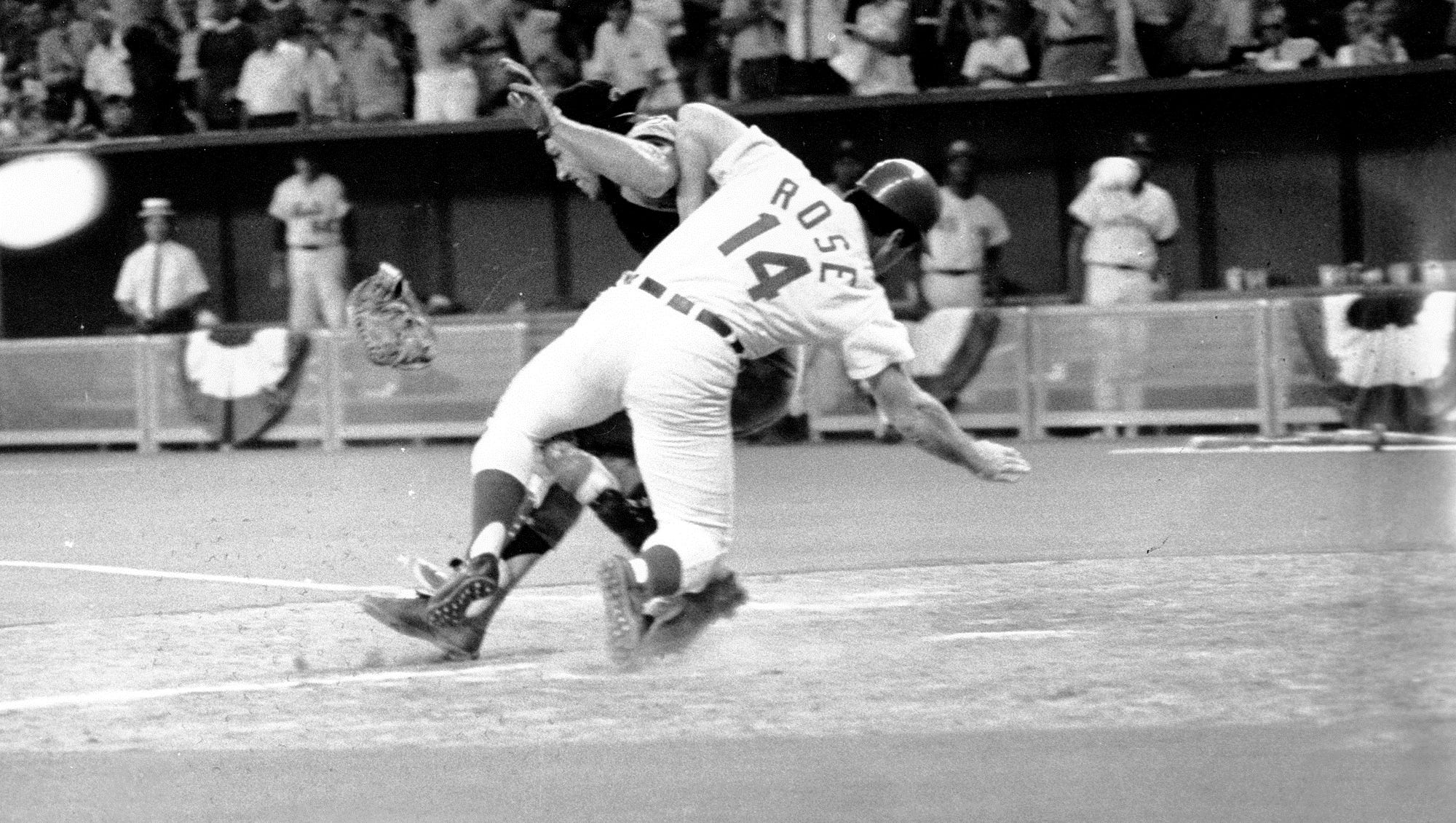

Hyeseong Kim Called Up What To Expect From The Dodgers Top Prospect

May 16, 2025

Hyeseong Kim Called Up What To Expect From The Dodgers Top Prospect

May 16, 2025 -

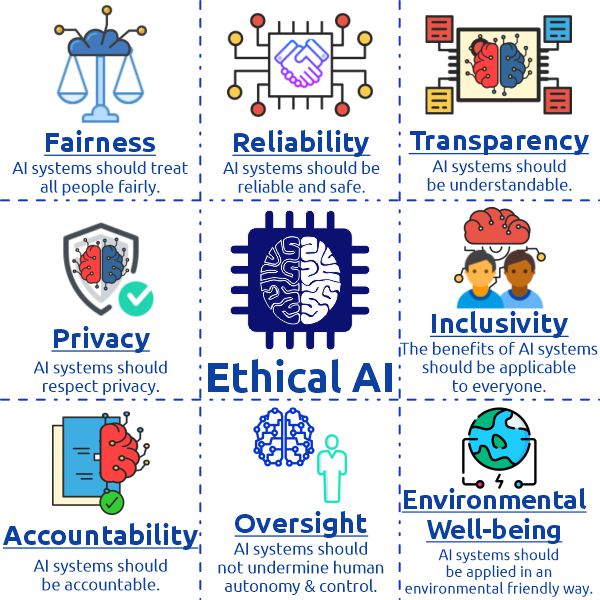

Congos Cobalt Export Policy Shift Implications For The Global Market

May 16, 2025

Congos Cobalt Export Policy Shift Implications For The Global Market

May 16, 2025 -

26 Eama Tfsl Bynhma Tfasyl Elaqt Twm Krwz Wana Dy Armas

May 16, 2025

26 Eama Tfsl Bynhma Tfasyl Elaqt Twm Krwz Wana Dy Armas

May 16, 2025 -

Ayesha Howard Raises Daughter With Anthony Edwards In Unique Living Situation

May 16, 2025

Ayesha Howard Raises Daughter With Anthony Edwards In Unique Living Situation

May 16, 2025 -

Mlb All Star Explains Why He Detested The Torpedo Bat

May 16, 2025

Mlb All Star Explains Why He Detested The Torpedo Bat

May 16, 2025

Latest Posts

-

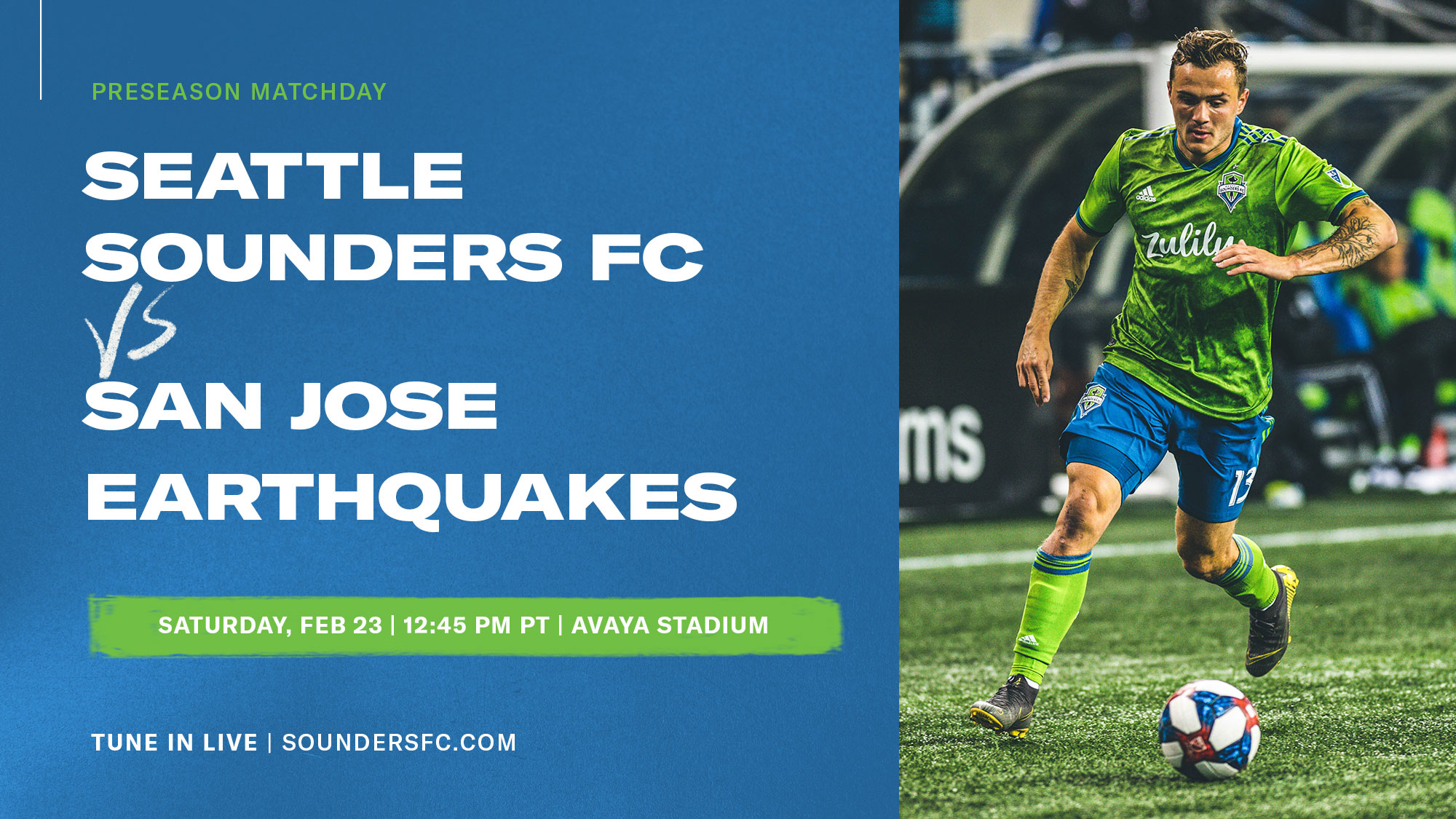

Mora Scores But Timbers Outmatched In 4 1 Loss To Earthquakes

May 16, 2025

Mora Scores But Timbers Outmatched In 4 1 Loss To Earthquakes

May 16, 2025 -

Earthquakes Dominant 4 1 Victory Over Portland Timbers

May 16, 2025

Earthquakes Dominant 4 1 Victory Over Portland Timbers

May 16, 2025 -

Everything You Need To Know Seattle Sounders San Jose Earthquakes

May 16, 2025

Everything You Need To Know Seattle Sounders San Jose Earthquakes

May 16, 2025 -

S Jv Sea Match Preview What To Expect When The Sounders Visit San Jose

May 16, 2025

S Jv Sea Match Preview What To Expect When The Sounders Visit San Jose

May 16, 2025 -

Pre Game Analysis San Jose Earthquakes Opposition Scouting Report

May 16, 2025

Pre Game Analysis San Jose Earthquakes Opposition Scouting Report

May 16, 2025