AI Therapy And The Surveillance State: A Critical Examination

The Allure of AI in Mental Healthcare

AI has clear appeal for mental healthcare: it could widen access to care and tailor treatment to the individual.

Accessibility and Affordability

AI therapy apps offer convenient and cost-effective access to mental healthcare, which is particularly beneficial for underserved communities.

- Reduced geographical barriers: Individuals in remote areas or those with limited mobility can access therapy regardless of location.

- Lower cost compared to traditional therapy: AI-driven platforms often cost significantly less than in-person sessions with a therapist, making mental healthcare affordable for a wider population.

- Increased availability of mental health resources: AI tools can supplement the work of human therapists, allowing them to handle a larger caseload and reach more people in need. This is particularly crucial in areas facing therapist shortages.

Personalized Treatment Approaches

AI algorithms can analyze large volumes of user data to tailor treatment plans, which may lead to more effective outcomes. Personalization is often cited as a key advantage of AI-powered therapy.

- Customized interventions based on individual needs: AI can adapt treatment strategies based on a user's specific symptoms, progress, and responses.

- Data-driven insights for better treatment strategies: By analyzing patterns and trends in user data, AI can help therapists identify effective interventions and adjust treatment plans accordingly.

- Potential for early detection of mental health issues: AI algorithms may be able to identify early warning signs of mental health issues, allowing for prompt intervention and prevention.

The Surveillance State Concerns

While the potential benefits are real, so is the risk that AI therapy could contribute to a surveillance state. That risk stems mainly from the extensive data these platforms collect and the potential for that data to be misused.

Data Privacy and Security

The collection and storage of sensitive personal data by AI therapy platforms raise significant privacy concerns.

- Risk of data breaches exposing sensitive personal information: Large datasets containing intimate details about users' mental health are attractive targets for cyberattacks.

- Lack of transparency regarding data usage and storage practices: Many AI therapy apps do not clearly disclose how user data is used, stored, and protected.

- Potential for misuse of data by third parties: User data could be sold to, or misused by, third-party companies without the user's consent.

Algorithmic Bias and Discrimination

AI algorithms are trained on data, and if this data reflects existing societal biases, the algorithms may perpetuate and amplify these biases.

- Potential for biased diagnoses and treatment plans: An algorithm trained on biased data may misdiagnose or recommend inappropriate treatment for certain groups.

- Disproportionate impact on marginalized communities: Algorithmic bias can disproportionately affect already marginalized communities, exacerbating existing inequalities in mental healthcare access.

- Lack of accountability for algorithmic biases: It can be difficult to identify and hold accountable those responsible for biased algorithms.

Erosion of Patient Confidentiality

The constant monitoring and data collection inherent in AI therapy could erode traditional therapist-patient confidentiality.

- Potential for data to be used against individuals: Data collected during therapy sessions could potentially be used against individuals in legal or other contexts.

- Reduced trust in the therapeutic relationship: Concerns about data privacy could hinder open communication and reduce trust between patients and AI-powered systems.

- Impact on open communication during therapy sessions: Knowing that their words and emotions are being recorded and analyzed could inhibit self-disclosure, which is crucial for effective therapy.

Mitigating the Risks: Balancing Innovation and Ethical Considerations

Addressing the ethical concerns surrounding AI therapy requires a multi-pronged approach.

Robust Data Privacy Regulations

Implementing stricter regulations and guidelines around the collection, storage, and use of data in AI therapy is crucial. These regulations should be transparent and enforceable.

Transparency and User Control

Users should have a clear understanding of how their data is used and be given meaningful control over their data. This includes the ability to access, correct, and delete their data.

Algorithmic Auditing and Bias Mitigation

Regular auditing of algorithms to identify and address biases is vital to ensure fair and equitable access to mental healthcare. This requires collaboration between developers, ethicists, and mental health professionals.

Ethical Guidelines for AI Therapy

Developing a comprehensive ethical framework for the development and deployment of AI therapy systems is essential. This framework should prioritize user privacy, data security, and algorithmic fairness.

Conclusion

AI therapy holds transformative potential for mental healthcare, offering increased accessibility and personalized treatment. However, integrating AI into such a sensitive area demands careful attention to the ethical implications, particularly data privacy and surveillance. Balancing innovation with responsible development is paramount. Addressing algorithmic bias, data security, and patient confidentiality through robust regulations, transparency, and ethical guidelines is crucial if AI therapy is to serve as a force for good rather than contribute to a surveillance state. We must keep critically discussing how AI therapy intersects with state and corporate surveillance, and foster a responsible, ethical approach to this emerging technology. The future of AI in mental healthcare depends on our commitment to protecting individual rights and ensuring equitable access to care.

Featured Posts

-

Report Hyeseong Kim Former Kbo Star Joins The Los Angeles Dodgers

May 15, 2025

Report Hyeseong Kim Former Kbo Star Joins The Los Angeles Dodgers

May 15, 2025 -

Atlanta Braves Vs San Diego Padres Predicting The Winner

May 15, 2025

Atlanta Braves Vs San Diego Padres Predicting The Winner

May 15, 2025 -

Erik And Lyle Menendez Resentencing A Real Possibility

May 15, 2025

Erik And Lyle Menendez Resentencing A Real Possibility

May 15, 2025 -

Analyzing Trumps Stance The Reality Of Us Canada Trade Relations

May 15, 2025

Analyzing Trumps Stance The Reality Of Us Canada Trade Relations

May 15, 2025 -

The Latest On Anthony Edwards And His Baby Mama Drama A Twitter Analysis

May 15, 2025

The Latest On Anthony Edwards And His Baby Mama Drama A Twitter Analysis

May 15, 2025

Latest Posts

-

Tramp Napad Vrz Mediumite I Zakana Za Sudstvoto

May 15, 2025

Tramp Napad Vrz Mediumite I Zakana Za Sudstvoto

May 15, 2025 -

Inavguratsiya Trampa Vs Vistava Otello Yak Zminivsya Zovnishniy Viglyad Dzho Baydena

May 15, 2025

Inavguratsiya Trampa Vs Vistava Otello Yak Zminivsya Zovnishniy Viglyad Dzho Baydena

May 15, 2025 -

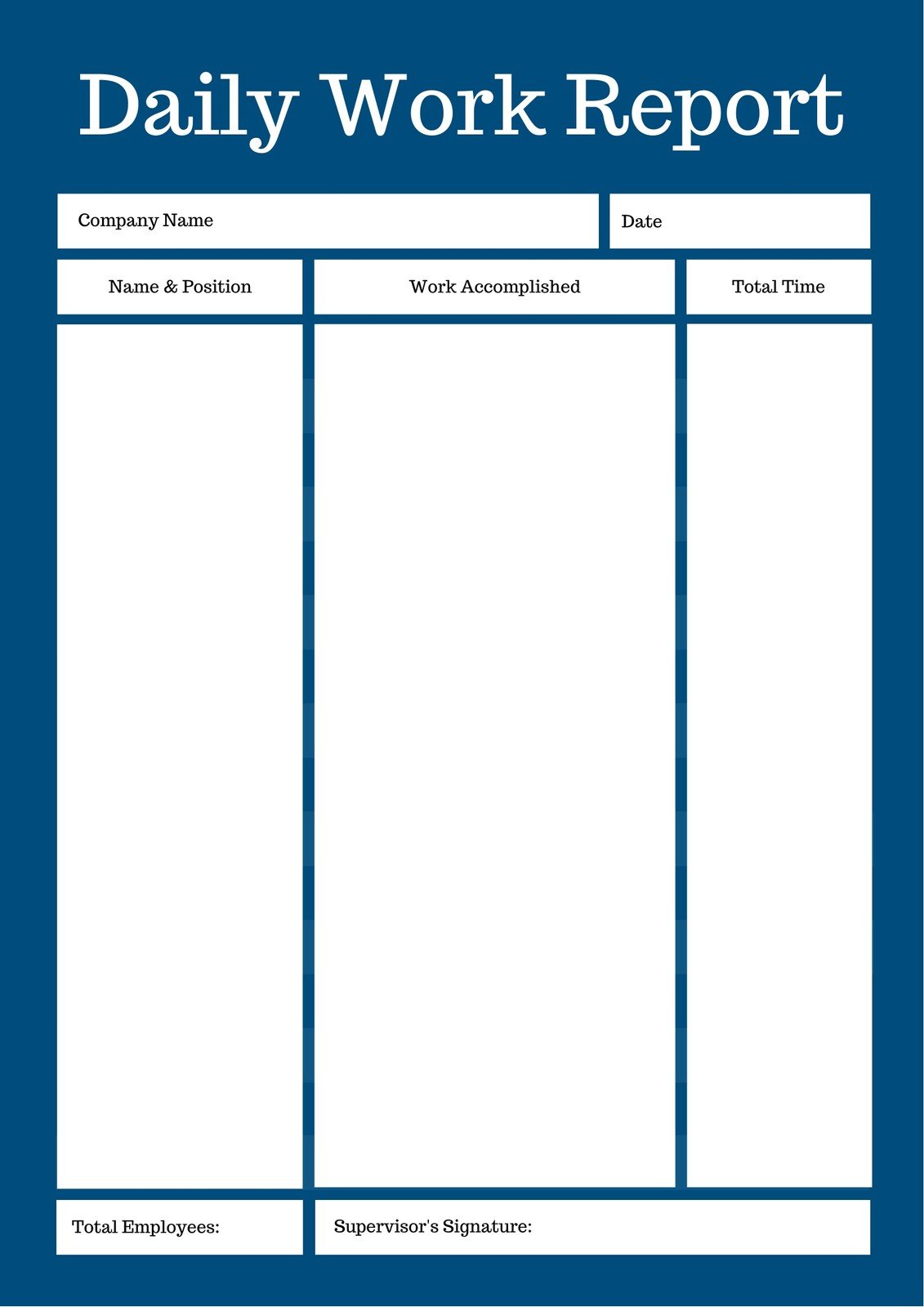

Breaking News Bangladesh China Caribbean Developments First Ups Daily Report

May 15, 2025

Breaking News Bangladesh China Caribbean Developments First Ups Daily Report

May 15, 2025 -

Dzho Bayden Na Vistavi Otello Analiz Yogo Obrazu Ta Porivnyannya Z Inavguratsiyeyu Trampa

May 15, 2025

Dzho Bayden Na Vistavi Otello Analiz Yogo Obrazu Ta Porivnyannya Z Inavguratsiyeyu Trampa

May 15, 2025 -

First Posts First Up Key Global Events Bangladesh China Caribbean And Beyond

May 15, 2025

First Posts First Up Key Global Events Bangladesh China Caribbean And Beyond

May 15, 2025