2024 OpenAI Developer Event Highlights: Effortless Voice Assistant Creation


New OpenAI APIs for Streamlined Voice Interaction

The core of effortless voice assistant creation lies in powerful and easy-to-use APIs. OpenAI's latest offerings significantly simplify the development process:

Enhanced Speech-to-Text Capabilities

OpenAI has dramatically improved its speech-to-text capabilities, addressing key challenges in voice assistant development:

- Improved accuracy, even in noisy environments: The new APIs promise significantly higher accuracy, even with background noise or competing sounds. Fewer transcription errors are crucial for voice assistants that must work reliably in real-world settings.

- Support for multiple languages and accents: Developers can build voice assistants that understand a wider range of languages and accents, which extends their applications to a larger, more global user base and makes voice technology more inclusive.

- Faster processing for real-time applications: Lower latency enables near-real-time responses, which interactive applications need in order to feel seamless.

- Integration with existing workflows: The APIs are designed to fit into existing development workflows, so speech-to-text can be added to current projects with minimal disruption.
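Accuracy claims like the ones above are usually expressed as word error rate (WER): the number of substituted, deleted, and inserted words divided by the number of words in a reference transcript. The following is a minimal Python sketch, not part of any OpenAI SDK, for checking a transcript returned by a speech-to-text API against a reference you wrote by hand. The example sentences are made up.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word error rate: (substitutions + deletions + insertions) / reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # Rolling row of a word-level Levenshtein table: before processing
    # reference word i, prev[j] is the edit distance between ref[:i-1] and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # reference word deleted
                curr[j - 1] + 1,     # extra word inserted
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    reference = "set a timer for ten minutes"
    transcript = "set the timer for ten minute"  # made-up API output
    print(f"WER: {word_error_rate(reference, transcript):.2f}")  # WER: 0.33
```

Lower is better. Running the same check on recordings made in quiet and noisy rooms is one way to verify the noise-robustness claim for your own use case.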

Advanced Natural Language Understanding (NLU)

Understanding the intent behind user voice commands is paramount. OpenAI's advancements in NLU make this significantly easier:

- Improved intent recognition and entity extraction: The APIs identify what the user wants and extract the relevant details from their speech, even in complex queries. This understanding is the foundation of any intelligent voice assistant.

- Contextual understanding for more natural conversations: The assistant can take earlier turns of the conversation into account, which makes exchanges more natural, meaningful, and personalized.

- Support for complex dialogue management: Developers can design more engaging, dynamic interactions and handle complex, multi-turn dialogue flows more easily.

- Reduced reliance on extensive training data: Because OpenAI's models are pre-trained, developers need far less task-specific training data. This shortens the development cycle and lowers the barrier to entry.
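Intent recognition and conversational context can be handled on the application side roughly as follows. This Python sketch assumes you have prompted a language model to reply with a small JSON object naming the intent and its entities; the schema, the intent names, and the helper names are all hypothetical. The conversation history uses the role/content message shape of OpenAI's Chat Completions API, but the actual API call is left out so the example runs offline.

```python
import json
from dataclasses import dataclass, field

# Hypothetical intent set and reply schema, e.g.
# {"intent": "set_reminder", "entities": {"task": "call Sam", "time": "5 pm"}}.
# You would instruct the model to answer in this shape; it is not an OpenAI format.
KNOWN_INTENTS = {"set_reminder", "get_weather", "small_talk"}


@dataclass
class Intent:
    name: str
    entities: dict[str, str]


def parse_intent(raw: str) -> Intent:
    """Validate a model's JSON reply; anything malformed becomes 'unknown'."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return Intent("unknown", {})
    if not isinstance(data, dict):
        return Intent("unknown", {})
    name = data.get("intent")
    entities = data.get("entities", {})
    if not isinstance(name, str) or name not in KNOWN_INTENTS:
        return Intent("unknown", {})
    if not isinstance(entities, dict):
        return Intent("unknown", {})
    # Coerce keys and values to strings so downstream handlers get a uniform type.
    return Intent(name, {str(k): str(v) for k, v in entities.items()})


@dataclass
class Conversation:
    """Sliding window of recent turns, in Chat Completions role/content form."""
    max_turns: int = 6
    messages: list[dict[str, str]] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.max_turns < 1:
            raise ValueError("max_turns must be at least 1")

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})
        # Keep only the newest max_turns messages so requests stay small.
        self.messages = self.messages[-self.max_turns:]


if __name__ == "__main__":
    convo = Conversation(max_turns=4)
    convo.add("user", "Remind me to call Sam at 5 pm")
    # Pretend this JSON came back from the model.
    convo.add("assistant", '{"intent": "set_reminder", "entities": {"task": "call Sam", "time": "5 pm"}}')
    print(parse_intent(convo.messages[-1]["content"]))
```

Falling back to an "unknown" intent, rather than raising, lets the assistant ask a clarifying question when the model's output does not match the expected shape.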

Simplified Text-to-Speech Synthesis

Converting text to natural-sounding speech is crucial for a positive user experience. OpenAI's improvements in this area include:

- Natural-sounding voices with improved intonation and emotion: Synthesized speech sounds more human and expressive, which makes interactions more engaging and pleasant.

- Customization options for voice tone and style: Developers can tailor the voice to match their brand or application, or offer users personalized voice profiles.

- Integration with various platforms and devices: Compatibility with a wide range of platforms and devices lets a voice assistant reach more users, which is essential for widespread adoption.

- Reduced latency for a smoother user experience: Shorter delays keep interactions responsive and intuitive.
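One common way to cut perceived latency, assumed here rather than described by the event, is to synthesize speech sentence by sentence while the text reply is still streaming in, instead of waiting for the whole reply. The Python sketch below handles only the text side: it regroups streamed fragments into complete sentences. The TTS call itself is left as a placeholder, and the sentence-splitting rule is a deliberately naive assumption.

```python
import re
from typing import Iterable, Iterator

# Naive split point: ., ! or ? followed by whitespace. Abbreviations such as
# "Dr. Smith" will be split too; a production system would need something smarter.
_SENTENCE_BOUNDARY = re.compile(r"(?<=[.!?])\s+")


def sentence_chunks(fragments: Iterable[str]) -> Iterator[str]:
    """Regroup streamed text fragments into whole sentences.

    Each sentence is yielded as soon as the whitespace after its final
    punctuation arrives, so it can be handed to a TTS request while the
    rest of the reply is still being generated.
    """
    buffer = ""
    for fragment in fragments:
        buffer += fragment
        parts = _SENTENCE_BOUNDARY.split(buffer)
        # All parts except the last are complete sentences.
        for sentence in parts[:-1]:
            if sentence.strip():
                yield sentence.strip()
        buffer = parts[-1]
    # Flush whatever remains when the stream ends.
    if buffer.strip():
        yield buffer.strip()


if __name__ == "__main__":
    streamed = ["Sure", ", your reminder", " is set.", " Anything", " else?"]
    for sentence in sentence_chunks(streamed):
        print(sentence)  # in a real app: pass to your TTS call here
```

Because the function is a generator, the first sentence can start playing while later fragments are still arriving.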

Pre-trained Models for Rapid Prototyping

OpenAI provides powerful pre-trained models to significantly accelerate the development process:

Ready-to-Use Voice Assistant Templates

Starting from scratch can be time-consuming. OpenAI offers pre-built templates to expedite development:

- Pre-built models for common voice assistant functions (e.g., scheduling, reminders, information retrieval): These provide a solid foundation for common tasks, enabling rapid prototyping and iterative development.

- Easy customization for specific use cases: The templates can be adapted to particular needs, so developers can focus on the features that make their product unique.

- Shorter development cycles and faster time-to-market: Faster development means quicker deployment and a faster return on investment.

- Lower barrier to entry for developers with varying skill levels: Even developers with limited experience can quickly build functional voice assistants, which widens access to the technology.
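The idea of adapting a generic template to a specific use case can be sketched in Python as an immutable base configuration plus a helper that returns a customized copy. The template fields, the adapt helper, and the reminders example are hypothetical and not an OpenAI-published format; the only real values are "alloy" and "nova", which are among the preset voice names of OpenAI's text-to-speech API.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class AssistantTemplate:
    """Hypothetical template format; not an OpenAI-published schema."""
    name: str
    system_prompt: str
    intents: tuple[str, ...]
    voice: str = "alloy"  # one of OpenAI's preset TTS voices


# Generic starting point for a reminders assistant.
REMINDERS = AssistantTemplate(
    name="reminders",
    system_prompt="You help the user create, list, and cancel reminders.",
    intents=("set_reminder", "list_reminders", "cancel_reminder"),
)


def adapt(
    template: AssistantTemplate,
    *,
    name: str,
    extra_instructions: str = "",
    extra_intents: tuple[str, ...] = (),
    voice: Optional[str] = None,
) -> AssistantTemplate:
    """Return a customized copy; the shared base template is never mutated."""
    return replace(
        template,
        name=name,
        system_prompt=f"{template.system_prompt} {extra_instructions}".strip(),
        intents=template.intents + tuple(extra_intents),
        voice=voice or template.voice,
    )


if __name__ == "__main__":
    medication = adapt(
        REMINDERS,
        name="medication_reminders",
        extra_instructions="Focus on medication schedules and refills.",
        extra_intents=("refill_prescription",),
        voice="nova",
    )
    print(medication.intents)
```

Freezing the dataclass means several products can share one base template without accidentally changing each other's settings.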

Modular Components for Flexible Design

OpenAI's modular approach to voice assistant design allows for maximum flexibility:

- Individual modules for speech recognition, NLU, and TTS: Developers can select the modules they need and combine them into a voice assistant tailored to their requirements.

- Ability to mix and match modules: Because each module is independent, components can be swapped or recombined to build unique voice interfaces.

- Support for iterative development and experimentation: Individual modules can be tested and refined without rebuilding the whole assistant.

- Support for highly customized voice experiences: Taken together, this modularity enables truly unique, personalized voice assistants.
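A modular design like the one described above can be expressed in Python with one small interface per stage and a pipeline that depends only on those interfaces. In this sketch the interface and class names are hypothetical, and the fake modules stand in for real speech-to-text, language-model, and TTS calls so the pipeline can be tested offline.

```python
from typing import Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class Understanding(Protocol):
    def respond(self, text: str) -> str: ...


class TextToSpeech(Protocol):
    def synthesize(self, text: str) -> bytes: ...


class VoicePipeline:
    """Chains the three stages; any object with the right method fits."""

    def __init__(self, stt: SpeechToText, nlu: Understanding, tts: TextToSpeech) -> None:
        self.stt = stt
        self.nlu = nlu
        self.tts = tts

    def handle(self, audio: bytes) -> bytes:
        text = self.stt.transcribe(audio)
        reply = self.nlu.respond(text)
        return self.tts.synthesize(reply)


# Offline stand-ins; in practice each would wrap a real API client.
class FakeSTT:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")  # pretend the audio bytes are already text


class EchoNLU:
    def respond(self, text: str) -> str:
        return f"You said: {text}"


class ShoutNLU:
    def respond(self, text: str) -> str:
        return text.upper() + "!"


class FakeTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")  # pretend UTF-8 bytes are audio


if __name__ == "__main__":
    pipeline = VoicePipeline(FakeSTT(), EchoNLU(), FakeTTS())
    print(pipeline.handle(b"turn on the lights"))
    # Swap only the understanding module; the other stages are unchanged.
    pipeline.nlu = ShoutNLU()
    print(pipeline.handle(b"turn on the lights"))
```

Because typing.Protocol uses structural typing, a module does not need to inherit from anything; it only has to provide the expected method, which keeps swapping modules cheap.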

Tools and Resources for Effortless Voice Assistant Development

OpenAI provides comprehensive resources to support developers throughout the entire development lifecycle:

Improved Documentation and Tutorials

Comprehensive documentation and tutorials are critical for successful development:

- Comprehensive guides and examples for developers of all levels: OpenAI offers resources for everyone from beginners to experts.

- Step-by-step instructions for integrating OpenAI APIs: Clear, concise instructions make integration smoother and less error-prone.

- Access to a supportive community forum for troubleshooting: An active developer community helps those who run into problems.

- Regular updates and improvements: OpenAI keeps its documentation and resources current as the APIs evolve.

Enhanced Debugging and Monitoring Tools

Effective debugging and monitoring are key to building robust voice assistants:

- Real-time monitoring of voice assistant performance: Developers can track performance metrics and spot potential issues as they happen.

- Identification and resolution of errors and issues: Enhanced debugging tools make it faster to find and fix errors.

- Improved insights into user interactions and feedback: Understanding how users interact with the assistant provides valuable data for improving its design and performance.

- Data-driven optimization for continuous improvement: User data and performance metrics let developers keep improving their voice assistants over time.
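Whatever monitoring tools the platform provides, it can help to record per-stage latency in your own code as well. The following Python sketch, with a hypothetical LatencyMonitor class and made-up stage names and timings, times each stage of the assistant and reports nearest-rank percentiles, since tail latency such as p95 usually matters more to users than the average.

```python
import math
import time
from collections import defaultdict
from contextlib import contextmanager
from typing import Iterator


class LatencyMonitor:
    """Collects per-stage latencies (ms) and reports nearest-rank percentiles."""

    def __init__(self) -> None:
        self._samples: dict[str, list[float]] = defaultdict(list)

    @contextmanager
    def measure(self, stage: str) -> Iterator[None]:
        start = time.perf_counter()
        try:
            yield
        finally:
            # Record even if the stage raised, so failures still show up in timings.
            self.record(stage, (time.perf_counter() - start) * 1000)

    def record(self, stage: str, millis: float) -> None:
        self._samples[stage].append(millis)

    def count(self, stage: str) -> int:
        return len(self._samples.get(stage, []))

    def percentile(self, stage: str, pct: float) -> float:
        """Nearest-rank percentile, e.g. pct=95 for p95."""
        if not 0 < pct <= 100:
            raise ValueError("pct must be in (0, 100]")
        values = sorted(self._samples.get(stage, []))
        if not values:
            raise ValueError(f"no samples recorded for {stage!r}")
        rank = math.ceil(pct * len(values) / 100)
        return values[rank - 1]


if __name__ == "__main__":
    monitor = LatencyMonitor()
    for ms in (120, 95, 310, 101, 99):  # made-up speech-to-text timings
        monitor.record("stt", ms)
    with monitor.measure("tts"):
        time.sleep(0.01)  # stand-in for a real TTS call
    print(f"stt p50={monitor.percentile('stt', 50)} ms, p95={monitor.percentile('stt', 95)} ms")
```

Tracking each stage separately shows whether a slow response comes from transcription, understanding, or synthesis.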

Conclusion

The 2024 OpenAI Developer Event marked a significant step forward for voice assistant creation. The new APIs, pre-trained models, and supporting resources make it easier than ever to build sophisticated, engaging voice assistants, so developers can focus on innovation and user experience instead of low-level technical details. Ready to build your own voice assistant? Explore the OpenAI platform and get started today.

Featured Posts

-

Hungary Central Bank Faces Fraud Claims Key Findings From Index

Apr 26, 2025

Hungary Central Bank Faces Fraud Claims Key Findings From Index

Apr 26, 2025 -

The Importance Of Middle Management A Valuable Asset

Apr 26, 2025

The Importance Of Middle Management A Valuable Asset

Apr 26, 2025 -

Srovnani Cen Potravin Velikonoce Vs Bezny Tyden

Apr 26, 2025

Srovnani Cen Potravin Velikonoce Vs Bezny Tyden

Apr 26, 2025 -

Boosting Global Ties Indonesia Considers Exporting Unique Rice Strains

Apr 26, 2025

Boosting Global Ties Indonesia Considers Exporting Unique Rice Strains

Apr 26, 2025 -

80

Apr 26, 2025

80

Apr 26, 2025

Latest Posts

-

Federal Agency Appoints Anti Vaccination Advocate To Lead Autism Research

Apr 27, 2025

Federal Agency Appoints Anti Vaccination Advocate To Lead Autism Research

Apr 27, 2025 -

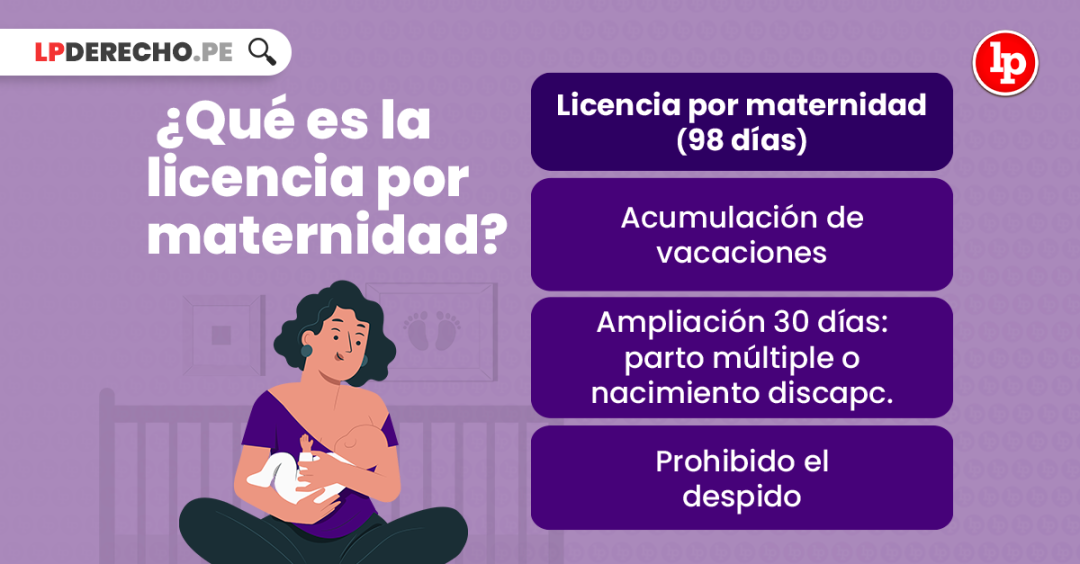

Un Ano De Licencia De Maternidad Remunerada Para Las Tenistas De La Wta

Apr 27, 2025

Un Ano De Licencia De Maternidad Remunerada Para Las Tenistas De La Wta

Apr 27, 2025 -

Tenistas Wta Pago Completo Durante Un Ano De Licencia De Maternidad

Apr 27, 2025

Tenistas Wta Pago Completo Durante Un Ano De Licencia De Maternidad

Apr 27, 2025 -

Licencia De Maternidad De Un Ano Para Tenistas Wta Un Avance Historico

Apr 27, 2025

Licencia De Maternidad De Un Ano Para Tenistas Wta Un Avance Historico

Apr 27, 2025 -

Wta Establece Licencia De Maternidad De Un Ano Para Sus Tenistas

Apr 27, 2025

Wta Establece Licencia De Maternidad De Un Ano Para Sus Tenistas

Apr 27, 2025